The accuracy and flexibility of facial recognition technology have seen it used to secure everything from smartphones to Australia’s airports, but a team of security researchers is warning of potential manipulation after finding a way to trick the systems using deepfake images.

Researchers within the McAfee Advanced Threat Research (ATR) team have been exploring ways that ‘model hacking’ – also known as adversarial machine learning – can be used to trick artificial intelligence (AI) computer-vision algorithms into misidentifying the content of the images they see.

This approach has previously been used to show how autonomous-car safety systems, which can read speed-limit signs and adjust the car’s speed accordingly, could be tricked by modifying street signs with stickers that were misread by the systems.

Subtle modifications to the signs would be picked up by the computer-vision algorithms but might be indiscernible to the human eye – an approach that the McAfee team has now successfully turned towards the challenge of identifying people from photos, as in the screening of passports.

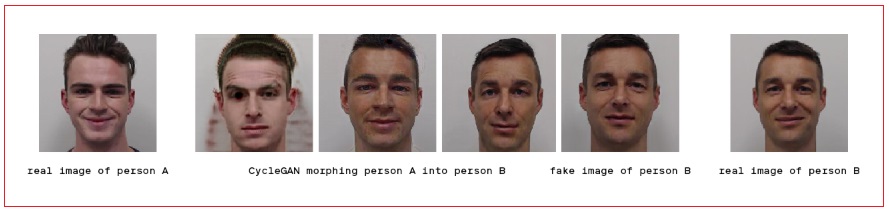

Starting with photos of two people – called A and B – ATR researchers used what they described as a “deep learning-based morphing approach” to generate large numbers of composite images that combined features from both.

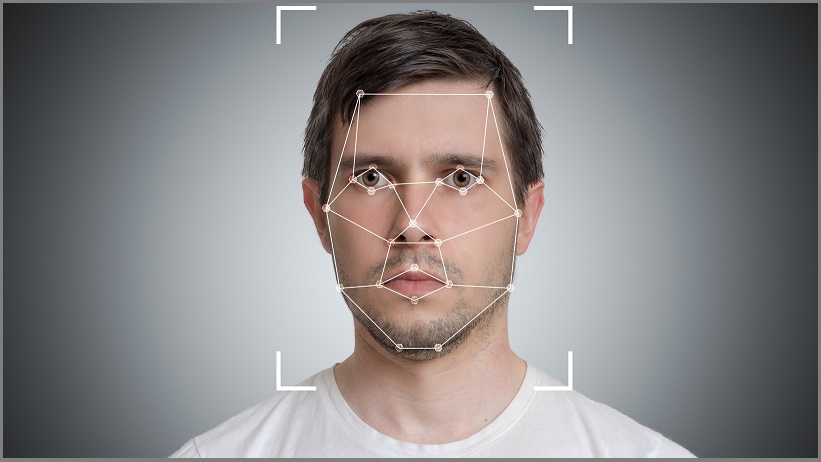

The images were fed into a generative adversarial network (GAN) – a pair of AI models comprising a ‘generator’ that creates new faces and a ‘discriminator’ that evaluates their verisimilitude – to iteratively adjust the minute facial landmarks that face-recognition algorithms use to make a match.

McAfee face scanners can be tricked. Source: McAfee

Those landmarks define the structure and shape of the face, as well as the relative position of facial features like the corner of the eye and tip of the chin, to create digital models of a person’s face.
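To make the idea of "relative position" concrete, here is a minimal Python sketch that turns a handful of named landmark points into scale-independent measurements. The landmark names, the coordinates and the `landmark_features` helper are all hypothetical and chosen purely for illustration; the article does not describe how any particular system builds its face model, and production systems typically rely on learned representations rather than hand-picked distances.

```python
import math
from itertools import combinations


def landmark_features(landmarks):
    """Convert named (x, y) landmarks into pairwise distances.

    Distances are divided by the gap between the two eye corners so the
    result does not change when the same face is photographed larger or
    smaller. This is an illustrative simplification, not a real matcher.
    """
    left = landmarks["left_eye_corner"]
    right = landmarks["right_eye_corner"]
    scale = math.dist(left, right)
    if scale == 0:
        raise ValueError("eye corners coincide; cannot normalise")

    names = sorted(landmarks)
    return {
        (a, b): math.dist(landmarks[a], landmarks[b]) / scale
        for a, b in combinations(names, 2)
    }


# Hypothetical pixel coordinates for four landmarks on one face.
face = {
    "left_eye_corner": (30.0, 40.0),
    "right_eye_corner": (70.0, 40.0),
    "nose_tip": (50.0, 60.0),
    "chin_tip": (50.0, 100.0),
}

features = landmark_features(face)
for pair, value in features.items():
    print(pair, round(value, 3))
```

Because every distance is expressed relative to the eye spacing, doubling the size of the photo leaves the features unchanged, which is the sense in which such a model captures the shape of a face rather than the size of the image.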

While early-stage images looked like a messy blur between the pictures of persons A and B, the team found that after a few hundred iterations the CycleGAN system they used would produce composite photos that a human observer would identify as person B – but that a facial-recognition system would identify as person A.
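The gap between what a person sees and what a matcher decides is easier to picture with a toy example. The Python sketch below assumes a matcher that compares numeric feature vectors using cosine similarity and a fixed threshold, then nudges a vector that starts at person B's features towards person A's until the matcher accepts it. This is a deliberately simple stand-in, not the CycleGAN process the researchers used: the vectors, the 0.95 threshold and the function names are all invented for illustration, and the sketch works on abstract numbers rather than images.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def is_match(probe, enrolled, threshold=0.95):
    """Toy matcher: accept when similarity reaches the threshold."""
    return cosine_similarity(probe, enrolled) >= threshold


def steps_until_match(start, target, step=0.01, max_steps=1000, threshold=0.95):
    """Move `start` a small fraction towards `target` on each iteration.

    Returns the number of iterations needed before the toy matcher
    accepts the adjusted vector, along with that vector. Returns
    (None, last_vector) if the limit is reached first.
    """
    current = list(start)
    for i in range(max_steps + 1):
        if is_match(current, target, threshold):
            return i, current
        current = [c + step * (t - c) for c, t in zip(current, target)]
    return None, current


# Hypothetical feature vectors for the two people.
person_a = [0.9, 0.1, 0.3, 0.7]
person_b = [0.2, 0.8, 0.6, 0.1]

steps, adjusted = steps_until_match(person_b, person_a)
print("B matches A before adjustment:", is_match(person_b, person_a))
print("Iterations until the matcher accepts:", steps)
```

The point of the sketch is only that the matcher's decision depends on a numeric comparison against a threshold, so anything that shifts those numbers far enough changes the outcome. Whether the resulting image still looks like person B to a human is a separate question that this toy does not model.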

Flagging a vulnerability

The approach hasn’t been tested in the field, but lab tests suggest it could matter in an era of increasingly automated face-recognition systems, such as the SmartGates currently being rolled out in Australian airports by the Department of Home Affairs. This kind of manipulation could potentially allow someone on a no-fly list to forge a passport photo that would let them pass an automated gate undetected.

“As we begin to adopt these types of technologies, we need to consider the adversarial misuse of these systems,” Raj Samani, a McAfee fellow and chief scientist within the McAfee ATR team, told Information Age ahead of the research being presented at this month’s Black Hat USA event.

“When you start to think about the practical uses of the technology – such as keeping people who shouldn’t be flying from getting on airplanes, and known ‘bads’ from getting inside places – the application of this becomes very significant.”

The technique has so far only been tested in ‘white box’ and ‘grey box’ scenarios – where operating parameters are tightly controlled by researchers and the operation of algorithms can be closely monitored – but experiments in real-world ‘black box’ scenarios would show just how much of a threat the technique poses.

Biometric systems have been a constant target for security researchers and hackers: 3D printers and even sticky tape have been used to fool some fingerprint scanners, and the accuracy of biometric systems is under constant evaluation.

Late last year, the US National Institute of Standards and Technology (NIST) released fingerprint, facial image and optical character recognition (OCR) data sets to help designers of biometric security systems evaluate their accuracy.

Past hacks of biometric systems led their designers to add ‘liveness detection’ features to confirm that a real person is being scanned, while newer smartphone-based face recognition systems use depth-detecting cameras to measure faces in 3D.

Nonetheless, Samani said, the McAfee team’s successful model hacking highlights the kind of vulnerabilities that must be continually evaluated as new systems increasingly bypass human protections and hand critical security decisions to AI systems.

“It’s not something we have actively seen being exploited,” he said, “but by being open and transparent about the vulnerabilities, we can explore these limitations.”

“As biometric systems become more widely deployed, it’s important to consider these attack scenarios.”