Reading stop signs and staying in lanes may be easy for most of us, but attempting to understand the outside world can still be difficult for machines.

And this leaves them vulnerable to a new cyber threat.

McAfee’s Advanced Threat Research team has been experimenting with machine learning models similar to those used by autonomous vehicles to understand their environment.

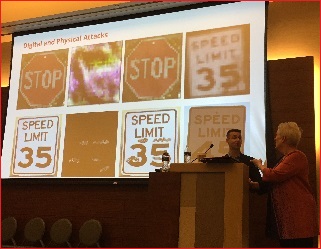

The researchers used what they called an adversarial machine learning approach to find weaknesses in the models that could then be exploited by digitally manipulating images to cause misclassifications.

A stop sign, for example, can be made to look like a speed limit sign.

That digital manipulation was then reproduced with stickers placed on signs and tested using their trained classification model. The camera saw one sign and thought it was another.

McAfee researchers Steve Povolny (L) and Celeste Fralick (R) demonstrating the misclassifications.
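The core idea can be illustrated with a toy example. This is not McAfee's method or model: it assumes a hypothetical linear classifier with invented weights and applies the fast gradient sign method, a well-known technique from adversarial machine learning research. Every input feature is nudged by at most a fixed amount, yet the combined effect is enough to flip a "stop" input to "speed limit".

```python
# Toy illustration of an adversarial perturbation (fast gradient sign method).
# Hypothetical: the weights, bias, input and epsilon are invented for
# illustration; real sign classifiers are deep neural networks on images.

def score(weights, bias, x):
    """Linear score: positive means 'speed limit', otherwise 'stop'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return "speed limit" if score(weights, bias, x) > 0 else "stop"

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, x, epsilon):
    """Move each feature by epsilon in the direction that raises the score.

    For a linear score, the gradient with respect to x is the weight vector,
    so the sign of each weight gives the direction. No single feature
    changes by more than epsilon.
    """
    return [xi + epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.5, -0.3, 0.8, -0.6, 0.4]
bias = -0.2
stop_sign = [0.1, 0.6, 0.2, 0.5, 0.1]  # hypothetical feature vector

print(classify(weights, bias, stop_sign))  # stop
adversarial = fgsm_perturb(weights, stop_sign, 0.2)
print(classify(weights, bias, adversarial))  # speed limit
```

Each change is small on its own, but because every feature moves in the direction that helps the attacker, the shifts add up across the whole input. Physical stickers play a similar role to this digital perturbation.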

In China, Tencent’s Keen Security Lab has demonstrated the dangers of this kind of attack in the real world.

Using stickers placed on the road, they tricked a Tesla in Autopilot mode into detecting a traffic lane that wasn't there, causing the car to steer itself into the fake lane.

Despite extensive research into this attack vector, Steve Povolny, head of McAfee’s Advanced Threat Research team, said there was no evidence it had been used against cars in the real world.

“That's probably a good decade out from real world attacks,” he told Information Age.

“But when you have a fleet of millions of vehicles that can talk to each other, can talk to pedestrians, that can talk to the infrastructure and the internet around them, that can drive themselves, or even just partially drive themselves – then you've got a rolling attack surface.”

Elon Musk’s ambitious Robotaxi concept relies on a fleet of autonomous vehicles, as do Uber’s future plans for its transport platform.

Pre-empting the hackers

As the technology becomes more advanced and pervasive, Povolny thinks it is important to try to stay one step ahead of people who will look to exploit weaknesses in order to cause harm.

“We're trying to ask ourselves ‘What do we really need to protect?’,” he said.

“Not just ‘Is this conceptually possible?’, but ‘How would the adversary take this information and implement a real-world attack?’

“Today, I just don't think it has any kind of a value proposition. Imagine if, in the worst-case scenario, they put some stickers out and they cause a semi-autonomous Tesla or Google car to crash.

“Well, maybe somebody is hurt, but does that do anything fundamental except reveal the fact that they're attacking those systems? No.

“Where we would predict this becomes a problem is when you have a fleet mentality of vehicles, where you can cause damage to multiple vehicles at the same time.

“You could potentially disrupt an entire transportation supply chain and really have a fundamental impact on the economy.”

Even though there has been no sign of deliberate attacks on autonomous vehicles, Povolny said the fact that cybersecurity researchers were interested in them highlighted the potential of this attack vector.

This shows the constant tug-of-war between white and black hat hackers.

“The research community as a whole is kind of interested in and focused on the same types of flaws, and that, to me, says that we're in line with what the adversary is looking at as well,” Povolny said.

“Those always line up, whether we're beating them to the punch or, more realistically, following where the attacks are going, because the research community and the underground hacker community are always looking at the same types of targets.”

Casey Tonkin travelled to the McAfee MPower conference in Las Vegas as a guest of McAfee.