Killer robots may have met their maker, after a consortium of leading technology figures signed a pledge vowing to oppose the development of lethal autonomous weapon systems (LAWS).

Signatories commit to neither participating in nor supporting the development, manufacture, trade or use of lethal autonomous weapons, with the aim of preventing “an arms race that the international community lacks the technical tools and global governance systems to manage.”

Released at the 2018 International Joint Conference on Artificial Intelligence (IJCAI) in Stockholm, the Lethal Autonomous Weapons Pledge has more than 150 companies and 2,400 individual signatories.

Signatories include Tesla and SpaceX CEO Elon Musk, Google DeepMind, ClearPath Robotics/OTTO Motors, the European Association for AI and the XPRIZE Foundation.

The pledge zeroes in on LAWS, which it defines as weapons that can identify, target and kill a person without human intervention.

President of the Future of Life Institute, Max Tegmark, announced the agreement.

“I’m excited to see AI leaders shifting from talk to action, implementing a policy that politicians have thus far failed to put into effect,” he said.

“AI has huge potential to help the world – if we stigmatise and prevent its abuse. AI weapons that autonomously decide to kill people are as disgusting and destabilising as bioweapons, and should be dealt with in the same way.”

Toby Walsh, Professor of Artificial Intelligence at the University of New South Wales and a member of the ACS AI Ethics Committee, was a key organiser of the pledge.

“We cannot hand over the decision as to who lives and who dies to machines. They do not have the ethics to do so,” he said.

“I encourage you and your organisations to pledge to ensure that war does not become more terrible in this way.”

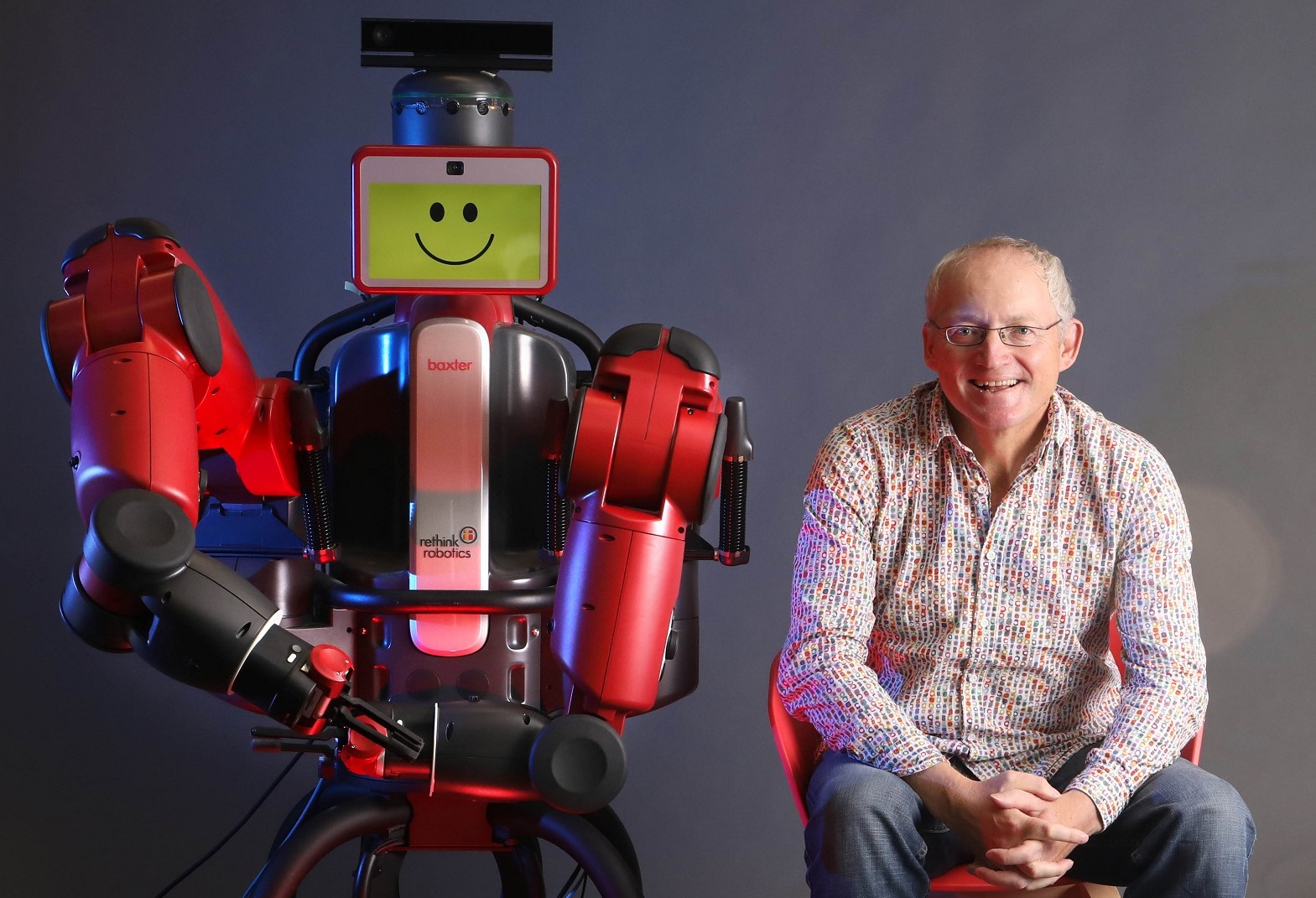

Professor Toby Walsh helped organise the agreement. Source: Supplied


The agreement

The pledge explains that its signatories have taken this position on both ethical and pragmatic grounds.

“There is a moral component to this position, that we should not allow machines to make life-taking decisions for which others – or nobody – will be culpable,” it states.

“There is also a powerful pragmatic argument: lethal autonomous weapons, selecting and engaging targets without human intervention, would be dangerously destabilising for every country and individual.”

The agreement addresses concerns that LAWS are likely to end up on the black market and hence become obtainable by terrorists and despots.

It also calls on political decision-makers to follow suit.

“We, the undersigned, call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons.”

The United Nations will meet next month to further discuss LAWS, as it continues a push to ban fully autonomous weapons.

Full text of pledge

Artificial intelligence (AI) is poised to play an increasing role in military systems. There is an urgent opportunity and necessity for citizens, policymakers, and leaders to distinguish between acceptable and unacceptable uses of AI.

In this light, we the undersigned agree that the decision to take a human life should never be delegated to a machine. There is a moral component to this position, that we should not allow machines to make life-taking decisions for which others – or nobody – will be culpable.

There is also a powerful pragmatic argument: lethal autonomous weapons, selecting and engaging targets without human intervention, would be dangerously destabilizing for every country and individual. Thousands of AI researchers agree that by removing the risk, attributability, and difficulty of taking human lives, lethal autonomous weapons could become powerful instruments of violence and oppression, especially when linked to surveillance and data systems.

Moreover, lethal autonomous weapons have characteristics quite different from nuclear, chemical and biological weapons, and the unilateral actions of a single group could too easily spark an arms race that the international community lacks the technical tools and global governance systems to manage. Stigmatizing and preventing such an arms race should be a high priority for national and global security.

We, the undersigned, call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons. These currently being absent, we opt to hold ourselves to a high standard: we will neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons. We ask that technology companies and organizations, as well as leaders, policymakers, and other individuals, join us in this pledge.