Last week, Google officially claimed that its researchers had achieved ‘quantum supremacy’ – the point at which a quantum computer can perform a calculation that no classical computer could complete in a reasonable amount of time.

The milestone was announced with a paper in Nature and an accompanying blog post.

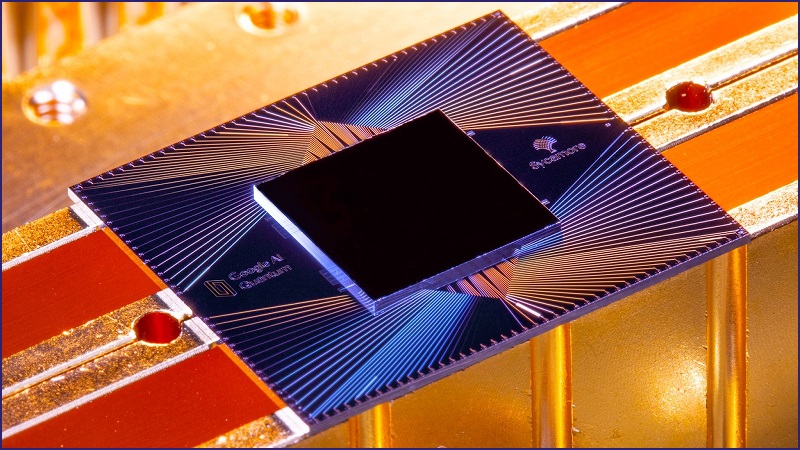

“We developed a new 54-qubit processor, named ‘Sycamore’, that is comprised of fast, high-fidelity quantum logic gates, in order to perform the benchmark testing,” Google researchers John Martinis and Sergio Boixo said in the post.

“Our machine performed the target computation in 200 seconds, and from measurements in our experiment we determined that it would take the world’s fastest supercomputer 10,000 years to produce a similar output.

“With the first quantum computation that cannot reasonably be emulated on a classical computer, we have opened up a new realm of computing to be explored.”

Google ran an experiment in which the Sycamore processor sampled the output (bit strings) of a pseudo-random 53-qubit quantum circuit. One of the chip’s 54 qubits was not functional.

Accurately emulating the output of this quantum circuit on a classical computer is “infeasible”, according to Google, which attempted the simulation using the world’s largest supercomputers.

Point of contention

Google’s announcement of quantum supremacy was hardly a well-kept secret.

A month ago, Google’s paper made news when a version of it was briefly posted on a NASA website.

Although the paper was quickly taken down, the news had already been picked up and spread around the world.

This gave Google’s quantum competitor, IBM, a chance to get on the front foot and publish a blog post of its own, criticising the claim.

“A headline that includes some variation of ‘Quantum Supremacy Achieved’ is almost irresistible to print, but it will inevitably mislead the general public,” four of IBM’s researchers said in the post.

“First because […] by its strictest definition the goal has not been met.

“But more fundamentally, because quantum computers will never reign ‘supreme’ over classical computers, but will rather work in concert with them, since each have their unique strengths.”

IBM’s researchers challenged Google, saying the tech giant had not fully accounted for all of a classical computer’s resources when estimating how long a classical simulation would take.

“Classical computers have resources of their own such as a hierarchy of memories and high-precision computations in hardware, various software assets, and a vast knowledge base of algorithms, and it is important to leverage all such capabilities when comparing quantum to classical,” the IBM post said.

“When their comparison to classical was made, [Google] relied on an advanced simulation that leverages parallelism, fast and error-free computation, and large aggregate RAM, but failed to fully account for plentiful disk storage.”

IBM argued that it could simulate the same task on a classical computer in just two and a half days, rather than the 10,000 years Google had estimated.

“We urge the community to treat claims that, for the first time, a quantum computer did something that a classical computer cannot with a large dose of skepticism due to the complicated nature of benchmarking an appropriate metric,” the IBM researchers wrote.
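Why does disk storage matter? A rough back-of-the-envelope calculation helps. A full state-vector simulation of a 53-qubit circuit has to keep track of 2^53 complex amplitudes. The Python sketch below assumes each amplitude is stored as a single-precision (8-byte) or double-precision (16-byte) complex number. Those storage sizes, and the helper name `state_vector_bytes`, are illustrative assumptions, not details taken from either company’s work.

```python
# Back-of-the-envelope memory estimate for a full state-vector simulation.
# Assumption: every one of the 2**n amplitudes is stored as a complex number,
# either single precision (8 bytes) or double precision (16 bytes).

PETABYTE = 10 ** 15  # decimal petabyte, in bytes


def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int) -> int:
    """Return the bytes needed to store all 2**num_qubits amplitudes."""
    return (2 ** num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for label, size in [("single precision", 8), ("double precision", 16)]:
        total = state_vector_bytes(53, size)
        # Prints roughly 72.1 PB and 144.1 PB respectively.
        print(f"53 qubits, {label}: {total / PETABYTE:.1f} PB")
```

The result is tens of petabytes, beyond the aggregate RAM of any single machine but within the range of large disk arrays. That is why IBM’s objection centres on disk storage rather than memory.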

Dr Martin Fuechsle, a physicist and Director of Quantum Technology at Archer Explorations, levelled similar criticisms at Google’s research paper.

“You can always engineer a problem to suit your needs,” Fuechsle told Information Age.

“Let’s say you’ve got Joe Average, and he’s not really good at anything.

“But if you think about it hard enough you can find something he’s better at than everybody else, even if it’s just remembering what he did two years ago – because he’s the only one who can do that.

“The problem Google has solved is not really useful and was very tailor-made to be tackled more efficiently than classical computers.”

Google responds

In an interview with MIT Technology Review, Google CEO Sundar Pichai responded to IBM’s criticism by saying the phrase ‘quantum supremacy’ was “a technical term of art”.

“People in the community understand exactly what the milestone means,” Pichai said.

“It’s no different from when we all celebrate AI.

“There are people who conflate it with general artificial intelligence, which is why I think it’s important we publish.

“It’s important that people who are explaining these things help the public understand where we are, how early it is, and how you’re always going to apply classical computing to most of the problems you need in the world.

“That will still be true in the future.”