Facebook content moderators who developed mental health issues due to their work will share in more than $80 million after the tech giant agreed to a legal settlement in principle.

The preliminary settlement filed on Friday in San Mateo Superior Court will see Facebook pay each content moderator a lump sum of $US1,000, plus a further $US1,500 if they have been diagnosed with a mental illness resulting from the moderation work.

Those diagnosed with multiple concurrent conditions will be eligible for up to $US6,000.

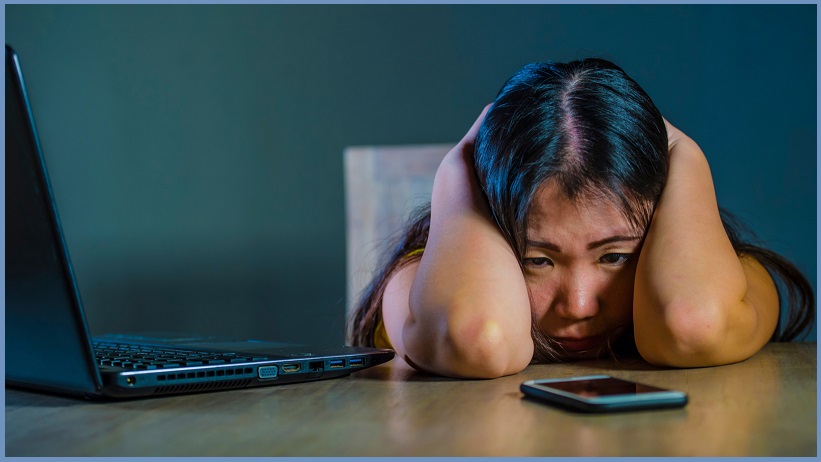

The lawsuit had alleged that Facebook failed to provide a safe workplace for the content moderators, who were regularly forced to view horrific images and videos as part of their work.

The settlement covers more than 11,000 content moderators in Arizona, California, Florida and Texas, including those who developed depression, post-traumatic stress disorder or other conditions from the work.

As part of the preliminary agreement, Facebook will also roll out a series of changes to its moderation tools and provide better access to counselling for its moderators.

“We are so pleased that Facebook worked with us to create an unprecedented program to help people performing work that was unimaginable even a few years ago,” the plaintiffs’ lawyer Steve Williams said in a statement.

“The harm that can be suffered from this work is real and severe.”

A Facebook spokesperson said the company was committed to supporting its content moderators.

“We are grateful to the people who do this important work to make Facebook a safe environment for everyone,” they said.

“We’re committed to providing them additional support through this settlement and in the future.”

Facebook brought in huge numbers of content moderators in 2016 after widespread criticism that it was failing to remove harmful content from its platform.

These moderators were contracted through large consulting firms in the US.

Reports by The Verge revealed that some moderators earned as little as $US28,800 a year in a high-pressure environment where they regularly viewed traumatic content.

When users flag a post on Facebook, one of these moderators often has to decide whether it stays up or is taken down.

This means they are regularly subjected to hate speech, murders, suicides and other graphic content.

Former Facebook content moderator Selene Scola developed PTSD from her work and later sued Facebook with the aim of establishing a testing and treatment program for current and former moderators.

As part of her work, Scola had to regularly view images of rape, murder and suicide, and developed symptoms of PTSD after nine months in the job.

As part of the settlement, Facebook has also committed to a range of changes in how it works with its content moderators.

Its review tools will mute audio by default and show videos in black and white, with the changes reaching 80 per cent of moderators by the end of the year and all moderators by 2021.

Moderators will also have access to a weekly one-on-one coaching session with a licensed mental health professional and monthly group therapy sessions with other moderators.

If a moderator is going through a mental health crisis, they will be able to access a licensed counsellor within 24 hours.

Facebook will also introduce new requirements for the contracting firms that employ the moderators, including screening applicants for emotional resilience and displaying information about the available psychological support at workstations.