The Brady Bunch-style ‘gallery view’ of videoconferencing apps like Zoom, Microsoft Teams, and Google Meet has become a sign of the times, but it could also be a major security issue, according to Israeli researchers who warn that screenshots of group calls can “vastly and easily” betray the identities of online collaborators.

Researchers at Israel’s Ben-Gurion University of the Negev (BGU) used artificial intelligence (AI) tools to analyse more than 15,700 images of videoconference gallery views that had been shared on Instagram and Twitter. Participants often take such screenshots as mementos or as records of attendance.

By comparing these images against a database of 142,000 face images scraped from social-media sites, the AI routines identified 1153 people who had appeared in more than one meeting, the researchers reported in a newly published paper.

“Video conference users are facing prevalent security and privacy threats,” said Dr Michael Fire of the BGU Department of Software and Information Systems Engineering, warning that “it is relatively easy to collect thousands of publicly available images of videoconference meetings and extract personal information about the participants.”

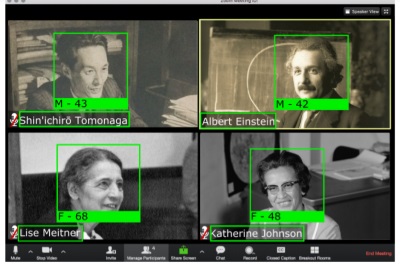

The AI could spot faces in 80 per cent of cases and could also discern the gender and names of participants, and estimate their age – further increasing the accuracy of results when the images were cross-referenced against social-network data.

Researchers were also able to identify groups of coworkers by the fact that they frequently appear in meetings together – potentially betraying the identities of project collaborators that may not, or should not, be public knowledge.

Ben-Gurion University Researchers show how posted screenshots can reveal age, face, and username. Source: Ben-Gurion University

“It is possible to identify the same individual’s participation at different meetings by simply using either face recognition or other extracted user features,” the researchers wrote.

“There is danger not only from a malicious user but also from other users in the meeting that can innocently upload a single photo, which can be maliciously used to affect their and their family’s privacy.”

Faces weren’t the only giveaway: many users could be identified by the office or home environments visible behind them, leading the researchers to recommend stock images as virtual backgrounds to mask participants’ real surroundings.

Users should also consider adopting generic usernames like ‘iPhone’ or ‘iZoom’ on videoconferencing services, avoid posting images of videoconference sessions online, and refrain from sharing videos.

Sharing too much

New applications for AI-based image-recognition technologies are quickly creating new exposures for individuals and their employers, with MIT recently pulling a “derogatory” image-training database and the Australian Federal Police coming under fire after privacy regulators announced they would investigate its use of controversial Clearview AI face-recognition technology.

The BGU research shows how those exposures grow as face-to-face relationships give way to instant, global collaboration over ubiquitous videoconferencing.

Many users remain unaware of the platforms’ security implications, which came to light early in the COVID-19 pandemic when ‘Zoombombing’ entered the vernacular. A password dump and extensive meeting breaches led Zoom to apologise and pause feature development for a 90-day security overhaul.

Security issues didn’t dampen the world’s enthusiasm for Zoom, whose shares have surged this year as the expanding pandemic sent users scrambling for ways to maintain communication.

Zoom reported that it was supporting 300m daily meeting participants in April, up from 200m in March and just 10m in December 2019.

Usage has continued to grow on the back of new customers like the National Australia Bank, which has grappled with widespread office closures and the shifting of operational duties to home-bound workers. Zoom has publicly predicted that it would carry 2 trillion meeting minutes this year if usage continued at April’s record pace.

Heavier use has also put videoconferencing platforms in the firing line of cybercriminals, with a host of Zoom vulnerabilities, a major threat to Microsoft Teams, and high-severity flaws in Cisco Webex all coming to light.

The potential for compromise is extensive, the BGU researchers said, warning that an attacker could use deepfake tools to infiltrate a meeting by posing as someone else.

“Since organisations are relying on video conferencing to enable their employees to work from home and conduct meetings, they need to better educate and monitor a new set of security and privacy threats,” Fire said.

“Parents and children of the elderly also need to be vigilant, as video conferencing is no different than other online activity.”