Researchers have taught a computer how to smell.

Teams from Intel and Cornell University designed a neural algorithm based on the brain’s olfactory system (which recognises scents) and used it to teach a neuromorphic computer chip how to smell 10 different hazardous chemicals.

The process is intended to mimic the effect a scent has on the human brain.

Nabil Imam, one of the co-authors of the study published last week in Nature Machine Intelligence, explained how the research sits at the intersection of neuroscience and computing.

“My friends at Cornell study the biological olfactory system in animals and measure the electrical activity in their brains as they smell odours,” he said.

“On the basis of these circuit diagrams and electrical pulses, we derived a set of algorithms and configured them on neuromorphic silicon, specifically our Loihi test chip.”

The system was able to learn a new scent after being exposed to only a single sample, and could identify what it had learnt even when the scent was masked by a mixed cloud of other smells.

It can also take in new information without losing or distorting what it has already learnt. Deep learning systems, by contrast, tend to overwrite earlier training when they are trained on new datasets, a problem known as catastrophic forgetting.

The study's senior author, Cornell University's Thomas Cleland, said the model was easy to retrain because the system had been designed to resemble the brain more closely.

“When you learn something, it permanently differentiates neurons,” he said.

“When you learn one odour, the interneurons are trained to respond to particular configurations, so you get that segregation at the level of interneurons.

“So on the machine side, we just enhance that and draw a firm line.”

Potential uses for this technology include robots or other "electronic nose" systems that could detect hazardous materials or even carry out quality control.

Neuromorphic computing

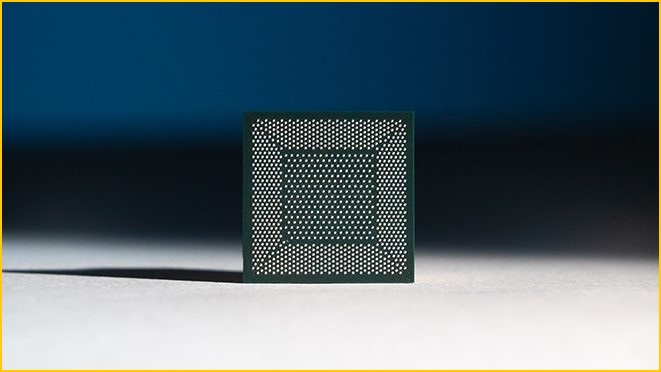

Loihi is Intel's early neuromorphic research chip: a processor designed to mimic the structure of the brain more closely than a traditional computer chip does.

One of the defining features of neuromorphic computing is that, instead of constantly shuttling data between a separate processor and memory, a neuromorphic chip stores and processes information in the same place, within its network of artificial neurons. This allows for a much greater degree of parallel processing.

Because neuromorphic computing is more brain-like, it offers a different avenue of research for machine learning and artificial intelligence, and it can use less power than conventional deep neural networks running on standard hardware.

"My next step is to generalise this approach to a wider range of problems, from sensory scene analysis (understanding the relationships between objects you observe) to abstract problems like planning and decision-making," Imam said.

“Understanding how the brain’s neural circuits solve these complex computational problems will provide important clues for designing efficient and robust machine intelligence.”

The brain is often described as the most complex object in the known universe, and learning how to harness its mechanisms will require a great deal more research.

“Just because we know how to build hardware that simulates brain components doesn’t mean we know how to make use of it,” said computer scientist Dr Peter Stratton from the University of Queensland.

“That’s because we don’t really know how the brain uses its own hardware.

“Until we understand more about how a brain computes things, the full potential of neuromorphic chips isn’t likely to be realised.”