Scientists have developed what they are calling the world’s first speech neuroprosthesis that uses artificial intelligence to decode full words directly from people’s brains.

Researchers from the University of California, San Francisco (UCSF) developed the technique, which translates the brain signals used to control the vocal cords directly into text on a computer screen.

Dr Edward Chang, a neurosurgeon at UCSF and lead author of the research, said the study could be life-changing for people living with severe paralysis.

“To our knowledge, this is the first successful demonstration of direct decoding of full words from the brain activity of someone who is paralysed and cannot speak,” he said.

“It shows strong promise to restore communication by tapping into the brain's natural speech machinery.”

The research centred on a man in his late 30s who had a stroke more than 15 years ago, which left him with severely limited movement of his head and of his body below the neck.

He has primarily been communicating using a pointer attached to a hat which he uses to touch letters on a screen.

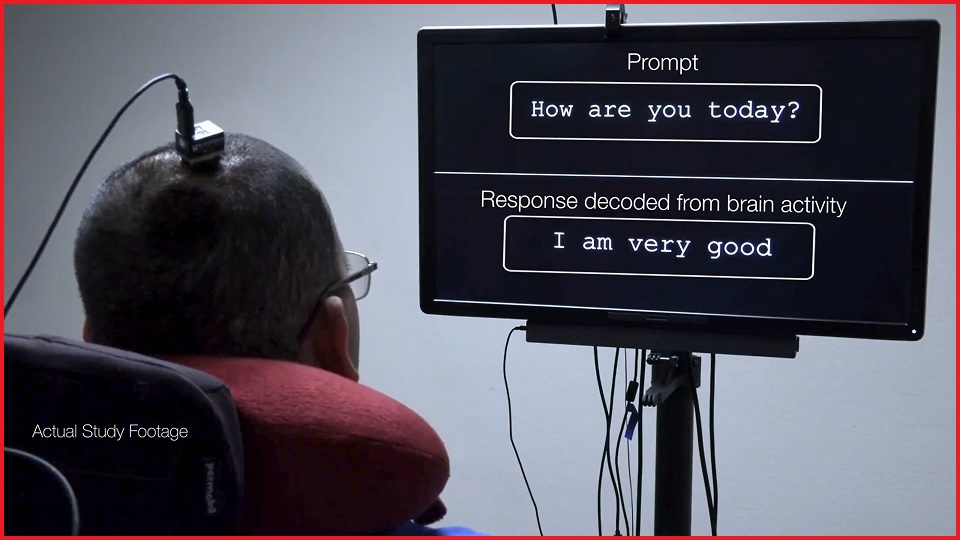

The researchers implanted an electrode array into the man’s speech motor cortex and began recording neural activity as he attempted to say 50 different words.

Across 48 sessions lasting a total of 22 hours, the man tried to say each of the words as many times as possible, giving the researchers a large and varied set of recordings.

Using neural networks trained on the data, along with language models of the kind commonly used for autocorrect, the researchers were able to demonstrably decode his speech, albeit with a limited vocabulary.

David Moses, an engineer who developed methods for decoding the brain signals in real time, said it was exciting to see the proof of concept for this brain-to-speech recognition technology.

“We were thrilled to see the accurate decoding of a variety of meaningful sentences,” he said.

“We’ve shown that it is actually possible to facilitate communication in this way and that it has potential for use in conversational settings.”

The UCSF research paves the way for devices that differ from existing communications technology – like the NeuroNode – in an important way.

Rather than letting users select words or letters on a screen using slight muscle movements, or the neurological signals that trigger muscle movement, the UCSF neuroprosthesis interprets complete words, making it more analogous to speech than to typing.

According to Dr Chang, this means it could be a significantly faster method of communication.

“With speech, we normally communicate information at a very high rate, up to 150 or 200 words per minute,” Chang said.

“Going straight to words, as we’re doing here, has great advantages because it’s closer to how we normally speak.”

The study was funded in part through a research agreement with Facebook, which is interested in bringing similar mind-reading technology to consumer electronics.

Facebook has already published details about its mind-reading ambitions, including a prototype non-invasive headset that measures brain signals.

In a blog post from 2019 – which has since been updated to include research from Chang and Moses – Facebook outlined how it might integrate this sort of technology into its augmented and virtual reality offerings.

“Being able to recognise even a handful of imagined commands, like ‘home,’ ‘select,’ and ‘delete,’ would provide entirely new ways of interacting with today's VR systems — and tomorrow's AR glasses,” Facebook said at the time.