Researchers have identified camera ‘fingerprints’ that can help trace the origin of a photo or video, raising hopes that the technique could assist investigators in child abuse cases.

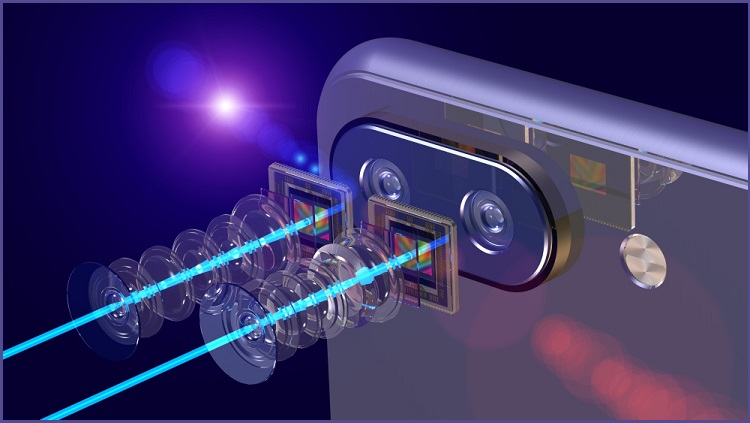

The research team at the University of Groningen developed a novel system that can "see" manufacturing imperfections in the camera sensors of modern electronics, imperfections that are impossible to discern with the naked eye.

These imperfections leave a unique noise pattern in images and videos. That pattern could help distinguish between different sensor types, or even between individual devices using the same sensor model, linking batches of media to a given set of cameras such as smartphones or SLRs.

If sensors are profiled for their noise pattern before entering the market, and the manufacturer maintains a database matching patterns to device serial numbers, law enforcement investigators could use that information to uncover the type of device used to capture images or video.

In child abuse investigations, analysing content published on dark web sites could help trace it back to a device ID.

Additionally, it could help police determine if confiscated storage devices contain material that the suspect captured using their cameras or if the material was bought from others.

The unique sensor-specific patterns could also lead to user identification when compared against other material published on social media platforms, online photo repositories, YouTube, forums, and more.

Technical background

The Groningen University researchers published all technical details in a paper that accompanies the presentation of the new system.

In short, the profiling of camera sensors relies on extracting video frames, cropping the centre of each frame, and feeding the crops to trained machine learning models that act as a deterministic computational identification system.
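The pre-processing steps described above can be sketched in a few lines. This is only an illustration, not the researchers' code: the function names, the 4-pixel crop size and the toy "video" are assumptions, and frames are plain lists of rows to keep the example free of dependencies. The choice of five frames follows the figure the researchers quote for classification.

```python
import random

def center_crop(frame, size):
    """Return the central size x size window of a 2-D frame (list of rows)."""
    height, width = len(frame), len(frame[0])
    if size > height or size > width:
        raise ValueError("crop size exceeds frame dimensions")
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in frame[top:top + size]]

def sample_center_patches(frames, n_frames, size, seed=None):
    """Pick n_frames distinct random frames and crop the centre of each."""
    rng = random.Random(seed)
    chosen = rng.sample(range(len(frames)), n_frames)
    return [center_crop(frames[i], size) for i in sorted(chosen)]

# Toy "video" (hypothetical): 10 frames of 6 rows x 8 columns.
# Each pixel value encodes its frame index and position.
video = [[[f * 100 + r * 8 + c for c in range(8)] for r in range(6)]
         for f in range(10)]

patches = sample_center_patches(video, n_frames=5, size=4, seed=0)
print(len(patches), "patches of", len(patches[0]), "x", len(patches[0][0]))
```

In a real pipeline the patches would then be passed to a trained classifier; that stage is not sketched here because the article does not describe the model.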

The models were trained to ignore "shot noise", which varies with the capturing conditions, and to focus on fixed pattern noise (FPN) and photo response non-uniformity (PRNU) noise.

This type of noise is noticeable only in longer-exposure shots, where specific pixels may appear brighter than average.

Even then, it would be hard for regular users to notice, as sensors that pass quality testing show the effect only mildly.

Because the strength of each pattern depends on light intensity, FPN tends to dominate when the scene is darker, while PRNU becomes prominent when there is more light.
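The dependence on light can be illustrated with a common simplified sensor model, in which each pixel records light × (1 + gain deviation) + dark offset + shot noise. This is a sketch under stated assumptions rather than the model used in the paper: the offset plays the role of FPN, the gain deviation the role of PRNU, shot noise is approximated as Gaussian, and all noise levels, pixel counts and function names are hypothetical.

```python
import random

def make_sensor(n_pixels, seed):
    """Draw a hypothetical per-pixel dark offset (FPN) and gain deviation (PRNU)."""
    rng = random.Random(seed)
    offset = [rng.gauss(0.0, 0.5) for _ in range(n_pixels)]   # additive
    gain = [rng.gauss(0.0, 0.01) for _ in range(n_pixels)]    # multiplicative
    return offset, gain

def capture(light, offset, gain, rng):
    """One frame of a uniformly lit scene under the simplified model."""
    shot_sigma = light ** 0.5  # Gaussian stand-in for Poisson shot noise
    return [light * (1.0 + g) + d + rng.gauss(0.0, shot_sigma)
            for d, g in zip(offset, gain)]

def estimate_pattern(light, offset, gain, n_frames, rng):
    """Average many frames so shot noise shrinks, then remove the mean level."""
    total = [0.0] * len(offset)
    for _ in range(n_frames):
        frame = capture(light, offset, gain, rng)
        total = [t + p for t, p in zip(total, frame)]
    mean_frame = [t / n_frames for t in total]
    level = sum(mean_frame) / len(mean_frame)
    return [p - level for p in mean_frame]

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

offset, gain = make_sensor(n_pixels=500, seed=1)
rng = random.Random(2)
dark = estimate_pattern(1.0, offset, gain, 200, rng)        # dim scene
bright = estimate_pattern(10000.0, offset, gain, 200, rng)  # bright scene

print("dark scene   vs FPN :", round(pearson(dark, offset), 2))
print("dark scene   vs PRNU:", round(pearson(dark, gain), 2))
print("bright scene vs FPN :", round(pearson(bright, offset), 2))
print("bright scene vs PRNU:", round(pearson(bright, gain), 2))
```

Under these assumptions the pattern recovered from the dim scene follows the additive offset, while the pattern recovered from the bright scene follows the multiplicative gain, because the gain term scales with the amount of light and the offset does not.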

Hence, the accuracy of prediction changes depending on whether the analysed shots were taken indoors or outdoors.

In the best case (multiple models were tested), the researchers achieved a camera sensor identification rate of about 75% on experiments with 502 test videos using 28 devices.

In a more limited task of classifying 18 sensor models, the experiments achieved a "fingerprinting" accuracy of 99%.

The Groningen University researchers have published their dataset and prediction models in a GitHub repository.

Application potential

The researchers claim that their new system only needs five random frames from a video clip to classify the media, and it can be used to distinguish over 10,000 classes for varying computer vision applications.

Although the research project has concluded, lead researcher George Azzopardi remains in touch with interested law enforcement agencies that are ready to fund a next round aimed at tackling practical challenges.

The biggest challenge right now is distinguishing between two very similar sources. For these, the method of "simple majority voting" didn't yield results good enough for real-world investigations.
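For readers unfamiliar with the term, majority voting over per-frame predictions can be sketched as follows. The article does not detail how the researchers implemented it, so this is a generic illustration; the function name and the camera labels are hypothetical.

```python
from collections import Counter

def majority_vote(frame_predictions):
    """Return (label, vote_share) for the most common per-frame prediction.

    If two labels tie, Counter.most_common keeps the one seen first.
    """
    if not frame_predictions:
        raise ValueError("need at least one prediction")
    counts = Counter(frame_predictions)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(frame_predictions)

# Five frames from one clip, each classified independently (labels are made up).
clear = majority_vote(["cam_A", "cam_A", "cam_A", "cam_B", "cam_A"])
close = majority_vote(["cam_A", "cam_B", "cam_A", "cam_B", "cam_A"])

print(clear)
print(close)
```

When two sources are nearly identical, per-frame predictions tend to split between them, as in the second call, and the winning label rests on a single frame. That slim margin is one plausible reason a simple vote falls short of investigative standards.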