Adobe has accelerated the rollout of its watermark showing whether an image has been AI-generated, as the tech giant strives for it to become the “ultimate signal of transparency in digital content”.

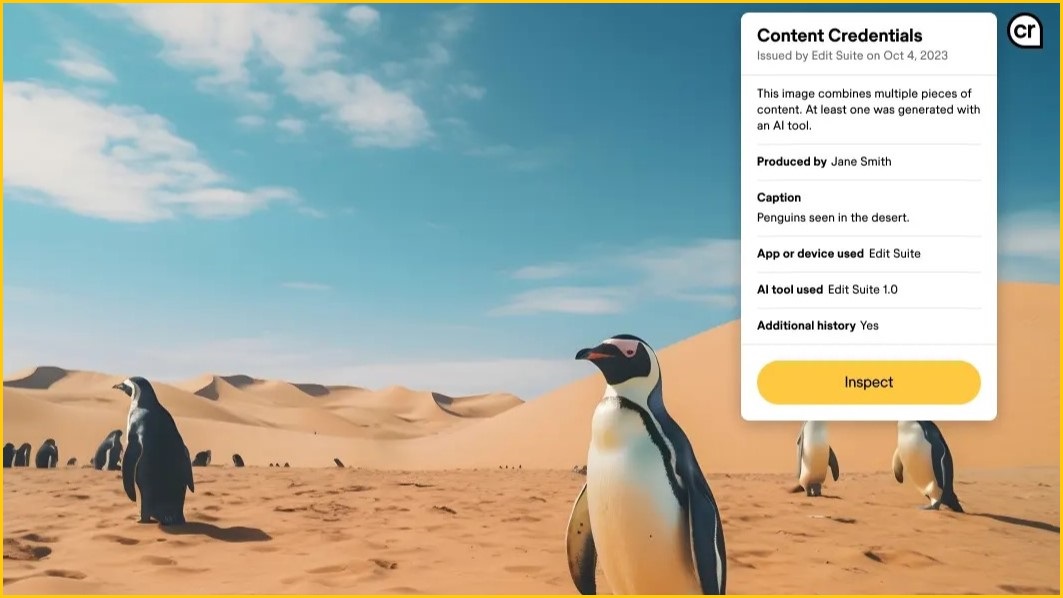

In 2021, Adobe announced Content Credentials, a “CR” symbol that can be attached to an image, along with its metadata, to establish who owns it and whether it has been manipulated.

The feature was made available in the Photoshop beta last year, and Adobe has now announced a large-scale expansion.

Microsoft has now added Content Credentials to all AI-generated images created with its Bing Image Creator; the credentials confirm the date and time of the original creation.

Comms giant Publicis Groupe will also use the service and will share a preview of how it plans to deploy Content Credentials at enterprise scale across its worldwide network of designers, marketers and creatives as part of a “trusted campaign of the future”.

Leica Camera and Nikon have also signed on to build the watermarks into upcoming camera models.

Content Credentials can be added through Adobe’s photo and editing platforms, which attach a symbol to an image’s metadata showing who created and owns it. When the logo is present, users can hover over it to display this information, along with the AI tool used to make the image, if applicable.

This will help to “restore trust online”, Adobe said, describing the Content Credentials symbol as an “icon of transparency” that is set to become the “ultimate signal of transparency in digital content”.

“As thousands of images and videos are produced daily to feed the global pipeline of digital experiences, the release and adoption of this new icon are significant milestones, empowering the advertising and creative industry to be authenticating client and creative work,” Adobe said in a blog post.

“We believe Content Credentials empower a basic right for everyone to understand context and more about a piece of content.

“We see a future where consumers will see the icon on social platforms, news sites and digital brand campaigns, and habitually check for Content Credentials just like they look for nutrition labels on food.”

Spotting AI images

The newfound ease of access to free tools for creating AI-generated images has brought with it widespread concerns over deepfakes and misinformation. This has spurred efforts to build tools that can verify a piece of content’s authenticity or signal that it has been manipulated.

Earlier this year, a group of tech giants, including Adobe, pledged to develop watermarking technology to combat the spread of fake images.

Google has also launched a similar tool, dubbed SynthID. In contrast with Adobe’s offering, which includes a visible symbol on the image as well as invisible metadata, Google’s digital watermark is embedded directly into the image’s pixels and is invisible to the naked eye, though software can detect it to identify whether an image is AI-generated.

Digimarc has created its own digital watermark tool, which embeds copyright information and can be used to track whether content is used in AI training sets.

There are concerns, however, that these digital watermarks could easily be circumvented or removed from images.

University of Maryland computer science professor Soheil Feizi released a paper earlier this year concluding that “we don’t have any reliable watermarking at this point”.

“We broke all of them,” Feizi said.

Ben Colman, the CEO of AI detection startup Reality Defender, said that the watermarks themselves could be removed or manipulated.

“Watermarking at first sounds like a noble and promising solution, but its real-world applications fail from the onset when they can be easily faked, removed or ignored,” Colman told Wired.