Aiming to sidestep the ChatGPT AI platform’s habits of inventing facts and glossing over mistakes, cyber threat intelligence vendor Recorded Future has trained a generative AI platform with a narrowly defined data set supporting its analysts and customers.

The firm – whose Intelligence Cloud manages over 100 terabytes of text, images, and technical threat intelligence data relating to cyber security threats, malicious actors, incidents, and more – built its Recorded Future AI platform on more than a decade’s worth of expert analysis, feeding OpenAI’s GPT platform more than 40,000 analyst notes from its Insikt Group threat intelligence division.

That insight was combined with the Intelligence Cloud data to provide an automated cyber security support tool that, Recorded Future CEO and co-founder Dr Christopher Ahlberg said, automates much of the information gathering and analysis that has long been the domain of human analysts.

Recorded Future was established more than a decade ago “to go beyond search, and organise the internet for analytics,” Ahlberg explained as the new AI platform was introduced, and its data holdings have since seen “growth matching the speed of the internet and enabling countries and the world’s foremost organisations with intelligence.”

“We believe we can eliminate the cyber skills shortage and increase the capacity for cyber readiness by immediately surfacing actionable intelligence.”

The system’s use of GPT – the same AI engine that powers the game-changing ChatGPT platform – lets it automatically summarise the company’s extensive data holdings, writing plain-English analyses designed to help human operators better understand cyber threats as they arise.

By automatically generating real-time insight with a constrained and accurate data set, the platform is designed to reduce pressure on cyber security staff whose high rates of burnout have in part been fuelled by chronic information overload.

“The speed and scale of threats overwhelm today’s analysts,” Recorded Future’s chief technology officer and co-founder Staffan Truvé told Information Age, “and the application of AI can drastically help solve [problems from] too much data, too few people, and the attacks of motivated threat actors, by automating a lot of time-consuming tasks.”

“AI insights are utilised by analysts when performing detailed intelligence analysis, where the AI insights summarise the retrieved information into a simple report [and] provide concise and actionable summaries of changes in the threat landscape.”

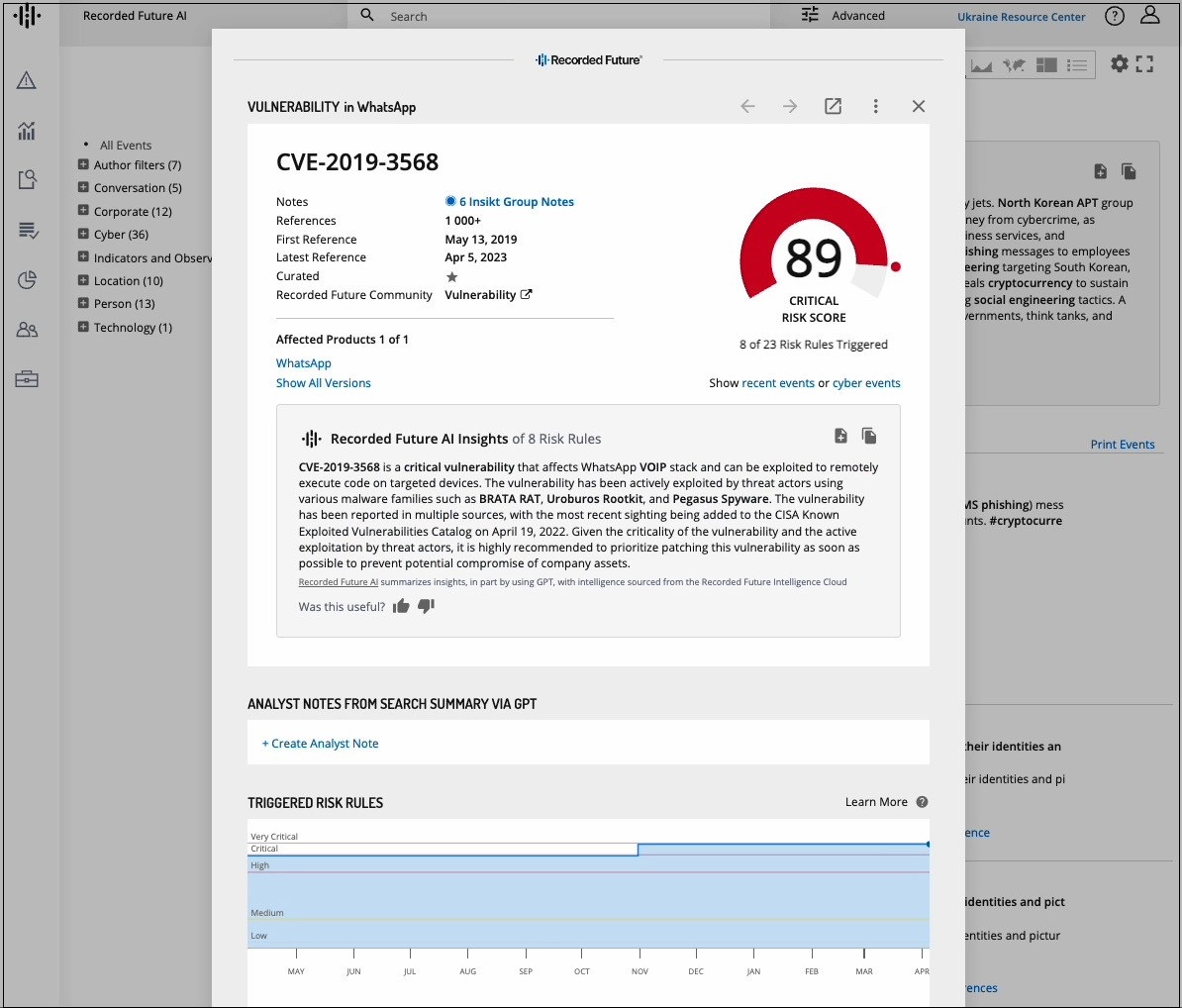

An example of the output. Image: supplied

Like ChatGPT, but accurate

Accuracy and currency have been major issues with ChatGPT, which was trained on a massive data set that stops in the year 2021, and is continually learning from the inputs of more than 100 million users prodding and poking it in both legitimate and unorthodox ways.

But by linking its in-house version of the GPT platform with the always-current data set of the company’s Intelligence Cloud and the Intelligence Graph it feeds, Recorded Future’s ‘walled garden’ AI approach works around such limitations to make its security threat analyses both accurate and contemporary.

“Unlike the raw GPT model, which utilises old training data, we are utilising real-time intelligence to be summarised and analysed by AI,” Truvé explained, “which means that the data being summarised is always clear.”

“The model can be retrained when necessary, but the basic domain knowledge changes slowly,” he continued. “The key is that the data handled by the model will always be current, since our Intelligence Graph was built to scale at the speed of the internet and is updated continuously, in real time.”
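The general pattern Truvé describes, in which a fixed model summarises data that is fetched fresh at query time, can be illustrated with a minimal Python sketch. This is not Recorded Future's implementation: the `ThreatRecord` type, the `select_current` and `build_summary_prompt` functions, the seven-day freshness window and the sample data are all hypothetical, and the call to an actual language model is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ThreatRecord:
    """Hypothetical stand-in for one item of threat intelligence."""
    source: str
    observed_at: datetime
    text: str


def select_current(records, now, max_age=timedelta(days=7)):
    """Keep only records observed within max_age of now, newest first."""
    fresh = [r for r in records if now - r.observed_at <= max_age]
    return sorted(fresh, key=lambda r: r.observed_at, reverse=True)


def build_summary_prompt(records, now):
    """Assemble a prompt asking a model to summarise only the supplied records."""
    lines = [
        "Summarise the threat reports below for a security analyst.",
        "Use only the information in these reports; if they are silent on a point, say so.",
        "",
    ]
    for i, r in enumerate(select_current(records, now), start=1):
        lines.append(f"[{i}] {r.observed_at:%Y-%m-%d} ({r.source}): {r.text}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative data only; the dates and reports are invented.
    now = datetime(2023, 4, 12, tzinfo=timezone.utc)
    records = [
        ThreatRecord("feed-a", now - timedelta(days=1), "New phishing kit observed."),
        ThreatRecord("feed-b", now - timedelta(days=400), "Old campaign report."),
    ]
    # The 400-day-old record is filtered out before the prompt is built.
    print(build_summary_prompt(records, now))
```

The point of the design is that currency comes from the records passed in at query time rather than from the model's training data, so stale items can be dropped without retraining anything.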

The decision to leverage the GPT generative AI engine, but to intentionally avoid the random and arbitrary inventions of ChatGPT, reflects a growing trend towards standalone, domain-specific generative AI implementations trained on high-quality, well-structured and consistent data.

Private generative AI platforms are, Gartner has predicted, set to rapidly steer innovation in areas like drug design, material science, chip design, synthetic data, and parts design.

By 2025, Gartner research vice president for technology innovation Brian Burke recently said, more than 30 per cent of new drugs and materials will be “systematically discovered using generative AI techniques – and that is just one of numerous industry use cases.”

“Enterprise uses for generative AI are far more sophisticated,” the firm noted. “ChatGPT, while cool, is just the beginning.”