Demand for processing power from the surging AI sector and compute-intensive applications such as machine learning has driven the return of supercomputers and massive computer chips.

Appropriately based in the heart of Silicon Valley in Sunnyvale, California, hardware startup Cerebras Systems this week announced plans to launch Condor Galaxy, a network of nine interconnected supercomputers that promises to significantly reduce AI model training time.

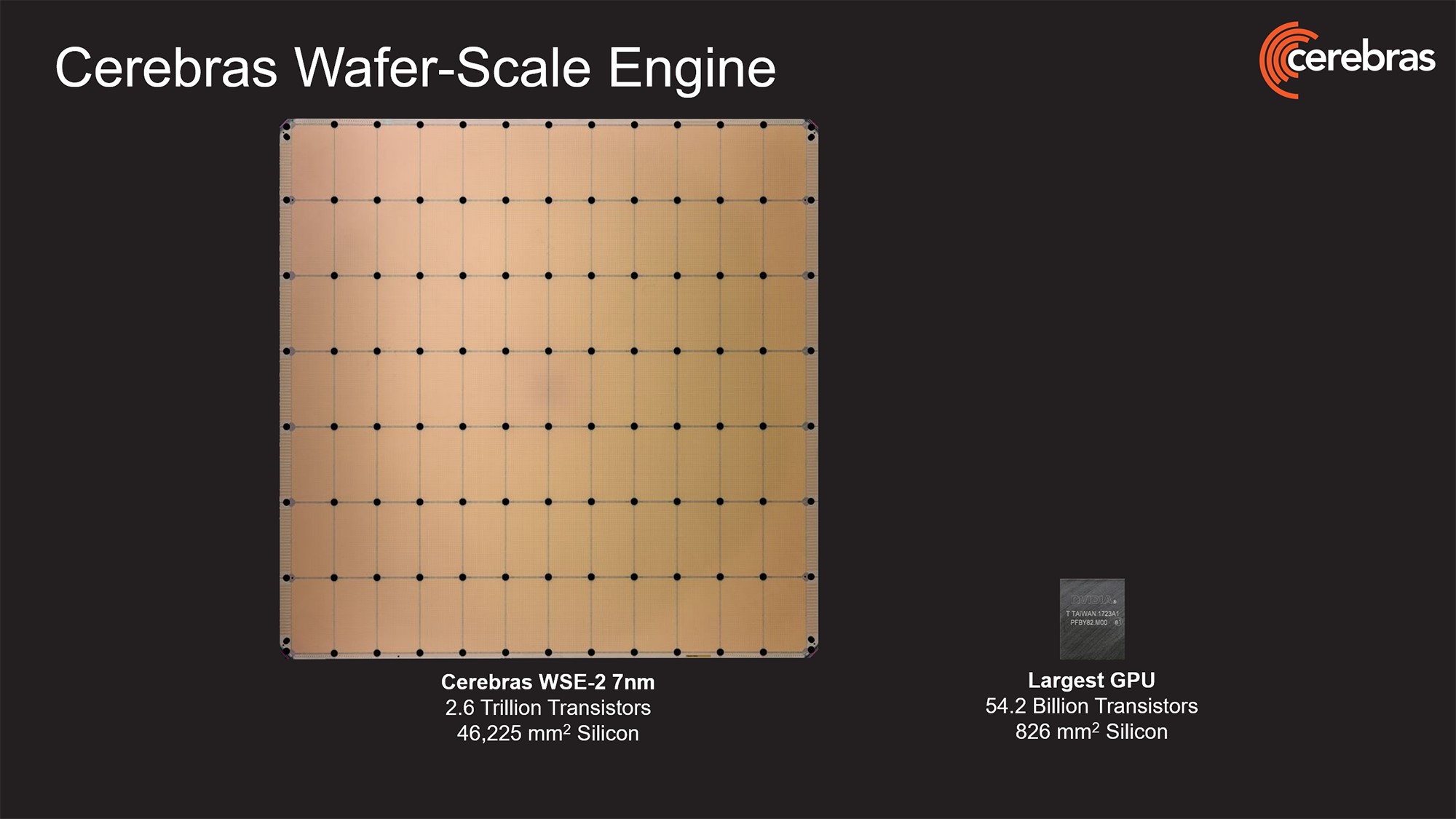

Founded in 2016 and boasting a team of 400, the company first caught the media’s attention four years ago with its dinner-plate-sized ‘wafer-scale’ processors, the latest of which powers its CS-2 systems. The larger size, over fifty times the area of a conventional chip, gives more room for compute cores and memory while speeding up communications.

Those chips form the basis of the Condor Galaxy supercomputer network, which will see Cerebras and G42, an Abu Dhabi-based AI technology group, deploy two more supercomputers in the US in early 2024, followed by seven more around the world.

With a planned capacity of 36 exaFLOPs in total, the two companies claim the network will revolutionise AI adoption with its “easy to use AI supercomputers”. Cerebras is one of a number of companies challenging graphics processor manufacturer Nvidia’s domination of the AI computing market.

Other companies looking to take on Nvidia and develop AI-optimised processors include Intel, IBM, AMD and Qualcomm, along with startups such as the UK’s Graphcore and Cerebras’ competitor SambaNova Systems, which has raised over US$1.1 billion since 2017. Graphcore and Cerebras have each raised over US$700 million over the past seven years.

Industry demand for AI processing power has seen Nvidia’s stock price soar 150 per cent over the past year, valuing the company at more than US$1.2 trillion and putting the chipmaker into the same big tech league as Apple, Microsoft, Alphabet, and Amazon.

Taking on Nvidia means building some extraordinary computing power. Each Cerebras supercomputer will link 64 CS-2 systems together for an AI training capacity of 4 exaFLOPs. Cerebras and G42 plan to offer a cloud service giving customers access to the supercomputers’ processing power without having to manage or distribute AI models over physical systems.

Each supercomputer is touted to offer standard support for models of up to 600 billion parameters, with extendable configurations supporting models of up to 100 trillion parameters. It packs 54 million AI-optimized compute cores and 388 terabits per second of fabric bandwidth, and is fed by 72,704 AMD EPYC processor cores.
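
For readers who want to check how the headline figures fit together, the following Python snippet is a rough back-of-the-envelope sketch. It uses only the numbers announced by Cerebras and G42 as quoted in this article; the per-system values are simple averages derived here, not official specifications, and the function names are purely illustrative.

```python
# Figures as announced by Cerebras and G42 and quoted in the article.
SUPERCOMPUTERS_PLANNED = 9            # nine interconnected supercomputers
EXAFLOPS_PER_SUPERCOMPUTER = 4        # AI training capacity of each one
SYSTEMS_PER_SUPERCOMPUTER = 64        # CS-2 systems linked in each one
CORES_PER_SUPERCOMPUTER = 54_000_000  # AI-optimized compute cores in each one


def network_exaflops() -> int:
    """Total planned capacity of the full network, in exaFLOPs."""
    return SUPERCOMPUTERS_PLANNED * EXAFLOPS_PER_SUPERCOMPUTER


def petaflops_per_system() -> float:
    """Average capacity per CS-2 system (derived, 1 exaFLOP = 1,000 petaFLOPs)."""
    return EXAFLOPS_PER_SUPERCOMPUTER * 1_000 / SYSTEMS_PER_SUPERCOMPUTER


def cores_per_system() -> float:
    """Average compute cores per CS-2 system (derived from rounded totals)."""
    return CORES_PER_SUPERCOMPUTER / SYSTEMS_PER_SUPERCOMPUTER


if __name__ == "__main__":
    print(f"Network total: {network_exaflops()} exaFLOPs")
    print(f"Per CS-2 system: {petaflops_per_system()} petaFLOPs")
    print(f"Cores per CS-2 system: {cores_per_system():,.0f}")
```

The network total matches the 36 exaFLOPs the companies quote. The per-system averages should be read as approximate, since the 54 million core count is itself a rounded figure.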

“Delivering 4 exaFLOPs of AI compute at FP 16, CG-1 dramatically reduces AI training timelines while eliminating the pain of distributed compute”, said Cerebras Systems CEO Andrew Feldman.

“Many cloud companies have announced massive GPU clusters that cost billions of dollars to build, but that are extremely difficult to use. Distributing a single model over thousands of tiny GPUs takes months of time from dozens of people with rare expertise. CG-1 eliminates this challenge. Setting up a generative AI model takes minutes, not months and can be done by a single person.

“CG-1 is the first of three 4 exaFLOP AI supercomputers to be deployed across the U.S. Over the next year, together with G42, we plan to expand this deployment and stand up a staggering 36 exaFLOPs of efficient, purpose-built AI compute.”

Talal Alkaissi, CEO of G42 Cloud, added: “Collaborating with Cerebras to rapidly deliver the world’s fastest AI training supercomputer and laying the foundation for interconnecting a constellation of these supercomputers across the world has been enormously exciting.”

Cerebras’ network is named after the Condor Galaxy, also known as NGC 6872, which stretches 522,000 light-years from tip to tip, making it about five times the size of the Milky Way. The galaxy is visible in the southern skies as part of the Pavo constellation and is 212 million light-years from Earth.