Social media giant Meta is experimenting with facial recognition technology to combat an onslaught of sophisticated ‘celeb-bait’ scams targeting its users on Facebook and Instagram.

Celeb-bait scams refer to when a cyber-criminal makes contact via email, direct messages or comments on social media posts while pretending to be a prominent celebrity figure.

Such scams typically see victims divulge personal details or pay for supposed autographs, concert tickets or even in-person meetups, with the scammer posing as a celebrity, most often an actor, musician, content creator or other public figure.

Backed by video and audio AI deepfake technology, celeb-bait scams have risen in prominence on Meta’s flagship social media platforms Facebook and Instagram.

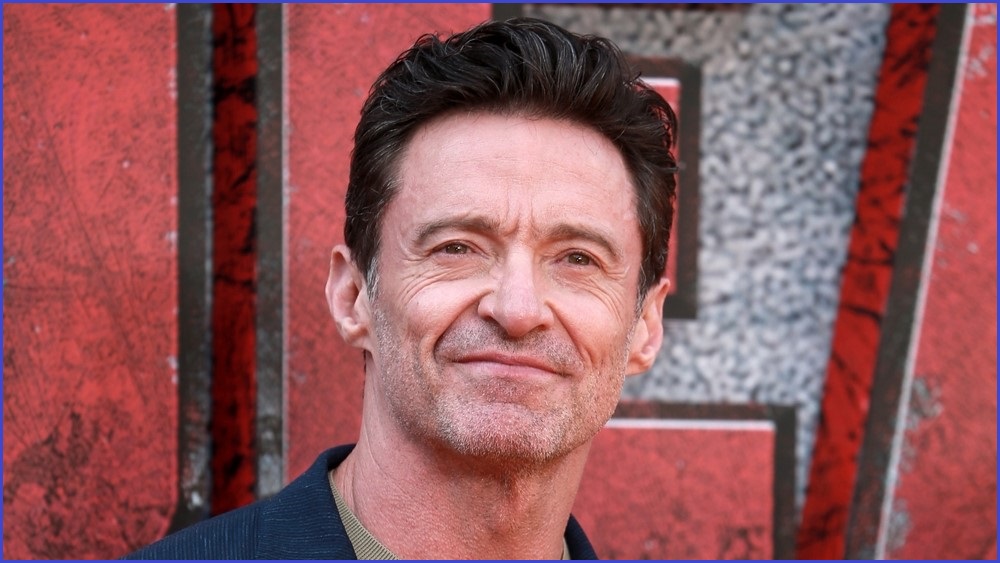

Notably, these scams are often served up via Meta’s on-platform ad services, where cyber-criminals have promoted bogus investment services and household products using the likeness of such cultural icons as Hugh Jackman and Taylor Swift.

While Meta hasn’t released much in the way of figures to demonstrate the scale of the issue, earlier this month the tech giant revealed that through a partnership with the Australian Financial Crimes Exchange, it was able to remove over 9,000 spam pages and more than 8,000 AI-generated celeb-bait scams across Facebook and Instagram in six months.

Upping the tech

On Monday, Meta further announced it will be upping its scam-detection processes to include facial recognition technology tailored specifically for celeb-bait scams.

“Scammers are relentless and continuously evolve their tactics to try to evade detection,” wrote Meta.

“We’re building on our existing defences by testing new ways to protect people and make it harder for scammers to deceive others.”

Meta noted the fake celebrity ads plaguing its platforms appear “designed to look real” and are “not always easy to detect”.

The company currently uses automated technology to operate its ad review system and filter scams from the “millions of ads” that run on its platforms every day.

Although Meta’s review system already checks for text, image and video indicators of potential scams and policy violations, droves of celeb-bait ads still manage to get through by virtue of mimicking legitimate content.

Meta says it will use facial recognition to prevent scams and improve account recoverability. Image: Meta / Supplied

To strengthen its existing review processes, Meta said it will test an approach where if its system notices a suspicious ad may contain the “image of a public figure”, it will attempt to use facial recognition technology to compare any faces in the ad with that person’s Facebook and Instagram profile pictures.

“If we confirm a match and determine the ad is a scam, we’ll block it,” wrote Meta.
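Meta has not published how its matching works, so the following is only a minimal sketch of the decision flow it describes. It assumes, hypothetically, that each face is reduced to a numeric embedding vector and compared by cosine similarity. The function names, the 0.8 threshold and the `is_scam` flag are all illustrative assumptions, not Meta’s system.

```python
import math

# Hypothetical similarity cut-off; Meta has not disclosed any such value.
MATCH_THRESHOLD = 0.8


def cosine_similarity(a, b):
    """Return the cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector cannot match anything
    return dot / (norm_a * norm_b)


def faces_match(ad_faces, profile_faces, threshold=MATCH_THRESHOLD):
    """True if any face in the ad resembles any profile-picture face."""
    return any(
        cosine_similarity(ad_face, profile_face) >= threshold
        for ad_face in ad_faces
        for profile_face in profile_faces
    )


def review_ad(ad_faces, profile_faces, is_scam):
    """Block only when a face match is confirmed AND the ad is judged a scam."""
    if faces_match(ad_faces, profile_faces) and is_scam:
        return "block"
    return "allow"
```

In this sketch a public figure with no profile pictures to compare against yields an empty `profile_faces` list, so no match can ever be confirmed and the ad is never blocked by this check.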

Notably, the tech giant didn’t posit any alternative sources for comparing images of public figures with deepfakes, suggesting scams could garner more success if they impersonate celebrities who don’t have a Facebook or Instagram account, such as Emma Stone, Brad Pitt, or George Clooney.

Blockbuster actress Scarlett Johansson, who also doesn’t have a public-facing Facebook or Instagram account, recently topped antivirus company McAfee’s “most exploited celebrity names” list, ranking as the US star whose name appears most frequently in online scams.

Meta said early testing with a “small group of celebrities and public figures” showed promising results, particularly when it came to increasing the “speed and efficacy” of its detection and enforcement processes.

The tech giant aims to include more public figures who’ve been impacted by celeb-bait scams in the coming weeks.

Celebrities will be notified of their inclusion in-app, while those who don’t wish to be included will need to opt out manually.

‘Selfie’ verification coming soon

Meta said it is also working to tackle scammers who directly impersonate public figures by creating imposter accounts.

As with celeb-bait scams, these fake celebrity accounts typically aim to extract money or trick people into engaging with further scam content.

Meta likewise intends to fight AI with AI on this scam vector by deploying its facial recognition technology against fake accounts.

Furthermore, Meta plans to roll out a “video selfies” feature which will apply facial recognition technology to its broader userbase for the purpose of identity verification and regaining access to compromised accounts.

The opt-in feature will see users upload a video selfie which can be used as a means of facial verification against an existing profile picture, similar to face ID features on mobile phones.

Notably, the verification measure is being developed as deepfake technology edges dangerously close to accomplishing real-time face-mapping capabilities.

“While we know hackers will keep trying to exploit account recovery tools, this verification method will ultimately be harder for hackers to abuse than traditional document-based identity verification,” said Meta.