The “rights, wellbeing and interests of people” should be put first when governments around Australia implement artificial intelligence technology, according to a national framework released on Friday.

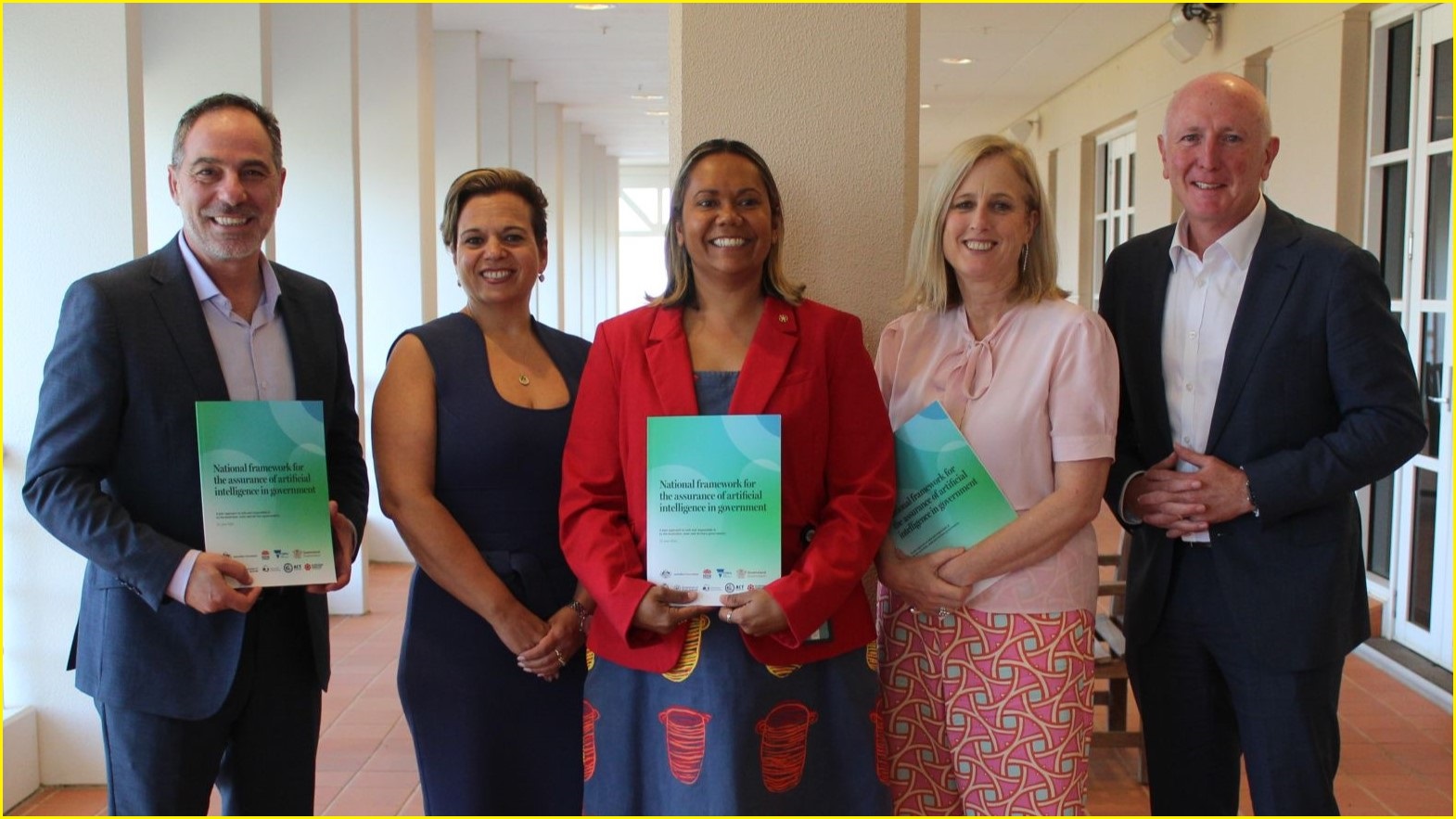

The framework was unveiled by state and territory ministers who gathered in Darwin to mark a nationally consistent approach to using AI.

Ministers agreed to the creation of the national framework in February, after the New South Wales government in 2022 became the world’s first to mandate an assurance framework for the use of AI systems.

Federal Minister for Finance and the Public Service, Katy Gallagher, said the national framework was a step toward building trust with Australians, which would be crucial to any implementation of AI in government services.

A 2023 report by the Department of Prime Minister and Cabinet found there was low public trust in uses of AI.

“Today we’ve agreed across all levels of government that the rights, wellbeing, and interests of people should be put first whenever a jurisdiction considers using AI in policy and service delivery,” Gallagher said.

“People should be able to go about their lives, anywhere across Australia without anticipating operational differences between governments’ use of AI.

“This national framework shows that when all governments can work together and share individual experiences and insights, we can get a great result for Australians and improve the way government services are provided to the community.”

Minister for Industry and Science, Ed Husic, said governments needed to use AI to carry out their work more efficiently, but establishing the public’s trust first was “crucial”.

Is there a ‘clear public benefit’?

The national AI framework calls for governments across Australia to consider “whether there is a clear public benefit” to any AI technology they plan to implement.

It says risks should be documented and governments should consider whether AI is preferable or if suitable non-AI alternatives already exist.

“Governments should assess the likely impacts of an AI use case on people, communities, societal and environmental wellbeing to determine if benefits outweigh risks and manage said impacts appropriately,” the framework states.

Governments are also instructed to make sure their AI systems appropriately uphold human rights, data privacy, workplace health and safety, diversity and anti-discrimination legislation.

The framework says governments “may” need to inform people when their data is being collected by an AI system or used to train one.

“Governments should assess whether the collection, use and disclosure of personal information is necessary, reasonable and proportionate for each AI use case,” the framework says.

“When an AI system significantly impacts a person, community, group or environment, there should be a timely process to allow people to challenge the use or outcomes of the AI system.”

The framework suggests governments should consider using privacy enhancements such as encryption and de-identified or synthetic data.

“Sensitive information should always be managed with caution,” the framework says, adding that access to systems and data “should be limited to authorised staff as required by their duties”.

The push for accountability

Governments across the country should also be prepared to immediately stop using an AI solution “when an unresolvable problem is identified”, the framework states.

“This could include a data breach, unauthorised access or system compromise.”

The framework asserts that individuals responsible for government AI systems should be “identifiable and accountable” for what those systems do, while governments should also avoid developing an overreliance on AI.

A Royal Commission into the Liberal-National Coalition’s Robodebt scheme found the scheme should serve as a warning of the potential pitfalls of advanced automation and AI.

The Albanese Labor government said the national AI framework was partly based on Australia’s AI Ethics Principles, which were developed by the CSIRO and the Department of Industry, Science and Resources.

While the framework defined “consistent expectations for oversight of AI”, it also allowed states and territories some flexibility to suit their needs, the government said.

The federal government is separately developing a “risk-based” plan to introduce mandatory safeguards for high-risk uses of AI, such as in healthcare, self-driving vehicles, or software used to predict the likelihood of a convicted criminal reoffending.

The federal government committed to an automation overhaul in the wake of the Robodebt scandal. Photo: Shutterstock

AI funding for healthcare, startups

The unveiling of the national AI framework came ahead of the federal government’s Monday announcement of almost $30 million towards applications of AI in the healthcare sector.

The government’s Medical Research Future Fund confirmed 10 grants to leading universities, including for research into multiple sclerosis, heart health and skin cancer.

Nearly $3 million was awarded to the University of Queensland to trial ways in which AI could use the world’s largest skin imaging database to help detect melanoma, even without people having to visit a GP.

The University of Melbourne also received nearly $3 million for its development of a personalised platform for diagnosing and preventing youth mental health issues.

Minister Husic said the government needed to make sure patients could trust that AI was safe to use.

“The guardrails we’re considering around development and use of AI means the community can have confidence these powerful technologies are being used safely and responsibly while delivering those benefits,” he said.

Tech industry associations were disappointed by a “negligible” investment in AI in May’s federal budget, with an average of $8 million per year provided over five years.

Three startup companies have also won funding from Australia’s AI Sprint competitive program — a collaboration between the CSIRO, Google Cloud, and innovation group Stone & Chalk — to help get their AI prototypes to market.

Dragonfly Thinking won $300,000 in research and development support for AI tools which aim to help with complex decision making.

Kindship won $100,000 for its AI personal assistant which helps people navigate the National Disability Insurance Scheme, while Empathetic AI was awarded $100,000 for its tax co-pilot named Luna.