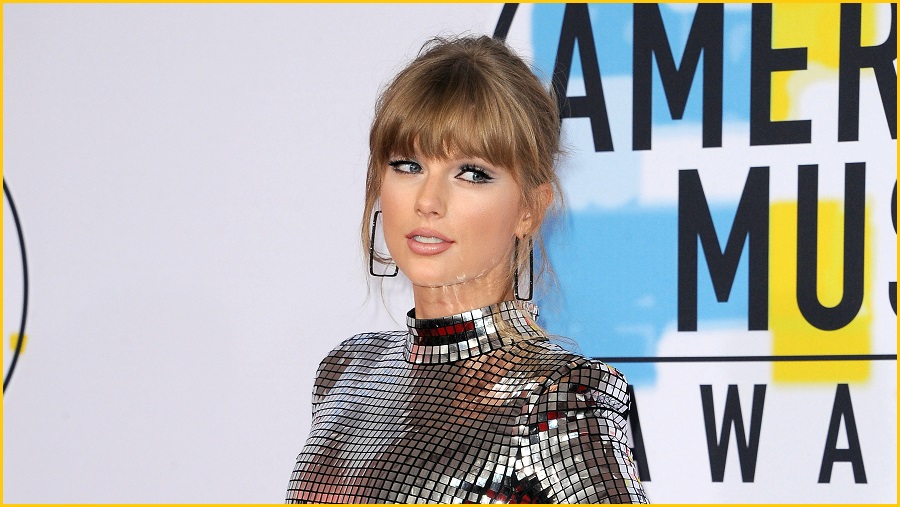

Searches for Taylor Swift have been blocked on the social media platform X after AI-generated pornographic images of the pop star went viral.

Non-consensual and sexually explicit images of Swift were circulated widely on X (formerly Twitter) and other platforms this week, with the New York Times reporting that one such image was viewed 47 million times before it was taken down.

The spread of the images has prompted calls for greater awareness of how AI-based tools are being weaponised against women and for stronger laws to crack down on the practice, and has raised fresh questions about the standard of content moderation at X.

Swift is the most prominent victim of deepfake pornography to date, and her case has drawn attention to how the technology has been used against other celebrities and everyday women.

As of Tuesday morning, users searching for Swift on X were shown no results and instead saw a message reading: “Something went wrong. Don’t fret - it’s not your fault”.

According to X, its teams are “actively removing all identified images and taking appropriate action against the accounts responsible for posting them”.

“We’re closely monitoring the situation to ensure that any further violations are immediately addressed, and the content is removed,” the company posted.

X head of business operations Joe Benarroch said blocking searches for Swift was a “temporary action”, taken with an “abundance of caution” to prioritise safety.

Researchers from the deepfake detection organisation Reality Defender said they had identified at least 24 unique AI-generated images of Swift this week. The group said the images were most likely created by “diffusion models”, which generate new images from written prompts supplied by users.

There are many readily available and free diffusion model tools online.

It’s unclear which platform was used to make the images. In a statement on Monday, Microsoft said it would look into whether its text-to-image tool had been used.

“[We are] continuing to investigate these images and have strengthened our existing safety systems to further prevent our services from being misused to help generate images like them,” the statement said.

Reality Defender also found that the images had been posted to other social networking platforms such as Facebook.

X has faced criticism over its content moderation practices since Elon Musk took over the company in October 2022.

Following the takeover, Musk gutted a number of moderation and public policy teams and overhauled the platform’s previous verification system.

The latest incident has reached all the way to the White House, with Press Secretary Karine Jean-Pierre calling it “alarming” and saying legislation may be needed to tackle the misuse of generative artificial intelligence on social media.

“We know that lax enforcement disproportionately impacts women and they also impact girls, sadly, who are the overwhelming targets,” Jean-Pierre said.

A number of US lawmakers are now pushing for greater protections against the use of AI for these purposes.

US Representative Yvette D Clarke is pushing for legislation that would require creators to include a digital watermark on any content created by AI.

“For years, women have been victims of non-consensual deepfakes, so what happened to Taylor Swift is more common than most people realise,” Clarke said.

“Generative AI is helping create better deepfakes at a fraction of the cost.”

US Representative Joe Morelle is also pushing a bill which would criminalise the sharing of deepfake pornography online.

It comes after Australia’s eSafety Commissioner warned last year that AI-generated child sexual abuse material and deepfakes were on the rise.

eSafety Commissioner Julie Inman Grant said that the agency had received reports of “sexually explicit content generated by students using this technology to bully other students”, reports of AI-generated child sexual abuse material and a “growing number of distressing and increasingly realistic deepfake porn reports”.

The eSafety Commissioner has already taken legal action against X for failing to adequately stop online hate.