Focusing too much on "perceived risks" from artificial intelligence such as the creation of sophisticated deepfakes could damage public trust in democracy, the Australian Electoral Commission (AEC) says as it prepares for the next federal election on 3 May.

While the commission monitored elections involving more than half of the world’s population in 2024 — including in the United States — it did not witness a substantial threat from increasing public access to powerful deepfake tools, it told Information Age.

AI technologies can replicate a person's image or voice to make it appear as if they said or did something which never actually occurred.

“While there have been some isolated examples of deepfakes being used in those [2024] elections, we are not aware of many cases in which a use of AI technology has genuinely fooled a voter,” an AEC spokesperson said.

“There is a risk that focusing on the perceived risks of AI tools to democracy could by itself damage public trust in democracy.

“We need to remember to keep things in perspective.”

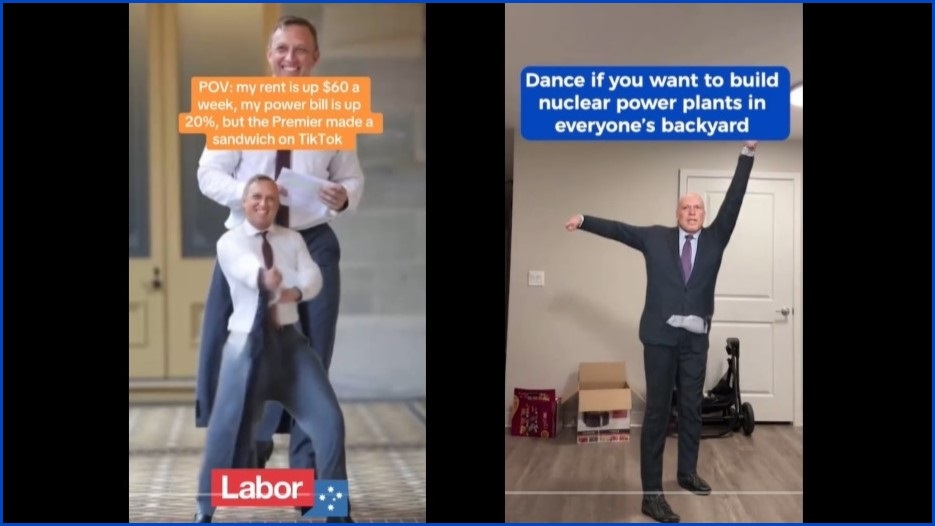

Both the Labor government and the Liberal-National Coalition have published AI deepfakes depicting their political opponents, but there have been very few examples, and they did not appear to have breached electoral laws or social media platform policies.

However, more problematic overseas examples saw AI used to replicate former US president Joe Biden’s voice in fake robocalls in 2024, while AI-manipulated political ads appeared on social media in India.

The AEC said it was taking a “realistic” approach to AI in Australia after it witnessed what it argued was “some similarity” between discourse over the rise of AI technologies and the increasing impact of social media platforms at previous federal elections.

“Then, as now, the AEC’s role was to take a platform-agnostic approach and ensure that the electoral laws were upheld across all mediums,” the spokesperson said.

“We’re taking a realistic approach to this [AI] technology.

“Rather than singling it out, we’re reminding all campaigners that electoral laws apply across the board, whether AI was used or not.”

Both Labor and the Opposition have shared AI deepfakes depicting their political rivals. Images: TikTok

Tech giants vow to protect the polls

Major technology companies and social media platforms have promised to use their resources to identify and label AI-generated political material, or remove it when it breached their guidelines.

John Galligan, Microsoft’s general manager of corporate and legal affairs in Australia, said while “there was widespread fear about how AI might be used to create deceptive content during election periods” at the start of 2024, the company agreed the reality was not as bad as some had predicted.

“While what we saw was not as pervasive as was initially feared, we shouldn’t assume it will be the same for Australia’s upcoming election and beyond, nor dismiss the threat,” he wrote last week.

“During the 2024 elections, we found that the most convincing and hardest to detect AI-generated content were voice deepfakes.”

Chinese-owned TikTok revealed to Information Age it had removed 99 accounts since 3 February which it believed had attempted to impersonate Australian politicians.

While those accounts had not necessarily shared AI-generated content or deepfakes, a spokesperson said the company was protecting the integrity of its platform.

The AEC created its own TikTok account in March and has partnered with the platform to point users searching for election-related keywords to educational content about voting.

“We also require all AI-generated content, or media that has been manipulated, to be labelled,” said the TikTok spokesperson, who added that all AI-generated content on the platform was moderated by either algorithms or humans.

A record-high 98 per cent of eligible Australians have enrolled to vote in this year’s federal election, the AEC said. Photo: AEC / Facebook

Cheryl Seeto, the head of Australian public policy for Meta, wrote last month that the social media giant’s third-party fact-checking program would continue through the federal election, despite Meta’s decision to ditch the program, initially in the US.

Meta, which operates Facebook, Instagram, Threads, and WhatsApp, said it would continue its work with Agence France-Presse (AFP) and Australian Associated Press (AAP) on the program.

“AI-generated content is also eligible to be reviewed and rated by our independent fact-checking partners,” Seeto said.

“… When content is debunked by these fact-checkers, we attach warning labels to the content and reduce its distribution in [Facebook’s] Feed and [Instagram’s] Explore so it is less likely to be seen.”

Google Australia’s director of government affairs and public policy, Stef Lovett, wrote last week that the company’s ad policies prohibited “the use of manipulated media to mislead people, such as deepfakes or doctored content”.

The AEC said it worked “closely and collaboratively” with technology companies such as Microsoft, Meta, Google, and TikTok.

John Galligan from Microsoft argued Australian citizens needed “a healthy level of scepticism towards the content they are consuming online”, and tech companies, governments, and media organisations should “collectively help build media literacy”.

Political deepfakes not explicitly banned

While politicians such as Greens senator David Shoebridge and independent senator David Pocock have raised concerns about possible harms from deepfakes created by political parties or malicious foreign actors, the AEC said it was “important to remember that deepfakes are not explicitly banned”.

“While the technology behind deepfakes is new, it doesn’t change the basic requirements set by electoral law,” an AEC spokesperson said.

In most cases, electoral communications needed to include an authorisation message confirming who created them, they said.

Such messaging was also not allowed to “mislead or deceive about the act of casting a vote”, they added.

The AEC said it had expanded its Stop and Consider digital literacy campaign “to help voters consider the ways that AI could be used at the election”.

In that campaign, the commission told the public that AI-generated material could “create serious problems for democracy and can undermine trust both in our own choices as well as the electoral system”.

The AEC has previously stated it has neither the resources nor the powers needed to monitor or regulate political deepfakes.