Google is challenging South Korea’s Lee Sedol, the “Roger Federer of Go”, to test the limits of AlphaGo, a new artificially intelligent machine it has built.

The internet giant’s DeepMind subsidiary revealed the existence of AlphaGo this week via research published in the prestigious journal Nature.

The research – and a subsequently released video – showed the machine beat “three-time European Go champion Fan Hui … by 5 games to 0” in a closed-door tournament late last year.

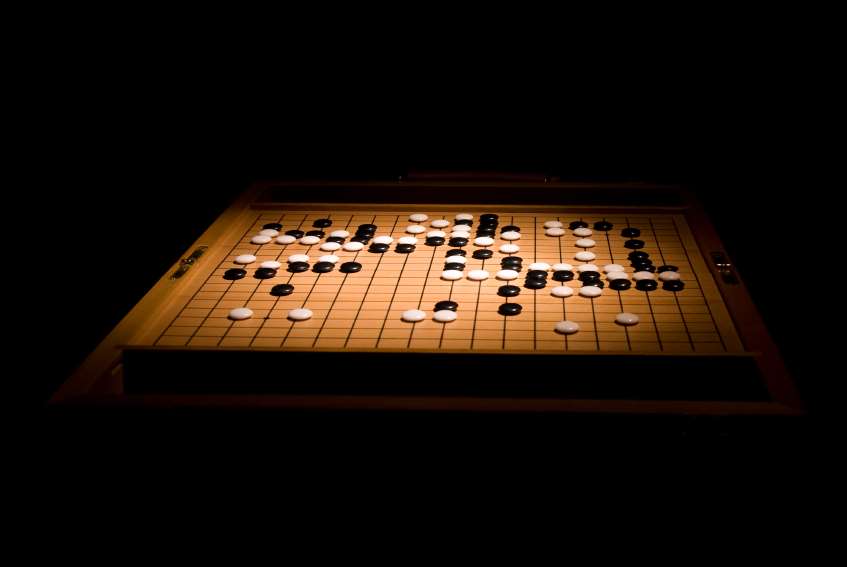

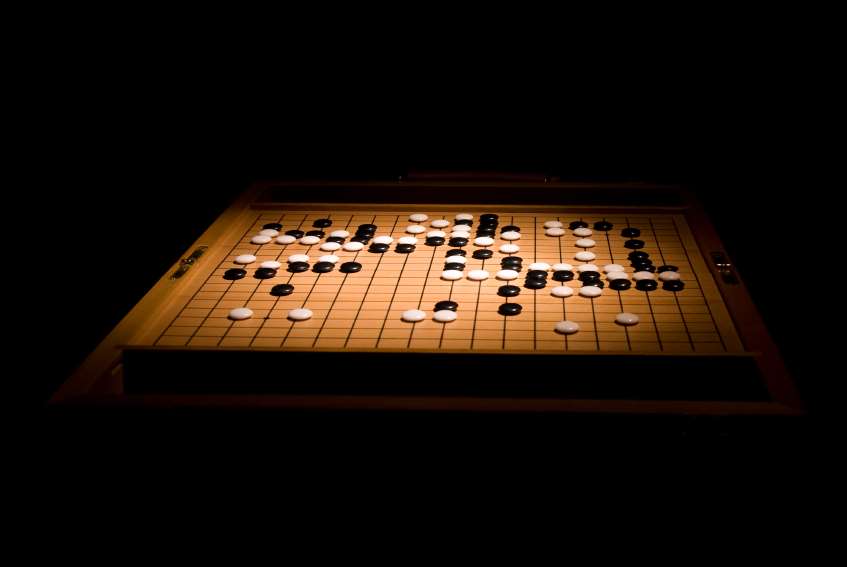

Though computers have already cracked other games by beating elite players, including chess and Jeopardy, the Chinese game of Go had remained elusive.

One reason for that, according to DeepMind’s CEO Demis Hassabis, is the complexity of Go compared to other games.

“In chess the number of possible moves is about 20 for the average position. In Go it’s about 200,” Hassabis said.

“Another way of viewing the complexity of Go is that the number of possible configurations of the board is more than the number of atoms in the universe.”
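To see why roughly 200 moves per position matters so much more than roughly 20, consider how the numbers compound as a program looks further ahead. The sketch below is a back-of-the-envelope calculation only: it assumes every position offers exactly the same number of moves (the 20 and 200 figures Hassabis quotes), which real games do not, and the `positions_to_search` helper is a hypothetical name used for illustration.

```python
def positions_to_search(branching_factor: int, depth: int) -> int:
    """Number of end positions in a full game tree of the given depth,
    assuming (simplistically) a fixed number of moves per position."""
    return branching_factor ** depth


# Compare a chess-like tree (about 20 moves per position) with a
# Go-like tree (about 200 moves per position) at a few search depths.
for depth in (2, 4, 6):
    chess = positions_to_search(20, depth)
    go = positions_to_search(200, depth)
    print(f"{depth} moves ahead: chess ~{chess:,}, Go ~{go:,}, ratio {go // chess:,}x")
```

Looking just four moves ahead gives about 160,000 positions for the chess-like tree and about 1.6 billion for the Go-like one, and the gap grows tenfold with every additional move of lookahead.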

But the challenge isn’t just the sheer number of combinations a computer-based opponent would have to process.

“If you ask a great Go player why they played a particular move, sometimes they’ll tell you it just felt right,” Hassabis said.

“So one way you could think of it is Go is a much more intuitive game, whereas chess is a much more logic-based game.”

The fact that Google managed to get a computer to defeat a professional Go player for the first time, without a handicap, is likely to have come as a surprise to other researchers in the field.

In December last year, Wired revealed Facebook researchers were trying to use neural networks to give a computer the edge against human opponents.

At the time, Rémi Coulom, creator of a program that has won handicapped matches against professional Go players, praised Facebook’s effort as “very spectacular”. Coulom believed it was “unlikely Google could so quickly produce something that can beat the top Go players”.

Hassabis revealed in a blog post that AlphaGo can predict a human expert’s next move 57 percent of the time, well ahead of the previous research record of 44 percent.

He said AlphaGo is a hybrid of a heuristic search algorithm and “deep neural networks”.

“These neural networks take a description of the Go board as an input and process it through 12 different network layers containing millions of neuron-like connections,” Hassabis said.

“One neural network, the ‘policy network’, selects the next move to play. The other neural network, the ‘value network’, predicts the winner of the game.”
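The division of labour Hassabis describes, with one component suggesting moves and another judging who is ahead, can be illustrated with a heavily simplified sketch. This is not DeepMind’s code: AlphaGo pairs trained deep neural networks with Monte Carlo tree search, whereas the example below swaps in a plain depth-limited negamax search, a toy game (players take one to three stones from a pile, and whoever takes the last stone wins), and hand-written heuristics. The functions `policy`, `value`, `search` and `choose_move` are hypothetical stand-ins chosen for illustration.

```python
from typing import Dict, List

# Toy game standing in for Go (illustrative assumption): players alternately
# remove 1-3 stones from a pile; whoever takes the last stone wins.


def legal_moves(pile: int) -> List[int]:
    return [n for n in (1, 2, 3) if n <= pile]


def policy(pile: int) -> Dict[int, float]:
    """Stand-in for a 'policy network': a probability for each legal move.
    This hand-written heuristic favours moves that leave a multiple of 4."""
    scores = {m: (3.0 if (pile - m) % 4 == 0 else 1.0) for m in legal_moves(pile)}
    total = sum(scores.values())
    return {m: s / total for m, s in scores.items()}


def value(pile: int) -> float:
    """Stand-in for a 'value network': estimated result for the player to
    move, from -1 (loss) to +1 (win), without playing the game out."""
    if pile == 0:
        return -1.0  # the opponent has just taken the last stone
    return -0.8 if pile % 4 == 0 else 0.8


def top_moves(pile: int, top_k: int) -> List[int]:
    """Keep only the policy's top_k most probable moves (narrows the search)."""
    probs = policy(pile)
    return sorted(probs, key=probs.get, reverse=True)[:top_k]


def search(pile: int, depth: int, top_k: int = 2) -> float:
    """Depth-limited negamax: expand only the policy's favoured moves and
    fall back on the value estimate at the search horizon."""
    if depth == 0 or pile == 0:
        return value(pile)
    return max(-search(pile - m, depth - 1, top_k) for m in top_moves(pile, top_k))


def choose_move(pile: int, depth: int = 3, top_k: int = 2) -> int:
    """Pick the candidate move whose resulting position scores best for us."""
    return max(top_moves(pile, top_k), key=lambda m: -search(pile - m, depth - 1, top_k))


print(choose_move(10))  # takes 2 stones, leaving the opponent a multiple of 4
```

With ten stones on the pile, `choose_move(10)` returns 2, leaving the opponent facing eight stones, the known losing position in this toy game. The design point carries over, loosely, to the article’s description: the policy limits how many moves are considered at each step, and the value estimate means the search does not have to play every line out to the end of the game.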

Hassabis said that the neural networks in AlphaGo were trained “on 30 million moves from games played by human experts” to improve the machine’s accuracy.

“But our goal is to beat the best human players, not just mimic them,” he said.

“To do this, AlphaGo learned to discover new strategies for itself, by playing thousands of games between its neural networks, and adjusting the connections using a trial-and-error process known as reinforcement learning.

“Of course, all of this requires a huge amount of computing power, so we made extensive use of Google Cloud Platform.”

The next challenge for AlphaGo comes in March when it takes on Lee Sedol in Seoul.

For Hassabis, AlphaGo’s achievements so far are already a victory, but still only a stepping stone to the big prize.

“We’re very excited but it’s just one rung on the ladder to solve artificial intelligence.”