Maker of the world’s first smart contact lens, Mojo Vision, has raised another $78 million ($US51 million) from investors.

The company made headlines earlier this year when it began demonstrating its prototypes of augmented reality contact lenses to journalists and investors.

CEO and co-founder, Drew Perkins, said the extra media attention “generated increased excitement and momentum around the potential of Mojo Lens”.

“This new round of funding brings more support and capital from strategic investors and companies to help us continue our breakthrough technology development.

“It gets us closer to bringing the benefits of Mojo Lens to people with vision impairments, to enterprises and eventually, consumers.”

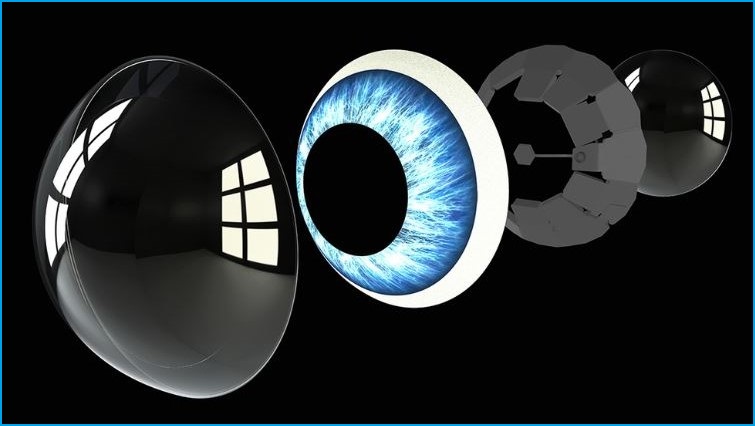

The Mojo Lens takes some of the functionality of a standard smartwatch – like heart rate data, time, and messages – and displays it directly on your eyeball.

Future iterations of the device will supposedly allow the lens to trace over objects in real time, highlighting them for people with impaired vision.

Former Sun Microsystems CTO, Greg Papadopoulos – a new member of Mojo Vision’s board – called the lens a “tremendously exciting opportunity”.

“The potential applications of this innovative technology could fundamentally change the way we work and view our world as well as improve the quality of life for people with vision impairment,” Papadopoulos said.

We're excited to announce that we raised over $51M in a Series B-1 investment round. Our journey to build the 1st true smart contact lens, Mojo Lens, is now funded by $159M+ in capital. Thanks visionary investors! Welcome new partners! https://t.co/zFmFRAReI3 #techinvestments pic.twitter.com/S7qXmkc9ry

— MojoVision (@MojoVisionInc) April 29, 2020

From your pocket to your eyes

Since Google Glass was first released in 2013 – before its consumer version was canned in 2015 – tech companies have been scrambling for ways to bring computing power directly to people’s eyeballs.

Microsoft released the second iteration of its HoloLens headset late last year, and Apple is heavily rumoured to be releasing its own smart glasses within the next three years.

Mojo Vision, however, is looking to bypass the bulky headsets that make existing augmented reality devices uncomfortable.

But for all Mojo promises, the company still has its skeptics, such as Virtual Method’s David Francis, who told Information Age that Mojo needs exceptional new technology to deliver on its claims.

“AR needs a camera – unless it is tapping into your neural-visual cortex – to do its depth perception and to create visual understanding and real-world anchoring,” Francis said.

“So unless you can put a camera on the lens, stream massive amounts of data to it and have an insanely powerful chip in it that is refreshing a micro-lens array in nano-seconds to match the twitching of an eye and keep an augmented scene relative to your 12 o'clock, we’re a long way off.”