A team of Google researchers has built an AI that can generate eerily-realistic images from text prompts, once again raising questions about the ethical nature of developing AI that can interfere with our shared reality.

The AI is called Imagen and the pictures its creators have chosen to show the world are surreal and stunning.

These systems stand out through their ability to parse bizarre natural language prompts and create coherent, instantly recognisable images.

Some of the pictures chosen to demonstrate Imagen use simple prompts where features of the subject are swapped out like in the prompt, ‘a giant cobra snake on a farm. The snake is made out of corn’.

Imagen's version of a snake made of corn. Image: Google

Others are more contextually complicated, as with the prompt, ‘An art gallery displaying Monet paintings. The art gallery is flooded. Robots are going around the art gallery using paddle boards’.

Robots enjoying a gallery showing. Image: Google

Imagen is the latest in an image generating arms race going on between AI companies following OpenAI which last month revealed DALL-E 2 and began providing limited access to it for public testing.

According to an accompanying research paper about Imagen, Google’s AI is simpler to train and its images’ resolution can be scaled up easier than its competitors.

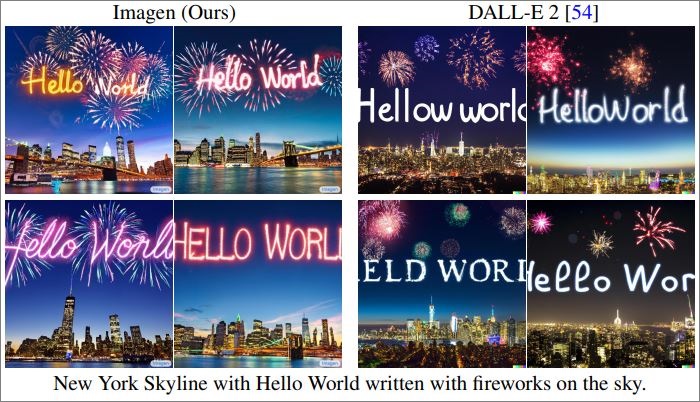

In some cases, Imagen also demonstrates a greater understanding of detail than DALL-E 2, especially when building from prompts that include embedded text.

Imagen performs well when including text in pictures. Image: Google

Unlike OpenAI’s DALL-E 2 – which is onboarding 1,000 users per week onto its demo platform – Google has no plans to allow for public testing of Imagen.

As the researchers explained, while generative AI has the potential to “complement, extend, and augment human creativity”, it can also be used maliciously for harrassment and the spread of misinformation.

Part of the problem with generative images is that training data tends to be scraped from the open web.

This results in issues around consent for individuals who might appear in the dataset, but also significant concerns about the data containing stereotypes and “oppressive viewpoints”.

“Training text-to-image models on this data risks reproducing these associations and causing significant representational harm that would disproportionately impact individuals and communities already experiencing marginalisation, discrimination and exclusion within society,” the Google researchers wrote.

“We strongly caution against the use text-to-image generation methods for any user-facing tools without close care and attention to the contents of the training dataset.”

Generated images of people are especially problematic, the Imagen creators found, and featured demonstrated biases “towards generating images of people with lighter skin tones” while also tending to reinforce gender stereotypes around professions.

OpenAI is similarly aware of these problems and has built in a filter for the public use of DALL-E 2 designed to stop certain content from being created, such as violent or sexual images, and those that may be related to conspiracy theories or political campaigns.

In a recent blog post, OpenAI said 0.05 per cent of user-generated images have been automatically flagged with less than a third of them being confirmed by human reviewers.

While it is encouraging users to flood social media with AI-created images, OpenAI has urged early users of DALL-E 2 “not to share photorealistic generations that include faces”.