Lurking behind last week’s open letter calling for a six-month pause on artificial intelligence (AI) development is ‘long-termism’, an ideology whose proponents value the lives of hypothetical humans living thousands of years in the future more than the lives of people alive today.

Long-termists believe that a post-human species is destined to spread across the galaxy, building megastructures around stars to house an unfathomable number of people living in endless utopian bliss within simulated realities.

These people would be so numerous (10²³ in the Virgo Supercluster alone) and live in such vast happiness that their potential existence carries immense moral weight for long-termists.

Any barrier to this future, proponents of this ideology believe, needs to be overcome; any potential threat to its reaching fruition must be eliminated.

Because their belief has an inherent technological determinism – meaning they think this type of life among the stars is inevitable given enough time – long-termists are deeply concerned with what they call ‘existential threats’ to humanity that would stop it from happening.

The hypothetical happiness of trillions of humans, therefore, justifies any means to get there.

Philosopher and long-termist figurehead Nick Bostrom, who in January apologised for openly racist remarks he had made in the past, has advocated a global surveillance network and the use of “preventive policing” to mitigate the risk of, for example, terrorist attacks that trigger a nuclear apocalypse.

In a 2019 article called ‘The Vulnerable World Hypothesis’, Bostrom quite literally describes a “high-tech panopticon” in which everyone wears an unremovable “freedom tag” fitted with cameras and microphones that constantly stream data back to an AI for review.

If the AI detects any “suspicious activity” it alerts a “freedom officer” who decides if escalation – like a raid or arrest – is needed.

He also considers whether “modifying the distribution of preferences” among people in a society through a process of synthetic homogenisation would be a way of “achieving civilisational stabilisation”.

The world Bostrom describes is, in no uncertain terms, a dystopian nightmare.

Yet for him invasive surveillance, strict limits on our autonomy, and the reduction of social plurality are means by which we can “[max] out our technological potential”; anything to reduce ‘existential risk’. The ends justify the means.

An existential risk

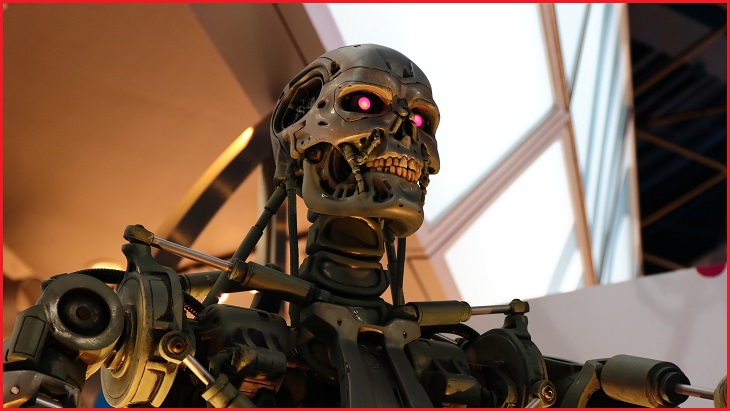

Long-termists like Bostrom see a rogue, out-of-control AI system as one of the greatest ‘existential risks’.

The fear of a runaway AI is, like much of the long-termist ideology, deeply rooted in science fiction, and it overlooks the very human developers and users of these systems.

Last week, long-termist organisation the Future of Life Institute published an open letter which was signed by thousands of business leaders, academics, and computer scientists.

The letter rightly calls for better scrutiny and regulation of AI to make the systems “more accurate, safe, interpretable, transparent, robust”. But it also veers into anthropomorphism, calling for AI to be “aligned, trustworthy, and loyal”.

The letter laments the lack of planning and control around how companies are building “powerful digital minds” that are “human-competitive at general tasks”. It describes these systems – which are, it’s worth remembering, networked computers – as if they will become something that “no one – not even their creators – can understand, predict, or reliably control”.

Responding to the Future of Life Institute’s open letter, the co-authors of a seminal paper describing large language models as ‘stochastic parrots’ warned that the letter misrepresents real AI risk.

“It is indeed time to act: but the focus of our concern should not be imaginary ‘powerful digital minds’,” they said.

“Instead we should focus on the very real and very present exploitative practices of the companies claiming to build them, who are rapidly centralising power and increasing social inequities.”

These prominent AI researchers are women who have for years spoken out against “fearmongering and AI hype”, which not only fattens the pockets of powerful global technology companies but also spreads the long-termist ideology.

“We do not agree that our role is to adjust to the priorities of a few privileged individuals and what they decide to build and proliferate,” they said.

“We should be building machines that work for us, instead of ‘adapting’ society to be machine readable and writable.”

Re-conceiving AI

Researcher and Australian Computer Society (ACS) Fellow Roger Clarke recently published an article proposing a “re-conception” of AI away from the notion of achieving human-like super-intelligence and toward a model of “augmented intelligence”.

What Clarke calls “New-AI” reframes the technology so that it best serves human needs. He warns that “sci-fi-originated and somewhat meta-physical, even mystical, ideas deflect attention away from the key issues”.

In his view, the focus must remain on computer systems as tools that serve a purpose – in particular, to extend or augment our intelligence and capability.

“For real-world applications, the notion of humanlike or human-equivalent intelligence is a dangerous distraction,” he wrote.

“The focus must be on artefacts as tools, and on the delivery of purpose-designed artefact behaviour that dovetails with human behaviour to serve real-world objectives.”

In the same article, Clarke outlines a set of “readily-foreseen negative impacts of AI”, including how black-box automation embedded in organisations can make unfair decisions difficult to review and leave organisations unable to explain how they reached those decisions.

Here in Australia, we need look no further than the Robodebt scandal to see these points play out in crude algorithmic decision-making.

The Department of Human Services’ income averaging scheme was a deeply flawed and illegal method of raising debts against welfare recipients: it simply divided a year’s worth of income by 26 (the number of fortnights in a year) and compared the result with the income recipients had declared each fortnight.
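To see why the method was so crude, here is a minimal, hypothetical Python sketch of the averaging arithmetic alone. The function names and dollar figures are invented for illustration and do not come from the department’s actual system, and the benefit rates and income tests that turned a discrepancy into a debt are left out.

```python
# Hypothetical sketch of income averaging only; names and figures are
# illustrative assumptions, not the department's real implementation.
# Benefit rates and income tests are deliberately omitted.

FORTNIGHTS_PER_YEAR = 26


def averaged_fortnightly_income(annual_income: float) -> float:
    """Spread a year's income evenly across every fortnight."""
    return annual_income / FORTNIGHTS_PER_YEAR


def apparent_discrepancies(declared: list[float], annual_income: float) -> dict[int, float]:
    """Map each fortnight index to the amount by which the averaged income
    exceeds what was declared (fortnights with no excess are skipped)."""
    average = averaged_fortnightly_income(annual_income)
    return {
        i: average - amount
        for i, amount in enumerate(declared)
        if average > amount
    }


# Hypothetical person: 13 fortnights of work at $2,000, then 13 fortnights
# unemployed on benefits, truthfully declaring $0 income.
declared = [2000.0] * 13 + [0.0] * 13
annual_income = sum(declared)  # 26,000: exactly what the person earned

gaps = apparent_discrepancies(declared, annual_income)
print(len(gaps), sum(gaps.values()))  # 13 13000.0
```

Although this person declared every dollar honestly, averaging smears their working-period income across the fortnights they were unemployed, so each of those 13 fortnights appears to hide $1,000 of undeclared income.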

It was about as far from intelligence as you can imagine.

The system’s scale and bureaucratic invulnerability caused real-world harm – in some cases linked to tragic suicides – not because of the emergent properties of a “powerful digital mind”, but because of an all-too-human failure to limit that harm, for the sake of political, personal, and professional gain.

The real problems with advanced AI are, as ever, more human than machine.