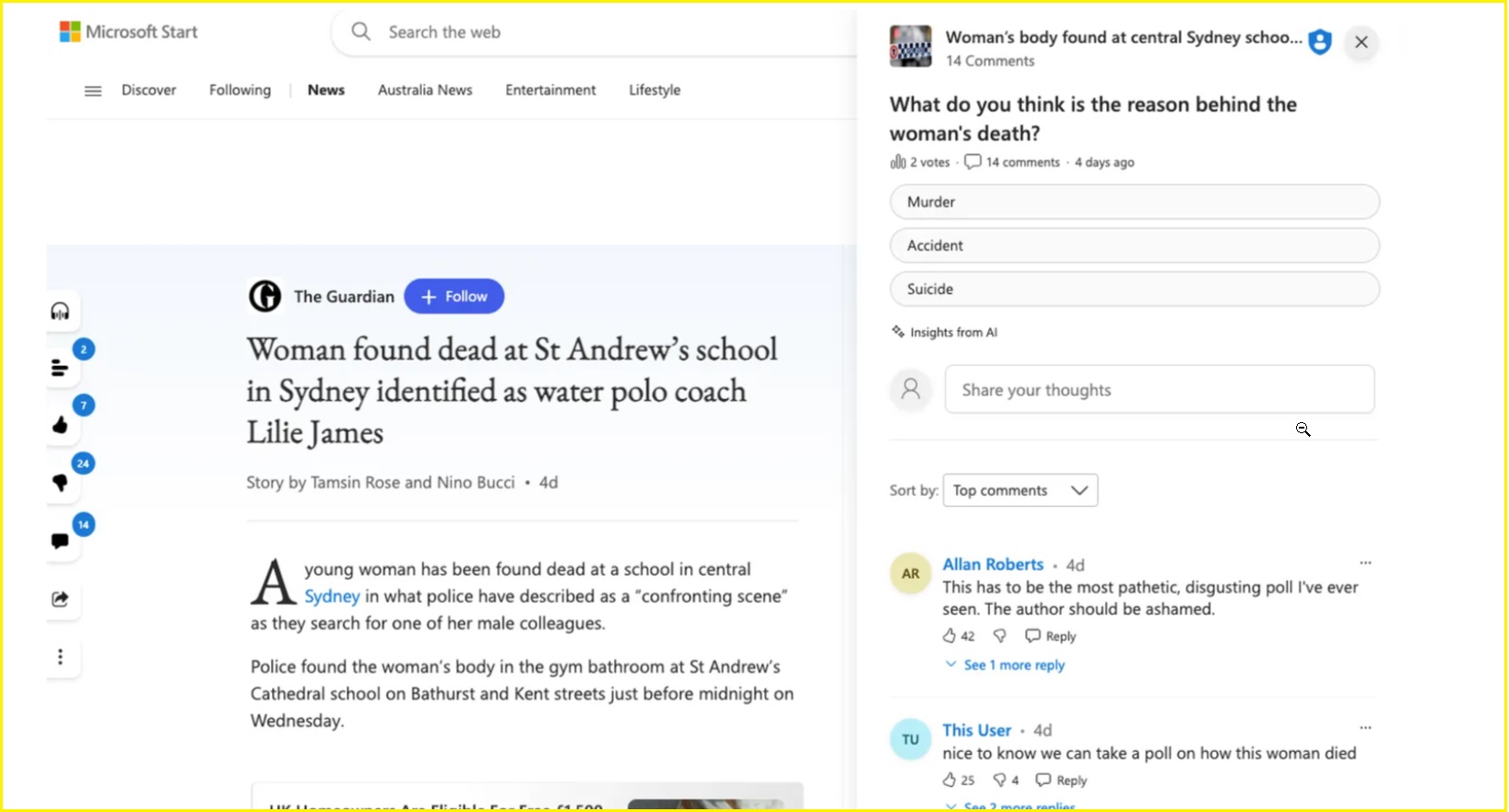

Microsoft’s generative artificial intelligence tool inserted a “deeply concerning” poll into a Guardian news story asking readers to speculate on the cause of a woman’s death.

Tech giant Microsoft holds a licence from The Guardian to republish the newspaper's stories on Microsoft Start, its news aggregator website and app.

Last week a Guardian news story on the death of school water polo instructor Lilie James in Sydney was included on Microsoft Start along with an AI-generated poll asking: “What do you think is the reason behind the woman’s death?”

Readers were given three potential answers to the poll: murder, accident or suicide.

Although the poll was generated entirely by Microsoft's tools, not by The Guardian or its journalists, several readers commenting on the story did not make this distinction, and some called for the writer to be fired.

Guardian Media Group chief executive Anna Bateson wrote to Microsoft President Brad Smith about the incident, saying the organisation had previously warned Microsoft about the risks of applying generative AI to its news stories.

The poll was widely criticised. Photo: Supplied

“This application of genAI by Microsoft is exactly the sort of instance that we have warned about in relation to news, and a key reason why we have previously requested to your teams that we do not want Microsoft’s experimental genAI technologies applied to journalism licensed from The Guardian,” Bateson said in the letter.

“Not only is this sort of application potentially distressing for the family of the individual who is the subject of the story, it is also deeply damaging to the Guardian’s hard-won reputation for trusted, sensitive journalism, and to the reputation of the individual journalists who wrote the original story.”

The Guardian has sought assurances that Microsoft will not apply any experimental AI tools to Guardian content, and that it will make clear when AI has been used to create additional units and features.

“There is an almost complete absence of clear or transparent labelling of these genAI powered outputs, and certainly no disclaimer or explanation to users that these technologies are owned and operated by Microsoft, and to the inherent unreliability of them,” Bateson said.

“This has to change.”

In response, Microsoft took down the poll and launched an investigation into how it came to appear alongside the story.

“We have deactivated Microsoft-generated polls for all news articles and we are investigating the cause of the inappropriate content,” a Microsoft spokesperson said.

“A poll should not have appeared alongside an article of this nature, and we are taking steps to help prevent this kind of error from reoccurring in the future.”

The incident comes more than three years after Microsoft laid off dozens of journalists and editorial staff at Microsoft News and MSN and replaced them with artificial intelligence tools that select the news and content presented on those platforms.

It’s not the first time these tools have gone wrong.

In August, an apparently AI-generated travel article recommended visiting a food bank in Ottawa, Canada, "on an empty stomach".

Microsoft later claimed this story was generated “through a combination of algorithmic techniques with human review”.

The Guardian has also called for a wider discussion on the use of news content to train generative AI tools.

The issue of copyright and generative AI is already a messy and complicated one.

The New York Times is also reportedly considering legal action against OpenAI, the company behind ChatGPT, over the use of its articles to train the company's AI models, amid concerns that the chatbot could become a direct competitor.

The news organisation has also updated its terms of service to stop AI companies using its content to train their models.