As tech giants continue to integrate artificial intelligence into search engines, Australia’s eSafety Commissioner has announced a new industry code that will mandate the removal of AI-generated child abuse images from search results.

Last week, the eSafety Commissioner released a decision to register the Internet Search Engine Services Online Safety Code (the SES Code) – a new industry code designed to address, minimise and prevent harms associated with harmful forms of online material accessed through search engines.

The SES Code applies to providers of internet search engine services (SES providers) that serve Australian end-users – including Google, Yahoo, DuckDuckGo and Microsoft’s Bing – requiring them to effectively block harmful materials such as “child sexual exploitation” and “pro-terror” content from appearing in searches.

“These systemic tools are intended to shift the responsibility for #safetybydesign back on the companies themselves, before they are extricated out to the wild without proper guardrails in place,” said eSafety Commissioner Julie Inman Grant.

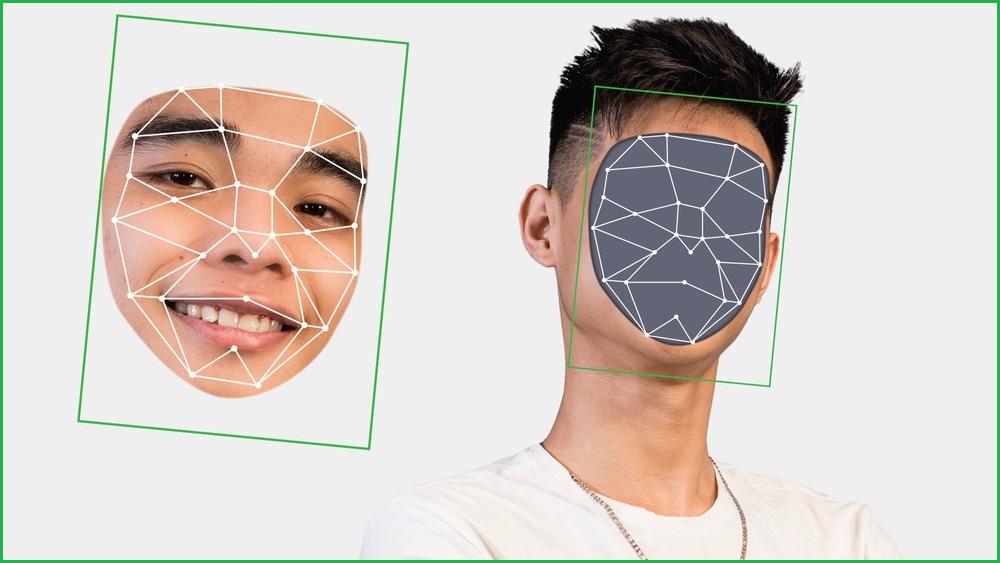

In addition to harmful materials in search results, the code takes aim at “synthetically generated” material such as deepfakes – with the eSafety Commissioner citing particular concern over developments in generative AI.

“We are seeing ‘synthetic’ child abuse material come through,” she said.

“Terror organisations are using generative AI to create propaganda. It’s already happening. It’s not a fanciful thing. We felt it needed to be covered.”

The code covers material identified under two separate classes:

● class 1A material, which includes child sexual exploitation material, pro-terror material, and extreme crime and violence material; and

● class 1B material, which comprises crime and violence material and drug-related material.

The code was developed over the past 20 months alongside six industry associations. Its previous version covered only material returned by search engine queries, not material that search engines can themselves generate.

As Microsoft and Google move to integrate generative AI products – including ChatGPT and Bard – into their mainstay search engines, Grant decided the code needed to address generative AI and its associated risks of harm.

“eSafety reserved its decision to register an earlier version of the Search Code in June after Microsoft and Google announced they would incorporate generative AI functionality into their internet search engine services,” said Grant.

Now, a revised version of the SES Code directly addresses AI-generated content, from synthetic materials through to long-form answers and summaries that contain child sexual abuse material.

The code also requires SES providers to respond to reports and delist requests from end-users – effectively helping users to scrub harmful content from results – and demands providers “promptly notify law enforcement or appropriate non-government organisations” about potentially threatening child sexual exploitation materials on their services.

Furthermore, the code requires SES providers to “make clear” when users are interacting with any features using artificial intelligence, and to conduct further research into technologies that help users detect and identify deepfake images accessible from their services.

“We believe these are the first regulatory tools of their kind to capture AI-generated illegal content through search,” said Grant.

The drafting of the Search Engine Services code was led by Jennifer Duxbury, director of policy, regulatory affairs and research at digital industry association Digital Industry Group Inc.

Duxbury described generative AI as an “early-stage” and “rapidly developing” technology that warrants appropriate safety principles alongside its growth.

“To harness its full social and economic benefits, it’s essential that its development and deployment is underpinned by appropriate safety principles, which we’ve reflected in this code for internet search engines,” said Duxbury.

“One of the unique advantages of industry-led codes is the way they can incorporate expertise from the companies developing the technology.

“The codes were developed as 'principles based', rather than a prescriptive set of rules, and the flexibility embodied in this approach helps ensure the codes can be adapted and applied within a rapidly evolving digital information environment.”

According to The Guardian, the eSafety Commissioner said regulators are already aware of bad actors using burgeoning AI tools for illicit purposes, including generating child abuse material.

In August, Grant flagged the first reports of students using AI to generate sexually explicit content to bully other students, and in June the commissioner forecast an aggressive plan to force big tech into scanning Australians’ emails, photo libraries, cloud storage and dating sites for illegal content.

The eSafety Commissioner has campaigned staunchly against online hate and child abuse material in recent months – particularly given the uncertainty surrounding new AI technologies.

Following the code’s registration, Grant said her team will turn its attention to creating standards for the relevant electronic service (RES) sector, which covers a range of online communication services, and the designated internet services (DIS) sector, which covers providers of apps, websites, and file and photo storage services.