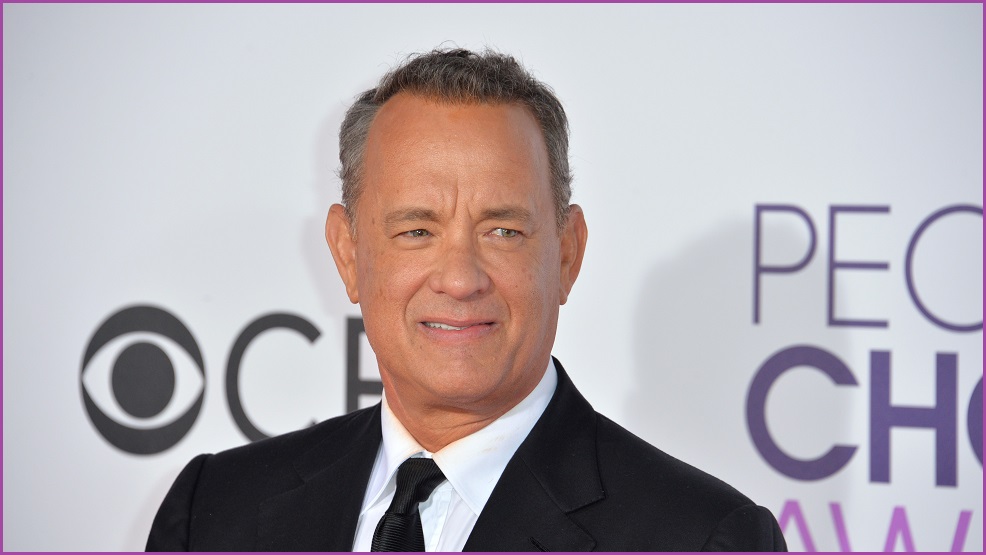

Actor Tom Hanks is one of the latest celebrities to be impersonated by AI technology that is equipping scammers with new means of exploitation and sparking widespread concern over media integrity.

On Monday, Hanks posted a warning to his fans on social media app Instagram regarding an advertisement which used generative AI to mimic his likeness.

The online video promoted a “dental plan” using an “AI version” of Hanks to lure in viewers – something the A-list actor never consented to.

“BEWARE!! There’s a video out there promoting some dental plan with an AI version of me,” wrote Hanks.

“I have nothing to do with it.”

The video prompted renewed concern over deepfakes: an increasingly prominent method of video and audio imitation which uses deep learning AI technology to fabricate events using the likeness of a person who has often not consented.

Hanks is one of many voices in film and television actively speaking out against the rise of AI-driven impersonations.

In May, he spoke about how the technology can both mislead viewers and enable a sci-fi-like possibility for actors to continue “acting” after death.

“Anybody can now recreate themselves at any age they are by way of AI or deep fake technology,” Hanks said on an episode of The Adam Buxton Podcast.

“I could be hit by a bus tomorrow and that’s it, but performances can go on and on and on and on.”

The technology has caused particular unrest in Hollywood, where – after five months of industrial action – writers recently secured a tentative agreement with studios to impose strong limits on the use of artificial intelligence in writers’ rooms, and where actors continue to strike to retain control of their likenesses.

In addition to threatening the jobs of creatives and creating dystopian dilemmas over the use of celebrity likenesses, deepfake technology has also proved a significant boon for scammers.

On Tuesday, popular YouTuber Jimmy Donaldson, known professionally as MrBeast, warned fans of a deepfake scam using his likeness that was circulating on social media platform TikTok.

“Lots of people are getting this deepfake scam ad of me…” Donaldson said on social media platform X (formerly Twitter).

“Are social media platforms ready to handle the rise of AI deepfakes? This is a serious problem.”

The video sees an AI version of Donaldson telling viewers they’re one of “10,000 lucky people who’ll get an iPhone 15 Pro for just $2”, before directing them towards a suspicious link.

Donaldson – who regularly hosts public giveaways – has a fanbase composed largely of people under 25, with a recent X poll showing nearly 40 per cent of viewers are aged between 10 and 20.

According to cyber security expert and RMIT University professor Asha Rao, young people have grown more susceptible to online scams over recent years – leading scammers to increase their efforts against youth demographics.

“Despite being more internet savvy, young people are more vulnerable to online scams, especially in the current economic climate,” said Rao.

“Younger people are also more exposed to online scams as they are more likely to dip their toes into new, foreign technology.”

Other celebrities who have been imitated in scam advertisements include adventurer Bear Grylls and X owner Elon Musk – who was recently deepfaked in a “get rich quick” video claiming Australians can receive “an income of $5,700 a day” as part of an ostensible investment project.

To tell a real video from a deepfake, common advice is to look for signs of facial manipulation – such as uncanny smoothness, excessive wrinkles or misplaced shadows – as well as other telltale signs such as blurry video quality, choppy audio, or a lack of bodily movement from the speaker.

However, as AI technology continues to develop at a breakneck pace, these tips may prove less useful in coming years, if not months.