Exclusive: China-based DeepSeek has quickly become the most blocked artificial intelligence model in Australia, with many local organisations also “taking a cautious stance” against other AI applications and chatbots, according to cloud security firm Netskope.

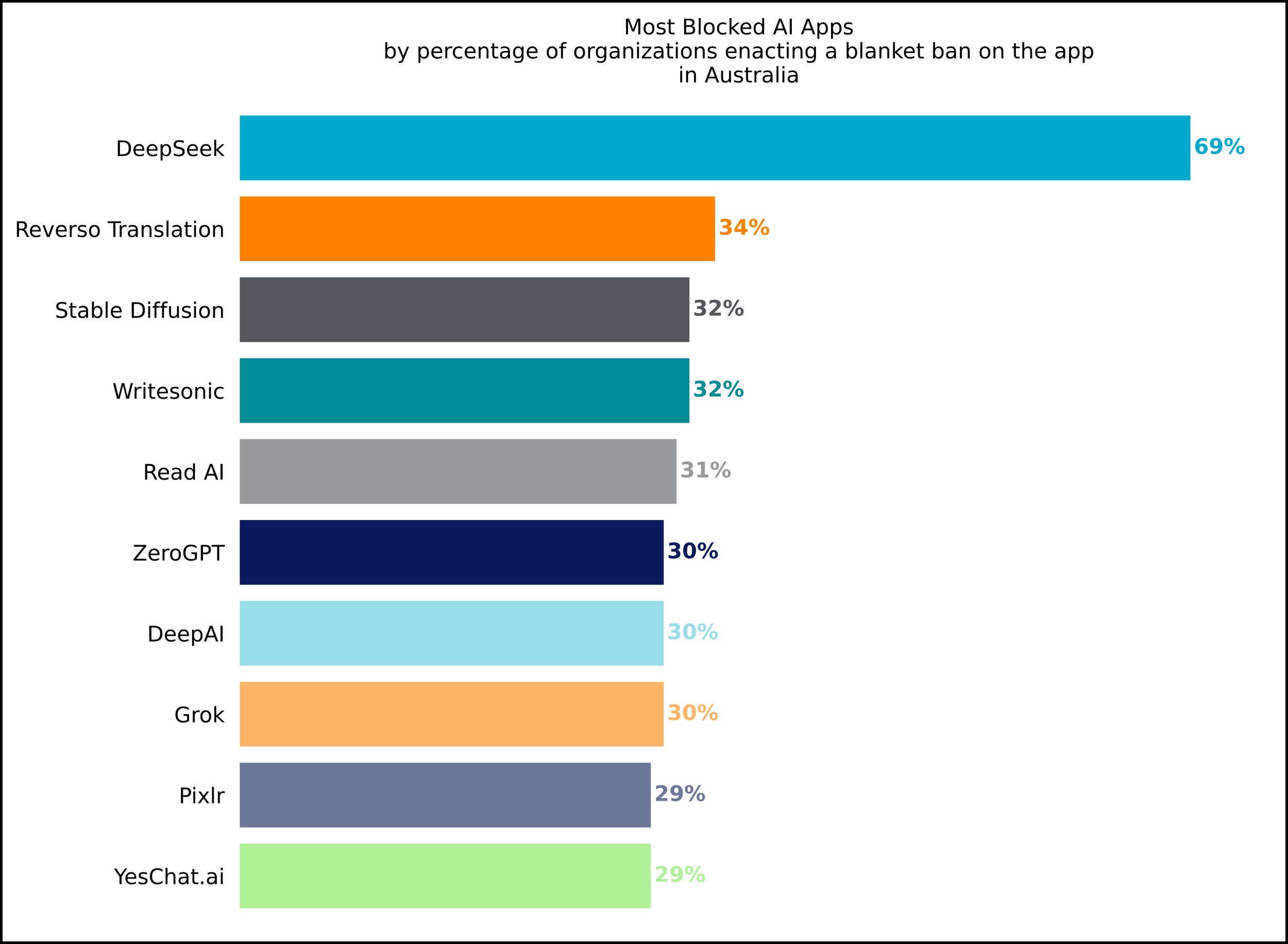

Almost 70 per cent of Netskope's surveyed Australian customers have enacted blanket bans on DeepSeek, according to data collated with permission covering hundreds of thousands of local users between June 2024 and July 2025, and detailed in a new report from the company’s threat defence division.

Netskope Threat Labs director Ray Canzanese told Information Age there were two key reasons why DeepSeek had become the most blocked AI app in Australia since the public launch of its R1 chatbot in January.

The first, he said, was that DeepSeek “was a latecomer to an already crowded market of generative AI chatbots” dominated by the likes of OpenAI’s ChatGPT, Google’s Gemini, and Microsoft’s Copilot.

The other key factor was geopolitical.

“DeepSeek is a Chinese company, so the legal and political environment in China [has led organisations to deem] DeepSeek to be too high-risk to share any data with, especially given the availability of so many alternatives,” Canzanese said.

DeepSeek’s R1 model rattled American tech stocks after its public debut, but also attracted concerns around privacy and national security as experts worried about the model’s censorship of some political and social topics, and whether the Chinese government would seek to access its data.

Information Age exclusively reported in February that DeepSeek had been blocked by several New South Wales government departments in the weeks following its launch.

The app was soon banned from Australian federal government devices after it was found to pose “an unacceptable risk” to national security.

DeepSeek was also blocked by many federal corporations such as NBN Co, the ABC, and Australia Post, even though such organisations were not obliged to do so under the federal government’s ban.

It was “doubtless” that government decisions over the safety of AI models such as DeepSeek had “influence over the technology and security decisions of private organisations”, Canzanese said.

Grok, Stable Diffusion, other AI systems blocked

While Netskope found DeepSeek had been blocked by most of its surveyed Australian clients, around a third had banned other AI apps such as Stability AI’s text-to-image model Stable Diffusion and the controversial Grok chatbot from Elon Musk’s xAI.

Grok, which is partly tied to Musk’s social media platform X (formerly Twitter), went on an anti-Semitic tirade in July and recently allowed users to create explicit nude deepfake videos.

Image: Netskope / Supplied

Despite such controversies, the Grok large language model (LLM) had still become the ninth most popular AI app within Australian organisations since launching in late 2023, Netskope found.

This likely indicated “two different approaches to managing genAI risk”, Canzanese suggested.

“A proportion of organisations are taking a cautious stance by enacting a blanket ban on the app, but there are other more granular ways to secure application use, which generally suggests a ‘safe enablement’ strategy,” he said.

“Data loss prevention tools and real-time user coaching tools are often applied instead of a blanket block, which allows users to experiment and test the value of an app within rigorous guardrails.”

Other AI apps blocked by around a third of Australian organisations included French-based translation app Reverso, AI-generated text detector ZeroGPT, Singaporean media generator Pixlr, and YesChat.ai, which offers access to DeepSeek.

Businesses often blocked such apps because they posed “a disproportionate risk due to questionable data handling practices or model transparency issues”, Canzanese said.

Australians often shared their employer’s intellectual property or source code with genAI apps, with such information accounting for more than 70 per cent of local data policy violations, according to Netskope.

An increasing number of Australian organisations were “exploring on-premises genAI to maintain control over sensitive data”, Netskope found. Some had begun using tools that alerted employees when they were taking unapproved actions and offered advice to “empower them to make the decision whether or not to do it anyway”, Canzanese said.

“Blocking is not always an effective control because, when done too aggressively, it can drive users to bypass the block by doing things that compromise security, such as switching to an unmanaged device or trying to disable security software,” he said.

ChatGPT business use drops for first time

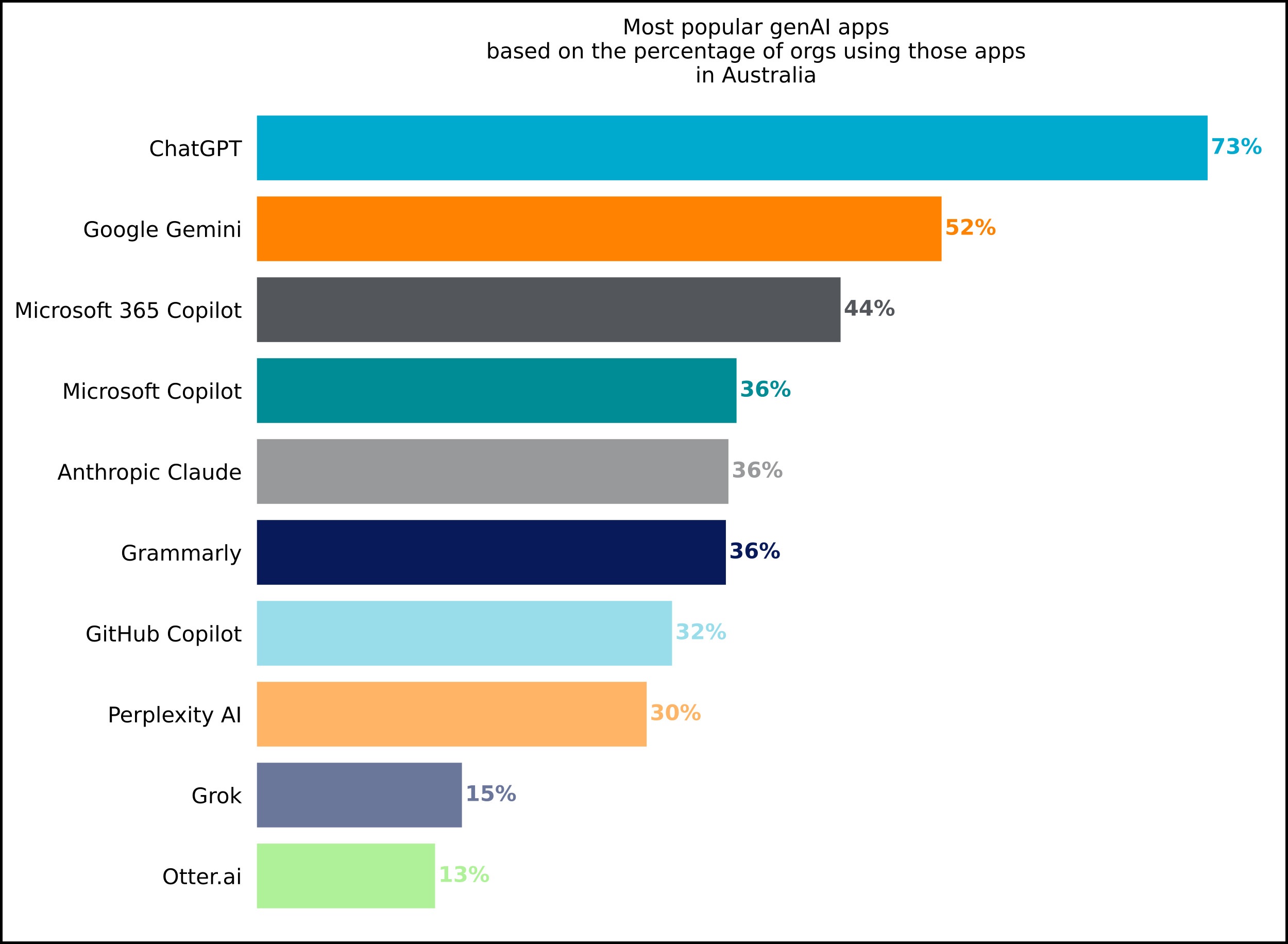

While Australian adoption of genAI apps has continued to grow, Netskope also documented “the first decline we have ever observed in ChatGPT’s popularity [in Australia]” since the chatbot's launch in 2022, which aligned with global trends.

“This is largely due to a shift towards purpose-built tools such as Microsoft 365 Copilot or Google Gemini that are better integrated into existing enterprise productivity suites, and therefore more widely deployed by organisations as their default, company-approved genAI applications,” Canzanese said.

Image: Netskope / Supplied

ChatGPT remained the most popular AI app in Australian businesses, with more than 70 per cent of employees using it, according to the report, followed by Google’s Gemini and Microsoft 365 Copilot.

Other popular apps included Anthropic’s Claude LLMs, AI writing and grammar checker Grammarly, programming assistant GitHub Copilot, and Perplexity’s web search AI, which were all used in around a third of local organisations.

Grok and AI transcription app Otter.ai had been used by just over 10 per cent of Netskope’s surveyed Australian clients, the company found.

“GenAI adoption is accelerating across Australia, with 87 per cent of organisations now using genAI applications, up from 75 per cent just a year ago,” the company said.