Experts say it is “highly plausible” cybercriminals used AI-based voice deepfakes to trick Qantas contact centre staff in Manila into providing access to the data of almost six million customers, amid conflicting media reports about whether the technology was used.

Australia's largest airline announced a “significant” data breach two weeks ago following “unusual activity on a third-party platform” which allowed non-financial information such as names, phone numbers, email addresses, and residential addresses to be stolen.

While Qantas would not confirm to Information Age whether AI voice deepfakes were used in the breach, the cybercrime group that experts believe may be linked to the hack, dubbed 'Scattered Spider', has a track record of using voice-based phishing (or ‘vishing’) in its attacks.

David Tuffley, a senior lecturer in applied ethics and cybersecurity at Griffith University, said the hacker group often used “AI-generated voices for phone or video impersonation”.

“This is how they pressure support staff into handing over access credentials,” he said.

“We don't have official confirmation, but the scenario is highly plausible and consistent with how this group does their dastardly business.”

Adam Marré, a former FBI agent who is now chief information security officer (CISO) at cybersecurity firm Arctic Wolf, also said that if Scattered Spider was behind the Qantas attack, as experts had predicted, it was “plausible” the group had used vishing.

“While it hasn’t been formally named as the threat group behind the Qantas cyberattack, Scattered Spider is part of ‘The Com’ — a loosely-knit collective of financially-motivated cybercriminals specialising in sophisticated social engineering campaigns,” Marré said.

Another group in The Com, dubbed UNC6040, recently targeted software provider Salesforce, of which Qantas is a customer, with “a vishing campaign to impersonate IT staff and gain backdoor access to company networks”, he added.

A 'significant' data breach of a third-party platform used by a Qantas contact centre in Manila saw almost six million customers' data stolen. Image: Qantas

Qantas reportedly warned workers of hacker threat

Qantas had reportedly warned contact centre staff of potential threats in the days before its breach occurred, with IT teams told the airline had “triaged a recent threat advisory from Google Cloud regarding the threat group Scattered Spider”, according to the Australian Financial Review.

The warning allegedly urged staff to be vigilant for cybercriminals who might attempt to impersonate employees and use phone-based social engineering to change or recover Qantas accounts.

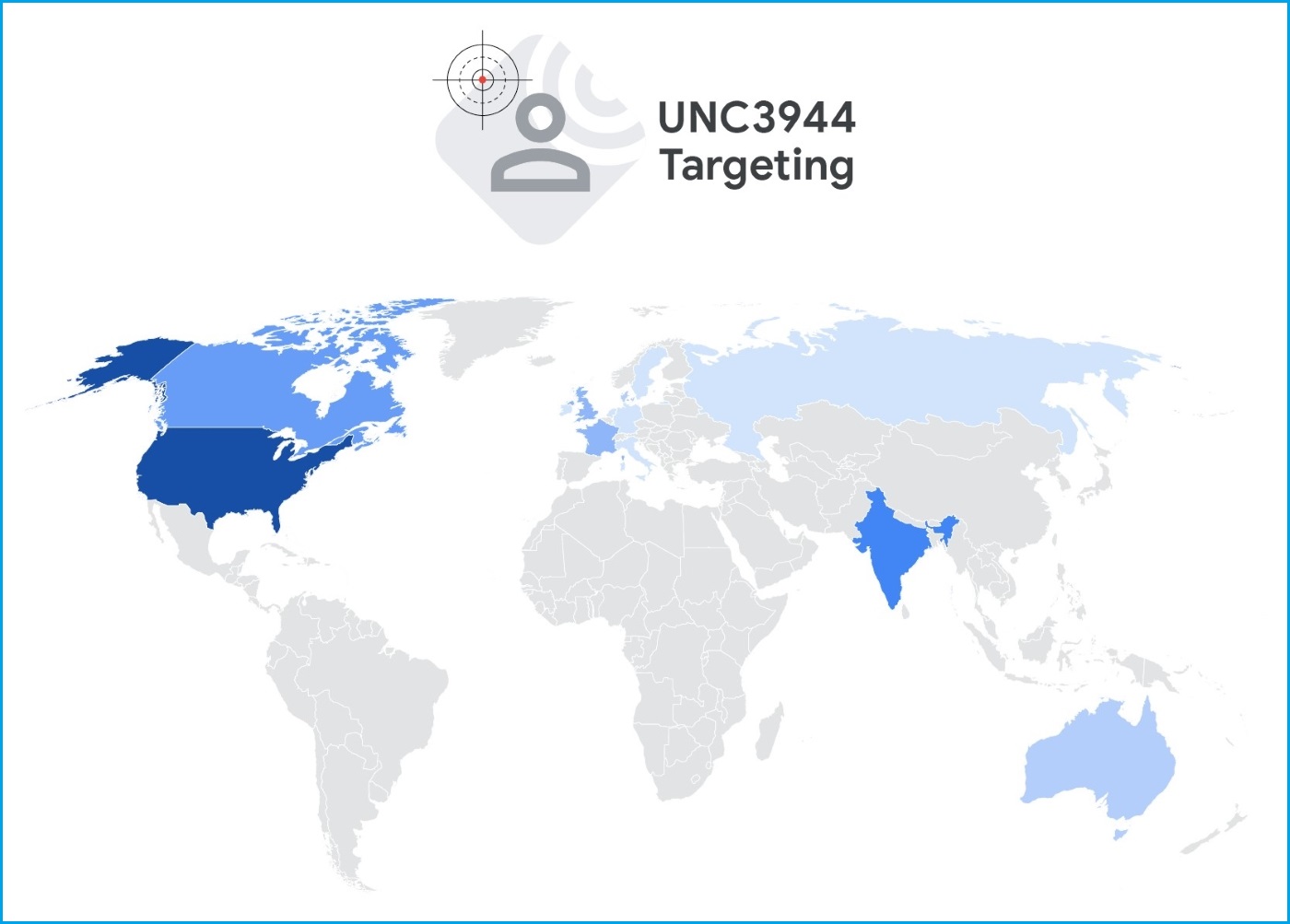

Google Cloud’s cybersecurity teams had earlier warned companies that Scattered Spider — also known as UNC3944 — had targeted prominent brands and industries using social engineering attacks and voice impersonations.

“Their operators frequently call corporate service desks, impersonating employees to have credentials and multi-factor authentication (MFA) methods reset,” Google’s Nick Guttilla wrote in June.

“This access is then leveraged for broader attacks, including SIM swapping, ransomware deployment, and data theft extortion.”

Cybersecurity giant CrowdStrike said Scattered Spider had “used help desk voice-based phishing in almost all observed 2025 incidents”.

The FBI also warned it had observed Scattered Spider “expanding its targeting to include the airline sector”.

“They target large corporations and their third-party IT providers, which means anyone in the airline ecosystem, including trusted vendors and contractors, could be at risk,” the bureau said in June.

Scattered Spider — also known as UNC3944 — has targeted major companies and industries in several countries, including Australia. Image: Google Cloud

Qantas has not confirmed whether it has received a demand for a ransom payment to prevent publication of the stolen data.

The airline last week said it had been contacted by a “potential cybercriminal”, but maintained its systems remained secure.

Qantas said it was working with government agencies and federal police, and had told impacted customers to “stay alert” to potential scams that may use their stolen information to appear more legitimate.

The company said it had implemented “additional security measures” since the breach to “further restrict access and strengthen system monitoring and detection” around customer accounts.

Voice deepfakes ‘starting at just $US5’

A July report from cybersecurity company Trend Micro found “the market for AI-generated voice technology is extremely mature” and barriers to entry such as cost were “surprisingly low”, with many platforms offering “decent output starting at just $US5”.

The company said that because many voice synthesis services now also offered multilingual output and the “ability to control pronunciation, intonation, and emotion”, malicious users could “craft persuasive and emotionally manipulative audio clips in different languages”.

“Even more concerning is how easy it has become to generate audio deepfakes,” the company said.

“Many services now offer one-shot voice generation, where just a few seconds of source material is enough to create a convincing replica of someone’s voice.”

Tuffley from Griffith University said the quality of AI voice deepfakes had improved faster than that of visual deepfakes, which typically attempt to replicate a person's face.

The best defences against voice deepfakes involved using systems which “verify unique vocal patterns, check for signs of synthetic audio, and require real-time responses that are hard for AI to simulate”, he said.

“… And of course staff training, since most breaches happen because people are tricked into allowing access.”

Arctic Wolf's Marré said organisations could attempt to defend against advanced attacks by requiring verification beyond a voice or a face — “such as a rotating code word or a ticketing system” — as well as implementing multi-factor authentication and “assessing third-party risks”.

AI scammers copy US Secretary of State’s voice

Just days after the Qantas breach was announced, The Washington Post reported that an unnamed fraudster had used AI to impersonate the voice of US Secretary of State Marco Rubio while contacting at least five senior US and international officials.

The imposter allegedly sent fake voice and text messages which copied Rubio’s voice and writing style, according to a leaked US State Department cable which reportedly stated the fraudster’s goal was “gaining access to information or accounts” of government officials.

US Secretary of State Marco Rubio (left) was a recent victim of an AI voice deepfake scam. Image: The White House / X

Marré said the Rubio incident revealed “an unsettling reality” in which “it’s hard to know what is real on our screens or in our inboxes”.

He argued trusting human judgement alone would not work as the technology continued to improve.

“Technology is advancing rapidly, and today’s deepfakes are the worst they will ever be,” Marré said.

“As quality improves, even trained humans will be fooled.”

AI voice deepfakes have been used to mimic other politicians, with a consultant fined $9 million ($US6 million) in 2024 over fake robocalls which mimicked then-US President Joe Biden's voice and urged voters not to vote in New Hampshire's Democratic primary election.

In May 2025 the FBI said it had launched an investigation after someone allegedly attempted to impersonate White House chief of staff Susie Wiles’s voice on calls to her contacts — some of whom reportedly believed AI may have been used.

Paul Haskell-Dowland, an ACS Fellow and cybersecurity professor at Edith Cowan University, said obtaining audio or video to create voice deepfakes was becoming “much easier” given the amount of content shared on social media.

“Those with very public profiles are obviously easier targets, but anyone posting audio or video content is a potential target,” he said.