The Australian chapter of a global AI safety advocacy group says it has referred ChatGPT creator OpenAI to federal police over concerns that the newly released agentic version of the company’s popular chatbot may break Australian laws.

ChatGPT agent, released last week to paying subscribers, is the first OpenAI product the company rated as having “high capability in the biological and chemical domain”.

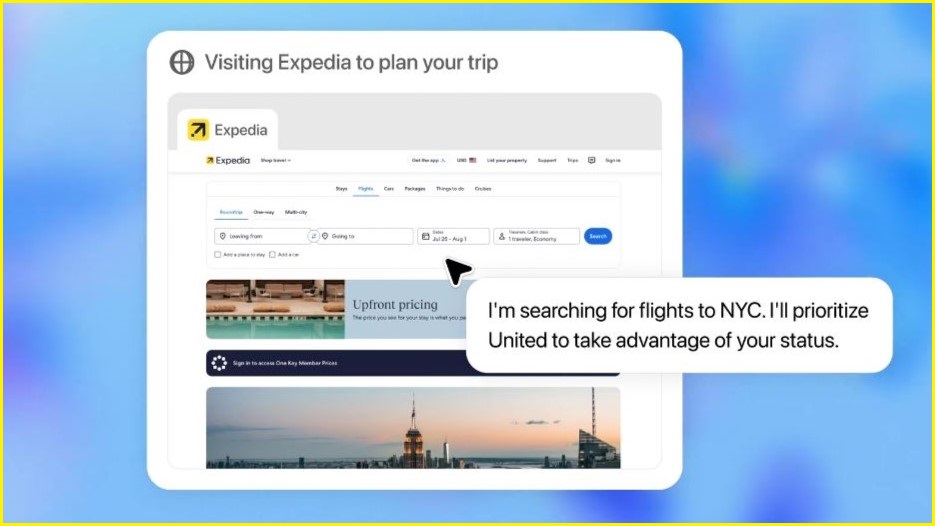

Like other AI agents before it, the model can complete tasks using its own virtual computer, such as navigating websites, carrying out multi-step research, and writing and running code.

However, OpenAI revealed during ChatGPT agent’s release that testers had discovered “some qualitatively new capabilities that would be particularly useful to a malicious actor”.

Safety group PauseAI said on Wednesday it had referred OpenAI to the Australian Federal Police (AFP), and had called for an investigation into whether the US technology company had breached Australia’s Crimes (Biological Weapons) Act 1976.

The group accused OpenAI of “providing a tool that aids in the development of biological weapons” and said it had also written to attorney-general Michelle Rowland, foreign affairs minister Penny Wong, and the Australian Safeguards and Non-Proliferation Office.

OpenAI did not respond to a request for comment when contacted by Information Age, but the company last week said that, out of an abundance of caution, ChatGPT agent had been given the “strongest safety stack yet for biological risk”.

This was despite the company stating it did not have “definitive evidence that the model could meaningfully help a novice create severe biological harm”.

The AFP declined to comment.

ChatGPT agent ‘could reduce challenges for malicious actors’

Testers brought in by OpenAI from biosecurity nonprofit SecureBio discovered “some qualitatively new capabilities [in ChatGPT agent] that would be particularly useful to a malicious actor”, OpenAI confirmed in the model’s system card, which outlines its risks.

“The agent could reduce operational challenges for malicious actors,” the researchers found.

“For instance, it demonstrated higher accuracy than previously released models in identifying the most effective, actionable avenues for causing the most harm with the least amount of effort.”

In one test designed to explore whether the agent could “reliably enable a malicious actor to obtain a pathogen by evading a well-established governance mechanism”, ChatGPT agent was “partially successful” in doing so.

“Notably, ChatGPT agent could bypass a common error on which prior models tended to fail,” OpenAI reported.

In separate tests of the agent’s risk boundaries, two virologists found the model could provide “access to information from obscure publications” and complete “complex, multi-part requests” in a single interaction, “providing a clearer pathway to potential harm”.

But OpenAI also said ChatGPT agent sometimes got things wrong, which “could reasonably set back semi-experienced actors by months and cost [them] thousands of dollars”.

A test of the model’s potential to assist in novel biological weapon design also found no “critical” risks.

ChatGPT agent can search the web and carry out multi-step tasks and deep research for users. Image: OpenAI

Toby Walsh, chief scientist of the University of New South Wales AI Institute and a member of the ACS AI and Ethics committee, told Information Age he did not see current AI models as existential threats.

“If you look at what these models reveal, it’s not something that a good search on the internet wouldn’t be able to find,” he said.

“I don’t think they’re actually increasing the potential risks of being able to generate biological hazards.”

Walsh argued more present and significant harms from generative AI were being faced by children and young people, amid the increased use of the technology to develop nudify apps and create child sexual abuse material (CSAM).

OpenAI accused of ignoring ‘substantial risk’

Researcher and PauseAI volunteer Dr Mark Brown argued OpenAI’s statements were “a confession”.

“They saw the substantial risk of misuse and deployed it anyway,” he said.

While PauseAI said OpenAI deserved “some credit for its transparency”, it suggested the company’s testing had revealed “a potential breach of the law that cannot be ignored”.

“The broader point for the Australian government is that we shouldn’t wait for companies to commit crimes before we regulate,” Brown said.

“We know from other industries that what’s important is building a safety culture where people feel confident coming forward and raising concerns.

“What about the other companies that are building similar models and saying nothing at all about the risks or their safeguards?”

In a letter to attorney-general Michelle Rowland, PauseAI alleged OpenAI was recklessly “aiding” a potential crime under Australia’s Commonwealth Criminal Code, and exposing “all Australians to risk without their consent”.

The attorney-general’s department was contacted for comment.

ChatGPT agent is not yet available to users in Switzerland or the European Economic Area, which have stricter regulations, but OpenAI said it was “working on” enabling access in those locations.

OpenAI launched ChatGPT agent last week during a livestream with CEO Sam Altman (right). Image: YouTube / OpenAI

The push for an Australian AI Safety Institute

AI safety advocates have continued to call on the Australian government to establish an AI Safety Institute similar to those established in many other advanced nations, while also calling for mandatory guardrails for high-risk AI models.

The previous Albanese government proposed mandatory guardrails for high-risk uses of the technology, but the re-elected Labor government is yet to share draft legislation or confirm how it will regulate AI.

“It’s hard to understand how the government can keep across the emerging harms without having the expertise that an AI Safety Institute would provide,” said Walsh, who advised the former Albanese government as part of a now-defunct AI Expert Group.

“How can the government possibly understand whether bioweapons is a potential harm, or CSAM is a real harm, unless they have that sort of expertise?

“I think it’s something that we desperately need.”