More than 64 million job applicants for McDonald’s have had their personal information exposed due to a security oversight in an AI chatbot.

In 2019, fast-food giant McDonald’s collaborated with conversational AI platform Paradox.ai to deploy a hiring chatbot named ‘Olivia’.

Olivia has since been used domestically and abroad to screen applicants, determine shift preferences, collect resumes, conduct personality tests and ultimately reduce McDonald’s time to hire by 60 per cent.

But for all its time-saving capabilities, researchers found part of Olivia’s administration interface was left accessible through a test account whose username and password were both ‘123456’.

By combining this with a vulnerability in an internal API, security researchers Ian Carroll and Sam Curry said they were able to access the data of more than 64 million applicants.

“We immediately began disclosure of this issue once we realised the potential impact,” the researchers said.

The exposed data reportedly included names, addresses, email addresses, phone numbers, and authorisation tokens which could be used to access raw chat messages.

McDonald’s Corporation told Information Age the company was “disappointed” by the “unacceptable vulnerability” from Paradox.ai.

“As soon as we learned of the issue, we mandated Paradox.ai to remediate the issue immediately, and it was resolved on the same day it was reported to us,” said McDonald’s.

“We take our commitment to cybersecurity seriously and will continue to hold our third-party providers accountable to meeting our standards of data protection.”

McDonald’s is one of Australia’s largest employers, boasting more than 115,000 workers domestically and over 2.15 million globally.

Would you like an IDOR flaw with that?

Carroll and Curry noticed the issue after they came across a Reddit thread which showed Olivia responding to a job applicant with “nonsensical answers”.

When testing the job application process themselves, the researchers noticed a login link for Paradox.ai team members.

“Without much thought, we entered ‘123456’ as the username and ‘123456’ as the password and were surprised to see we were immediately logged in,” they wrote.

The researchers had effectively become administrators of a test restaurant in McDonald’s ‘McHire’ system, where they went on to discover a simple API flaw known as an insecure direct object reference (IDOR).

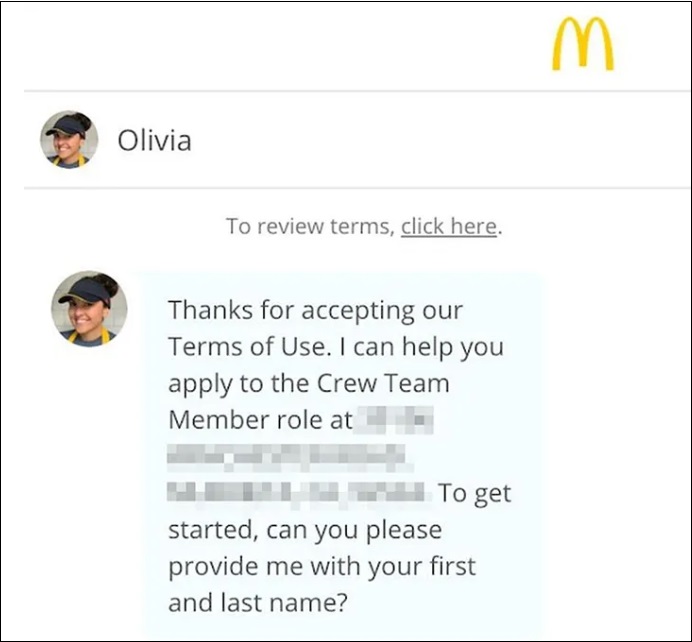

McDonald's chatbot, 'Olivia'. Image: Supplied

This flaw allowed them to change the ID values passed to the API and view information belonging to other McDonald’s applicants.

“We quickly realised this API allows us to access every chat interaction that has ever applied for a job at McDonald’s,” the researchers wrote.
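To illustrate how an IDOR works, here is a minimal Python sketch. The record store, account names and function names are all hypothetical and do not reflect McHire’s or Paradox.ai’s actual API; the point is only the missing ownership check. A caller who is logged in to a low-value account can walk through sequential IDs and pull other people’s records, because the vulnerable handler trusts whatever ID the client sends.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory store of applicant records keyed by sequential IDs.
# Names and structure are illustrative only, not Paradox.ai's actual schema.
@dataclass
class Applicant:
    applicant_id: int
    owner_account: str  # the account permitted to view this record
    name: str
    email: str

RECORDS = {
    1001: Applicant(1001, "test-restaurant", "Test Applicant", "test@example.com"),
    1002: Applicant(1002, "store-42", "Jane Citizen", "jane@example.com"),
    1003: Applicant(1003, "store-77", "John Smith", "john@example.com"),
}

def get_applicant_vulnerable(session_account: str, applicant_id: int) -> Optional[Applicant]:
    # IDOR: the caller is authenticated, but the handler trusts the
    # client-supplied ID and never checks who owns the record.
    return RECORDS.get(applicant_id)

def get_applicant_fixed(session_account: str, applicant_id: int) -> Optional[Applicant]:
    # Object-level authorisation: only return records the caller owns.
    record = RECORDS.get(applicant_id)
    if record is None or record.owner_account != session_account:
        return None
    return record

if __name__ == "__main__":
    session = "test-restaurant"
    # An attacker logged in to a low-value account simply walks the ID range.
    leaked = [r for i in range(1000, 1004) if (r := get_applicant_vulnerable(session, i))]
    blocked = [r for i in range(1000, 1004) if (r := get_applicant_fixed(session, i))]
    print(len(leaked), len(blocked))  # 3 1
```

The fix sketched here is an ownership check on every request. Switching to random, non-sequential IDs makes enumeration harder, but it is not a substitute for checking that the caller is allowed to see the record.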

Notably, a Paradox.ai blog post said McDonald’s had adopted conversational AI across 90 per cent of its global franchises, though this blog post has since been edited to remove any mention of McDonald’s.

Paradox owns mistake

Despite Paradox.ai’s security page boasting that users “don’t have to” worry about security, Curry and Carroll could not locate any publicly available disclosure contact to report their findings.

“We had to resort to emailing random people,” they wrote.

“After our outreach reached the appropriate people, the Paradox.ai team engaged with us.”

Paradox.ai later said in a blog post that no “candidate information” was leaked online or made publicly available, and that no other Paradox clients were impacted.

“Once we learned of this issue, the test account credentials were immediately revoked and an endpoint patch was deployed, resolving the issue within a few hours,” Paradox.ai wrote.

Paradox.ai noted the test account used for the exploit hadn’t been logged into since 2019 and conceded that it “frankly should have been decommissioned”.

“We are launching several new security initiatives including providing an easy way to contact our security team,” said Paradox.ai.

Following the incident, Ray Canzanese, director of Netskope Threat Labs at cybersecurity firm Netskope, said the pressure on teams to build AI-based solutions risks “shadow AI” being created without proper oversight.

“Custom apps and agents require careful attention to ensure that they are properly secured and governed,” said Canzanese.

Indeed, Curry told Information Age he’d already seen “a dozen websites” with simple, easy-to-miss vulnerabilities, which likely existed only because code generation tools “fail to understand the broader contexts of the apps they are building”.

“With models today, you have to have paragraphs of precursor to ask that the generation abide by security standards,” said Curry.

“Even then, it can often miss the easy issues.”