A single sticker placed on a speed sign is enough to trick Tesla's Autopilot system, new research has found.

McAfee's Steve Povolny and Shivangee Trivedi successfully tricked a Tesla's Autopilot into reading a 35mph speed limit sign as 85mph.

The demonstration builds on the team's earlier research into what it calls 'model hacking', or 'adversarial machine learning'.

First, the team found weaknesses in the machine learning algorithms used by advanced driver-assistance systems.

Working in a lab with a webcam and an AI model trained to recognise speed signs, the researchers explored ways to make the model misclassify a simple sign.

After confirming that some Tesla vehicles have a speed assist system that scans their surroundings for signs, the team took a colleague's Tesla to a test track. There, they tricked it into misreading a 35mph sign and automatically accelerating towards 85mph.

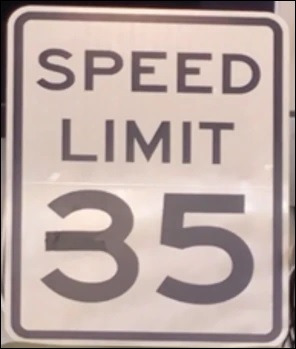

Startlingly, the only change to the sign was a small black sticker extending the middle stroke of the 3, a modification subtle enough that a human might not notice it.

A photo of the sticker used to cause a misclassification. Source: McAfee

The team was unable to replicate the attack on a 2020-model Tesla, although its testing was limited. It warned, however, that "the vulnerable version of the camera continues to account for a sizeable installation base among Tesla vehicles".

McAfee researchers showed off the concept at the MPower conference in Las Vegas last year.

Speaking to Information Age at the time, Steve Povolny, head of McAfee's Advanced Threat Research team, said this attack vector was "probably a good decade out from real world attacks".

He stressed the importance of discovering new cyber vulnerabilities early in order to prevent future catastrophes.

“Where we would predict this becomes a problem is when you have fleet mentality of vehicles and where you can cause damage to multiple vehicles at the same time,” Povolny said.

“You could potentially disrupt an entire transportation supply chain and really have a fundamental impact on the economy.”

How safe is Tesla Autopilot?

Weaknesses in Tesla's Autopilot system have been highlighted on numerous occasions.

Most recently, an analysis of a fatal 2018 crash revealed that the driver, Walter Huang, had reported to Tesla a glitch that caused his car to drift off the road.

Huang had experienced the error at the same location multiple times. In the fatal crash, his Tesla was travelling at 112km/h when it smashed into a concrete divider at that same spot, killing him.

Earlier this year, Teslas were involved in two fatal crashes that killed three people in a single day.

Since 2016, the US National Highway Traffic Safety Administration has investigated 13 crashes involving Tesla's semi-autonomous driving systems.

More than 110,000 people have died in car crashes in the US since 2016.

Around 1,200 people die on Australian roads every year.