Controversial facial recognition company Clearview AI has offered its services to the Ukrainian government in order to help discover Russian agents, fight misinformation, and identify the deceased, as first reported by Reuters.

In a letter seen by Information Age, Clearview AI’s Australian CEO Hoan Ton-That told Ukrainian officials that their government was eligible for “free training and usage” of the facial recognition tool “during this time of terrible conflict”.

“Clearview AI’s database contains photos from all around the world, and a large portion from Russian social media sites such as Vkontakte,” Ton-That said.

“We have over two billion images from the Vkontakte social network in the Clearview AI database.”

Clearview AI’s facial recognition database is built on images scraped from the web without users’ consent.

Australia’s Information Commissioner has ordered Clearview to delete Australian images, and the company is facing lawsuits across the US and in Europe.

Still, the Ukrainian government apparently took up Ton-That’s offer and has started trialling Clearview’s facial recognition software, according to Reuters.

Founder of the Surveillance Technology Oversight Project, Albert Fox Cahn, described the use of Clearview in the Ukraine conflict as “grotesque”.

“When police facial recognition makes mistakes we see innocent people wrongly arrested,” he tweeted. “When military face scans are wrong, civilians will get killed.”

Clearview offered four potential use cases for its surveillance tool, saying it could be used to weed out Russian infiltrators, identify people who died in the war, help reunite refugee families, and even fight misinformation.

“My heart goes out to the Ukrainian people, and my hope is our technology could be used to prevent harm, save innocent people, and protect lives,” Ton-That said in his letter.

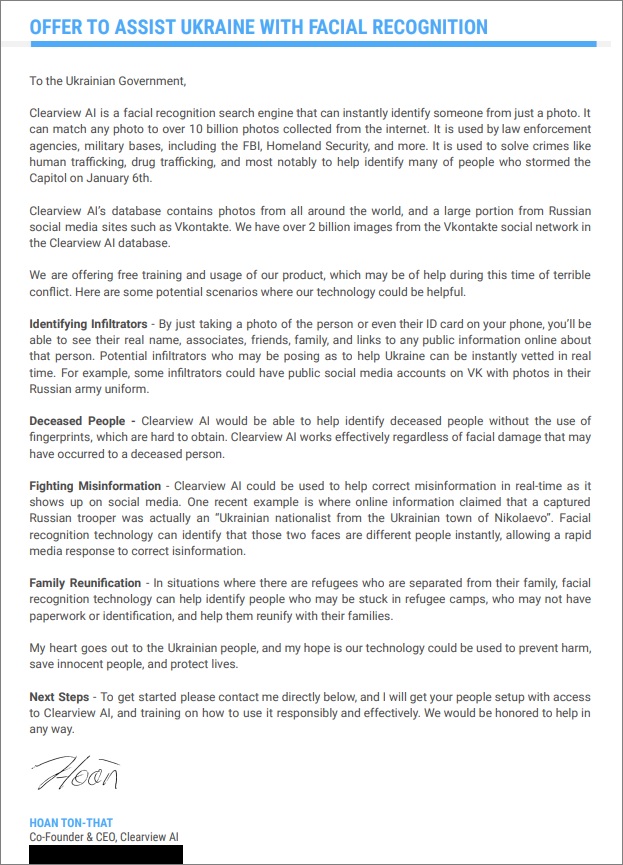

The letter Clearview AI sent to the Ukrainian government offering its software as a service. Image: supplied

Ever since a New York Times investigation first shed light on the company in early 2020, Clearview AI has been mired in controversy, in no small part because of the way it has sought to normalise law enforcement and government adopting private surveillance technology.

The company has marketed itself by offering free trials to would-be buyers – initially detectives and police officers – who were given test accounts, often without appropriate oversight or procurement processes.

In February 2020, the company was breached, exposing a list of its users that included email addresses from Australian police departments.

Initially, none of the departments admitted to knowing about the secret use of this surveillance technology; the Australian Federal Police later acknowledged that seven of its officers had signed up for the software.