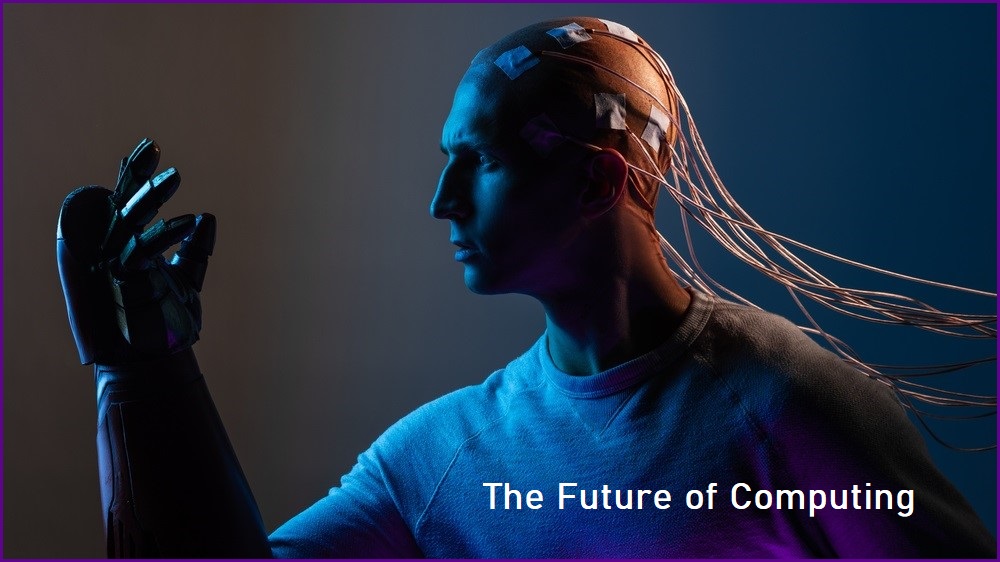

This three-part series looks at brain-computer interfaces: what they are, what they could become, and the implications of connecting our brains directly to computers.

In part one, we talk about how to connect directly to brain matter and the possibilities this could bring to life.

Imagine using a computer with your thoughts. No more typing and scrolling: you just think about sending an email and off it goes.

This kind of technological telepathy is the broad idea behind the brain-computer interface (BCI).

Also known as brain-machine interfaces, these devices are intended to bypass our typical modes of interacting with computers – hands, fingers, and voices – in favour of capturing and interpreting signals directly from the brain.

The possibilities of this technology are so wide-reaching that we could one day have near-instant communication with anyone on Earth using just our minds.

These devices could also let you control robotic limbs or pilot drones and, as virtual reality continues to develop, you may be able to move around digital worlds without lifting a finger.

In scientific literature, the concept of BCIs goes back at least as far as a 1973 paper from Jacques Vidal of the University of California’s Brain Research Institute, who pondered whether brain signals captured by an electroencephalogram (EEG) could be used “for the purpose of controlling such external apparatus as prosthetic devices or spaceships”.

Vidal described an early BCI built on the “conviction” that EEG signals “contain usable concomitances of conscious and unconscious experiences” that – though largely consisting of noise – still “reflects underlying neural events”.

BCIs could do more than read brainwaves – they may be able to write them as well, letting you ‘see’ a user interface where there is none, or even giving you a consistently calm mental state with the kind of direct brain access pharmaceutical companies could only dream of achieving.

These are just some of the potential future use cases for BCIs. They may sound like fantastical ideas ripped from the pages of science fiction novels, and that’s because much of this technology lies at the bleeding edge where science fiction meets science fact.

At the heart of modern BCI research and development is a striving for greater inclusivity. People living with disabilities and chronic health conditions are the immediate beneficiaries of this technology as they find new ways of communicating and interacting with the world and other people.

The foundations being laid in the name of creating a more inclusive world could one day lead to a general computing revolution that has implications for how we define autonomy and free will, how we preserve the privacy of our innermost thoughts and feelings, and what it means to be human.

An unreal vision

It’s a technology that, like much of the bleeding edge, involves billionaire Elon Musk and his outrageous, sci-fi promises.

In March 2017, early reports of Musk’s company Neuralink surfaced. When he began to speak publicly about the idea behind Neuralink, Musk mentioned the devices as a way of creating “symbiosis with artificial intelligence” in order to give people “superhuman cognition” and mitigate the “existential risk” Musk and other long-termist adherents believe is inherent in AI development.

Neuralink’s soft launch was nudged along with a long explainer from blogger Tim Urban about how BCIs could usher in a future where humans collectively and telepathically make decisions – something Musk insists is necessary lest humans become “effectively useless or like a pet” to AI.

“If we achieve tight symbiosis [with AI through BCIs], the AI wouldn’t be ‘other’,” Musk said. “It would be you and with a relationship to your cortex analogous to the relationship your cortex has with your limbic system.”

To get to this point, Musk wants to implant Neuralink devices into willing customers in order to both “report from and stimulate spikes in neuron activity”.

“It will be safe enough that it’s not a major operation and will be equivalent to LASEK surgery,” Musk previously said.

One day, Musk claims, the device will even let people “save and replay memories”.

As with many of his business ventures, Musk’s intended future state of Neuralink is as ambitious as it is divorced from the current technological reality.

Neuralink has yet to be approved for human trials and has been plagued by reports of animal cruelty during testing.

One of the few glimpses we have seen of Neuralink involves a monkey playing the video game Pong with its mind which, while impressive in its own right, failed to demonstrate new developments in the BCI space.

In mid-2004, researchers with the BrainGate project conducted human trials of their technology with a handful of patients who had spinal cord injuries or motor neurone disease. The patients were able to control a computer cursor and even issue simple commands to a prosthetic hand using their minds.

Leaving the lab

Today, much of the effort in BCI development is about taking the technologies out of the lab, according to Professor David Grayden, Clifford Chair of Neural Engineering at the University of Melbourne.

He told Information Age there has “been a lot of work trying to understand the [brain] signals, decode them, and use AI to interpret the signals, but with systems that are sort of unusable in the home”.

Moving BCI technologies into people’s homes will be a game changer for the people who use them and is part of a gradual adoption curve.

There are a few different ways of extracting your thoughts, Professor Grayden said, including the EEG technology explored 50 years ago by Jacques Vidal.

“[The electrodes] don’t need surgery to put on so it’s about trying to get the best possible signal from those,” he told Information Age.

“This is really difficult because the signal is attenuated a lot by the skull and the scalp so you really only get the low frequencies.”
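To get a feel for what “you really only get the low frequencies” means for a signal, here is a toy sketch in Python. It is not a model of the skull or scalp: the single-pole low-pass filter, the smoothing factor, the sample rate, and the two test frequencies are all assumptions picked purely for illustration. It passes a slow and a fast sine wave through the same filter and compares how much of each survives.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def low_pass(samples, alpha):
    """Single-pole low-pass filter (exponential smoothing).

    A stand-in for 'something that attenuates fast changes'; it is an
    assumption for illustration, not a physiological model.
    """
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way toward the input
        out.append(y)
    return out


def sine(freq_hz, sample_rate_hz, seconds):
    """Unit-amplitude sine wave sampled at sample_rate_hz."""
    n = int(sample_rate_hz * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]


SAMPLE_RATE = 1000  # samples per second (hypothetical)
ALPHA = 0.1         # smoothing factor (hypothetical)

slow = sine(10, SAMPLE_RATE, 2.0)    # a low-frequency rhythm
fast = sine(200, SAMPLE_RATE, 2.0)   # a high-frequency rhythm

# Fraction of each signal's amplitude that survives the filter.
slow_kept = rms(low_pass(slow, ALPHA)) / rms(slow)
fast_kept = rms(low_pass(fast, ALPHA)) / rms(fast)

print(f"10 Hz amplitude kept:  {slow_kept:.2f}")
print(f"200 Hz amplitude kept: {fast_kept:.2f}")
```

In this sketch the slow wave passes through largely intact while the fast wave is mostly flattened, which is the kind of loss of detail Professor Grayden describes for electrodes that sit outside the skull.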

Maximising the fidelity and interpretation of brain signals using EEG could be an important step toward broader BCI adoption and use because of its non-invasive nature.

You could imagine buying a consumer-grade headset with powerful enough electrodes to read signals via processing that happens on-board or on a nearby device.

Back in 2019, Meta (then Facebook) told the world about its own experiments with BCI technology in a blog post that described its research into decoding words and phrases captured from invasive electrocorticography (ECoG).

Meta’s researchers used this data to train a neural network that could translate a “sentence-length sequence of neural activity” into language.

Incredibly, the researchers claimed, in an article subsequently published in Nature Neuroscience, that the model could decode brain activity “with high accuracy and at natural-speech rates”.

Meta posed a possible future in which you could have the “hands-free convenience and speed” of using your voice to control your phone, tablet or laptop “with the discreteness of typing”.

Aside from ECoG, Meta also spoke about how it had tested measuring changes in oxygen levels in the brain that correspond to neural activity.

Combined with its research on decoding brain activity, Meta suggested that the ability “to recognise even a handful of imagined commands, like ‘home,’ ‘select,’ and ‘delete’ would provide entirely new ways of interacting with today’s VR [virtual reality] systems – and tomorrow’s AR [augmented reality] glasses”.

Here you can see the potential for consumer-grade BCIs with rudimentary mind-reading technology for walking around virtual worlds or controlling AR glasses.

But so far, the best way to control a computer with your mind is to have electrodes placed directly onto brain tissue.

“If you’re inside the skull, you can get higher frequencies and a better localisation of the signal,” Professor Grayden said.

“This involves putting penetrating electrodes down into people’s heads, kind of like a bed of nails that just gets pushed into the brain.

“Those electrodes are so small and they’re so close to the neurons that they can record even individual neuron activity.”

Localisation is important given how our brains process different information in different areas of tissue.

It’s an imperfect description of how brains work – there is plenty of overlap, with a single activity firing neurons in different sections of the brain – but it is nonetheless useful when trying to target specific areas or functions, such as the motor cortex, which controls voluntary movements.

The more invasive the BCI technology, the better the signal and localisation – but implanting anything onto the brain is fraught with difficulty.

In part two of this series, we will look at the limitations for the development of BCIs, an Australian company that has taken a novel approach, and some of the real-world uses of BCIs today.