Australian children on Instagram will be placed on restricted Teen Accounts within months under significant changes announced by Meta.

The news comes just a week after the government announced plans to ban children from accessing social media.

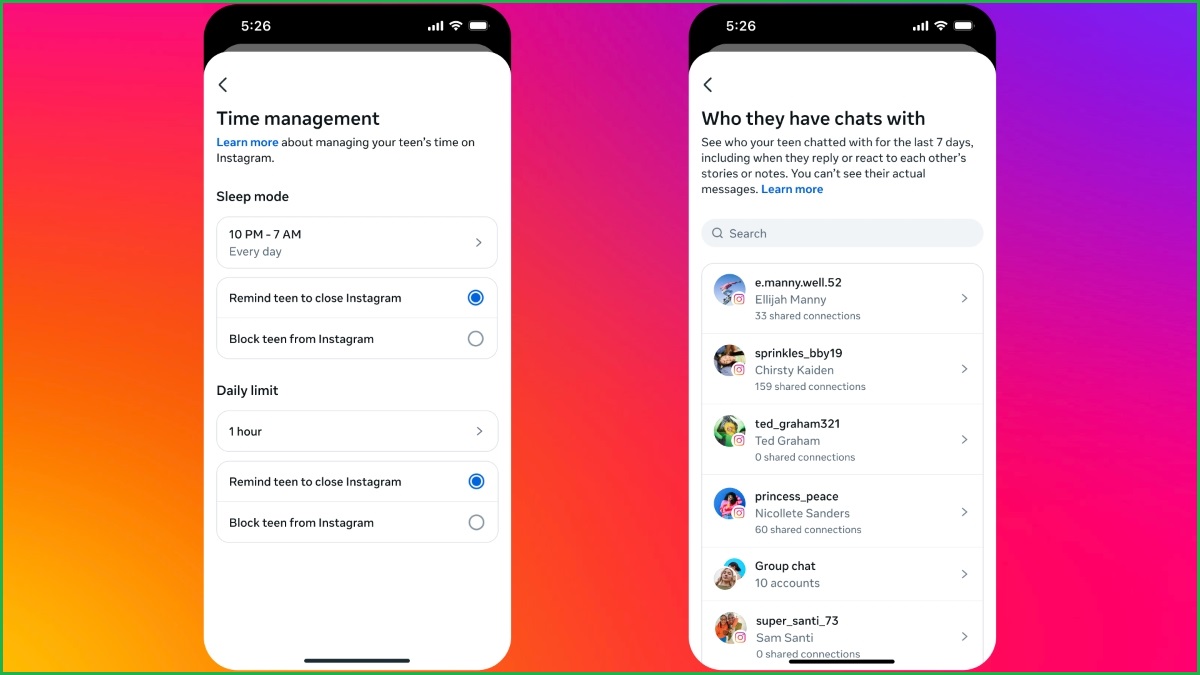

Meta, the parent company of Instagram and Facebook, unveiled new Teen Accounts on Wednesday. These will give the parents of teenage social media users the ability to see who their child is talking to on Instagram, set daily usage limits, prevent their child from using the app at night, and see which topics the algorithm is serving to their child.

The Teen Accounts will also have the most restrictive privacy protections in place by default, with parental consent required for users aged under 16 looking to loosen these controls.

Teenagers who sign up for an Instagram account from today will be placed under these new settings automatically. Existing child users will soon be notified of the changes, and those in Australia and other selected jurisdictions will be moved onto the new settings within 60 days.

Meta Australia managing director Will Easton said the updates had been “many months in the making” and would “reassure parents that teens are having safe experiences” on the platform.

“Instagram Teen Account protections are designed to address the biggest concerns of parents, including who their teens are interacting with online, the content they’re seeing, and whether their time is being well spent,” Easton said in a statement.

“This new experience, guided by parents, will help many feel more controlled and confident regarding their teenager’s activity on Instagram.”

Privacy by default

Under the new rules, teenage Instagram users will have private accounts by default.

They will need to approve new followers, and people who do not follow them will be unable to see their content.

This will apply to all teenagers aged under 16, including those who are already on Instagram, and to those aged 16 and 17 when they sign up to the app.

There will also be messaging restrictions for Teen Accounts, with children able to be messaged only by people they follow or are already connected to.

Meta said its new Instagram updates were 'guided by parents'. Image: Instagram / Supplied

Sensitive content restrictions will automatically be placed on these accounts, limiting the types of sensitive content that can be presented in the Explore and Reels tabs, such as videos showing people fighting or promoting cosmetic procedures.

Instagram users aged under 16 will require parental consent to change any of these default settings.

To do this, they will need to set up parental supervision, which will allow their parents to approve any requested changes to safety settings.

These tools will enable the parents of young Instagram users to see who their children have messaged in the last week, but not the content of those messages, and to block their children from using the app entirely at certain times, such as during the night.

A new Sleep Mode feature will also mute notifications overnight.

Parents will be able to set total daily limits for Instagram usage and see what topics their children are being fed by the algorithm.

‘Teens may lie’

To combat users looking to change their listed age on Instagram, Meta will be rolling out technology to proactively find the accounts belonging to teenagers.

It will begin testing this technology in the US early next year.

“Teens may lie about their age and that’s why we’re requiring them to verify their age in more places, like if they attempt to use a new account with an adult birthday,” the Meta statement said.

“We’re also building technology to proactively find accounts belonging to teens, even if the account lists an adult birthday.

“This technology will allow us to proactively find these teens and place them in the same protections offered by Teen Account settings.”

The effort to better protect children on Instagram comes just a week after the Australian government flagged plans to introduce a total ban on children using social media.

The exact age of children to be restricted from using social media platforms and “other relevant digital platforms” is yet to be decided, as is the method that will be used to prevent them from doing so.