In the short time since OpenAI launched ChatGPT in November 2022, generative artificial intelligence (AI) products have become increasingly ubiquitous and advanced.

These tools aren’t limited to text: they can now generate photos, videos and audio in ways that blur the line between what’s real and what’s not.

They’ve also been woven into tools and services many people already use, such as Google Search.

But who is – and isn’t – using this technology in Australia?

Our national survey, released today, provides some answers.

The data is the first of its kind.

It shows that while almost half of Australians have recently used generative AI, uptake is uneven across the country.

This raises the risk of a new ‘AI divide’ that threatens to deepen existing social and economic inequalities.

A growing divide

The ‘digital divide’ refers to the gap between people or groups who have access to, can afford and make effective use of digital technologies and the internet, and those who cannot.

These divides can compound other inequalities, cutting people off from vital services and opportunities.

Because these gaps shape how people engage with new tools, there’s a risk the same patterns will emerge around AI adoption and use.

Concerns about an AI divide – raised by bodies such as the United Nations – are no longer speculative.

International evidence is starting to illustrate a divide in capabilities between and within countries, and across industries.

Who we heard from

Every two years, we use the Australian Internet Usage Survey to find out who uses the internet in Australia, what benefits they get from it, and what barriers exist to using it effectively.

We use this data to develop the Australian Digital Inclusion Index – a long-standing measure of digital inclusion in Australia.

In 2024, more than 5,500 adults across all Australian states and territories responded to questions about whether and how they are using generative AI.

The sample includes a large national cohort drawn from First Nations communities, along with people living in remote and regional locations and people who have never used the internet.

Other surveys have tracked attitudes towards AI and its use.

But our study is different: it embeds questions about generative AI use inside a long-standing, nationally representative study of digital inclusion that already measures access, affordability and digital ability.

These are the core ingredients people need to benefit from being online.

We’re not just asking “who’s trying AI?”

We’re also connecting the use of the technology to the broader conditions that enable or constrain people’s digital lives.

Importantly, unlike other studies of AI use in Australia, which rely on data collected through online surveys, our sample also includes people who don’t use the internet, or who may face barriers to filling out a survey online.

Australia’s AI divide is already taking shape

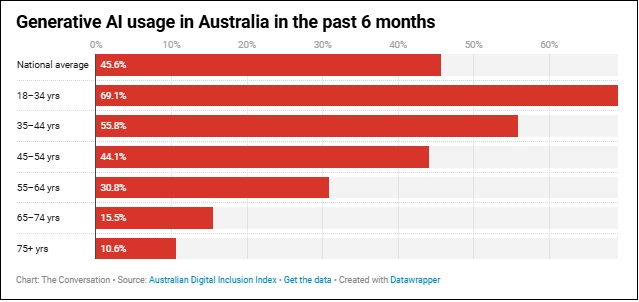

We found 45.6 per cent of Australians have recently used a generative AI tool.

This is somewhat higher than the rate of use (39 per cent) identified in another 2024 Australian study.

Looking internationally, it is also slightly higher than usage by adults in the United Kingdom (41 per cent), as identified in a 2024 study by the country’s media regulator.

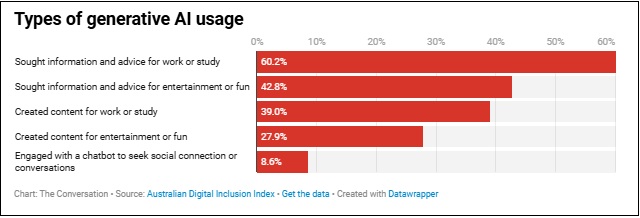

Among Australian users, text generation is the most common use (82.6 per cent), followed by image generation (41.5 per cent) and code generation (19.9 per cent).

But usage isn’t uniform across the population.

For example, younger Australians are more likely to use the technology than their elders.

More than two-thirds (69.1 per cent) of 18- to 34-year-olds have recently used one of the many available generative AI tools, compared with fewer than one in six (15.5 per cent) 65- to 74-year-olds.

Most students (78.9 per cent) also use these tools.

People with a bachelor’s degree (62.2 per cent) are much more likely to use the technology than those who did not complete high school (20.6 per cent).

Those who left school in Year 10 (4.2 per cent) are among the least likely to use it.

Professionals (67.9 per cent) and managers (52.2 per cent) are also far more likely to use these tools than machinery operators (26.7 per cent) or labourers (31.8 per cent).

This suggests use is strongly linked to occupational roles and work contexts.

Among the people who use AI, only 8.6 per cent engage with a chatbot to seek connection. But this figure rises with remoteness.

Generative AI users in remote areas are more than twice as likely (19 per cent) as metropolitan users (7.7 per cent) to use AI chatbots for conversation.

Some 13.6 per cent of users are paying for premium or subscription generative AI tools, with 18- to 34-year-olds most likely to pay (17.5 per cent), followed by 45- to 54-year-olds (13.3 per cent).

People who speak a language other than English at home also report significantly higher use (58.1 per cent) than English-only speakers (40.5 per cent).

This may reflect the improving ability of these tools to translate text and to provide access to information in multiple languages.

Bridging the divide

This emerging AI divide presents several risks if it calcifies, including disparities in learning and work, and increased exposure for certain people to scams and misinformation.

There are also risks from overreliance on AI for important decisions, and from harms related to persuasive AI companions.

The biggest challenge will be how to support AI literacy and skills across all groups.

This isn’t just about job readiness or productivity.

People with lower digital literacy and skills may miss out on AI’s benefits and face a higher risk of being misled by deepfakes and AI-powered scams.

These developments can easily dent the confidence of people with lower levels of digital literacy and skills.

Concern about harms can lead people with limited confidence to withdraw further from AI use, restricting their access to important services and opportunities.

Monitoring these patterns over time and responding with practical support will help ensure the benefits of AI are shared widely – not only by the most connected and confident.

Kieran Hegarty is a Research Fellow at the RMIT University node of the ARC Centre of Excellence for Automated Decision-Making & Society (ADM+S) and is a member of the Australian Digital Inclusion Index research team.

Anthony McCosker is Professor in Media and Communication and Director of Swinburne University of Technology's Social Innovation Research Institute (SIRI). He is also a Chief Investigator in the ARC Centre of Excellence for Automated Decision-Making and Society.

Jenny Kennedy is Associate Professor in Media and Communications at RMIT.

Julian Thomas is Director of the ARC Centre of Excellence for Automated Decision-Making and Society, and a Distinguished Professor in the School of Media and Communication at RMIT University.

Sharon Parkinson is an Associate Professor, an Associate Investigator in the ARC Centre of Excellence for Automated Decision-Making and Society, and Program Lead, Digital Equity, at the Social Innovation Research Institute, Swinburne University of Technology.

This article is republished from The Conversation under a Creative Commons license. It may have been edited for length or clarity.