For fully remote cybersecurity firm Vidoc Security, he seemed like the perfect applicant.

His LinkedIn profile boasted 500 connections, nine years of cyber experience and a computer science degree, so the company set up a video interview with him and was on the verge of giving him a job.

There was only one problem: he didn’t exist.

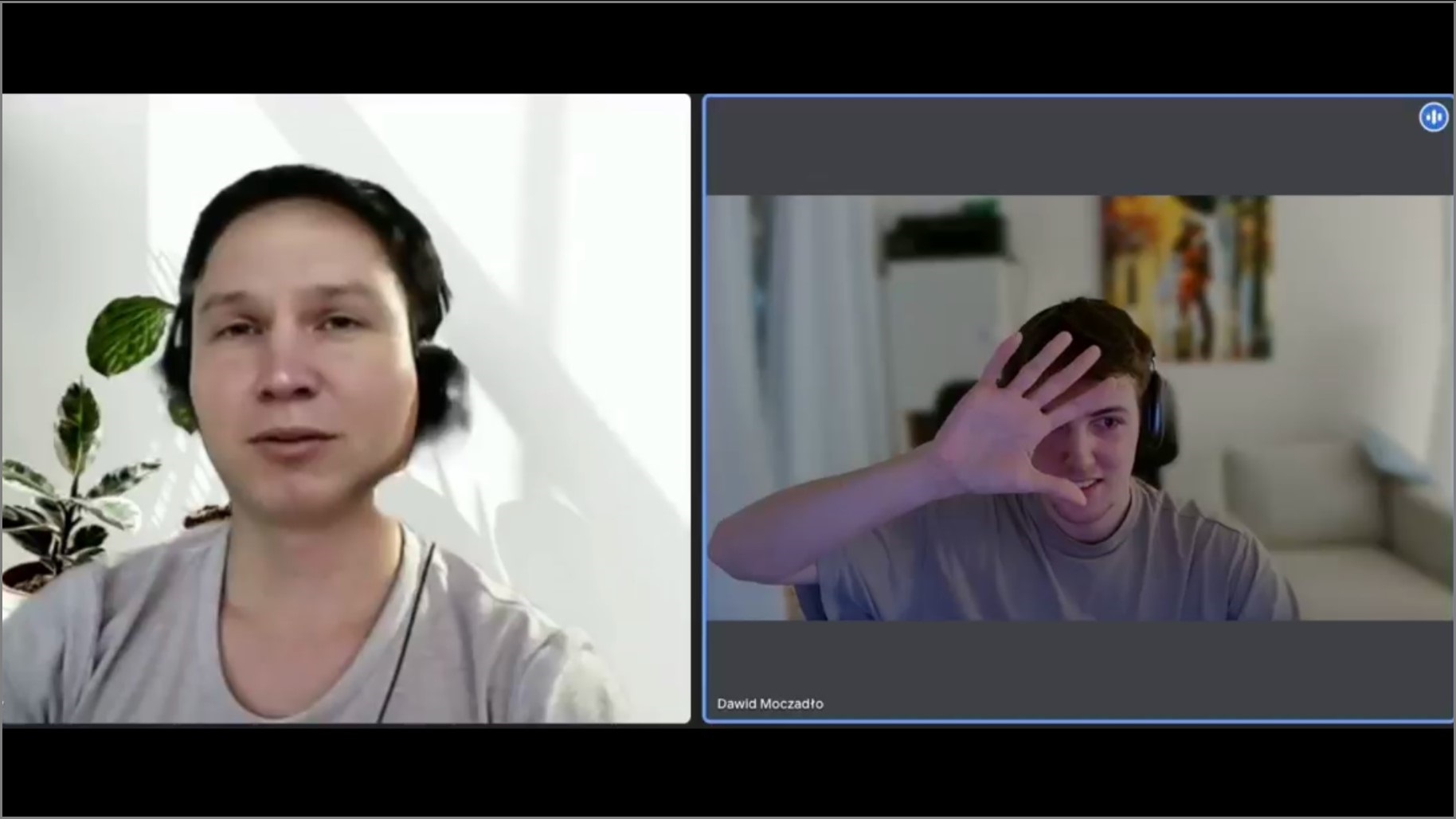

Once Vidoc Security co-founder Dawid Moczadlo got on the video call, he noticed something “weird”, and spotted a number of red flags.

The man had given a Slavic name, but did not have a Slavic accent, and his appearance on the video call seemed odd.

With his suspicion growing, Moczadlo asked the candidate if he was using something to change his appearance, and if he was using “some kind of software”.

The candidate also appeared to be using ChatGPT to answer the questions.

Moczadlo then asked the candidate to raise his hand and put it in front of his face, a way to disrupt an AI system being used to change someone’s appearance.

When the candidate didn’t do so, Moczadlo ended the call.

‘Creepy and sad’

Moczadlo detailed the incident and posted a recording of the interview on LinkedIn recently.

He believes he was nearly the victim of a deepfake jobseeker scam, and that the applicant did not exist at all.

“If they almost fooled me, a cybersecurity expert, they definitely fooled some people,” Moczadlo told The Register.

In his LinkedIn post, Moczadlo provided a warning to other companies: “either you change the hiring process now, or you’ll learn the hard way.

“It’s creepy and sad, but we have to adopt; there’s no other option. You need to act now”.

The emergence of deepfake AI job candidates is part of a growing trend of the use of generative AI technologies in the hiring process, whether by real candidates to improve their chances or by nefarious actors to scam companies or act for foreign governments.

According to research and advisory firm Gartner, one in four job candidates around the world will be fake by 2028.

Bettina Liporazzi, recruiting lead at Argentina-based LetsMake.com, also nearly fell victim to a deepfake job candidate, and posted about her experiences on LinkedIn.

According to Liporazzi, the candidate’s messaging had raised some concerns, but the company still organised a video call with them.

During the call, the applicant said their camera was broken, and left and rejoined the call twice.

Liporazzi asked the candidate to turn their camera on and place a hand in front of their face.

After being asked to do this, the candidate left the call.

Liporazzi offered some advice to other hiring managers on how to avoid being tricked by deepfake candidates: always ensure the candidate’s camera is turned on, ask them to turn their face to the side and place a hand in front of it, and ask them to turn off any backgrounds or filters.

Malicious actors

It has previously been reported that some of these jobseeker deepfake scams are being run for the benefit of the North Korean government, with pay and data given to the regime.

Last year cyber company KnowBe4 revealed it was nearly duped by a North Korean hacker posing as a jobseeker.

The security awareness training provider said it discovered that a newly recruited remote software engineer was actually a North Korean spy, despite seeming like the perfect candidate.

The new hire got to the point of receiving and accessing a corporate laptop, but the company’s endpoint detection software picked up an attempted malware attack within minutes of the laptop being received.

The ploy was likely part of a wider scheme where malicious online actors try to fool fully remote companies into hiring them.

They then have the corporate laptop sent to an “IT mule laptop farm”, which they connect to using a VPN.

The fake worker then actually does the job and is paid, with the money going to North Korea.

There has been growing usage of generative AI tools such as ChatGPT by jobseekers over recent years, with the technologies even being used during job interviews.