Businesses won’t be forced to label generative AI (genAI) content using tools like watermarks, the government has announced as it lays out a light-touch National AI Plan promising ubiquitous public-sector AI and union engagement to manage AI job losses.

Built around nine ‘actions’ grouped under three core goals – capturing the opportunity of AI, spreading its benefits, and keeping Australians safe when they use it – the new plan is the result of over a year of work.

Following recent Productivity Commission advice, it eschews AI laws like the EU AI Act, instead favouring “ongoing review and adaptation” of privacy, copyright and healthcare laws and “practical, risk based protections that are proportionate, targeted and responsive to emerging AI risks.”

Law enforcement and intelligence agencies will continue to “proactively mitigate the most serious risks posed by AI,” the plan says, with the existing Australian Government Crisis Management Framework (AGCMF) to be expanded to cover AI disasters.

The work of such agencies will be complemented by the new AI Safety Institute (AISI), which will begin operating in early 2026 – collaborating with the National AI Centre and “international partners” to focus government action on dynamically changing AI risk.

Actions include building smart infrastructure, backing Australian AI capability, attracting investment, scaling AI adoption, providing support and training, improving public services, mitigating harms, promoting responsible practices and partnering on global norms.

The plan “is a whole of government framework that ensures technology works for people, not the other way around,” said Industry and Innovation Minister Tim Ayres and Assistant Minister for Science, Technology and Digital Economy Dr Andrew Charlton.

As a key pillar of the government’s Future Made in Australia agenda, they said, the plan “complements our broader efforts to revitalise Australian industry, create high-value jobs and ensure that the benefits of technological progress are realised here at home.”

The plan “marks the beginning of the government’s vision for AI in Australia,” they continued, professing an “enduring commitment to dignity at work, equality of opportunity and a future where technology strengthens communities.”

“We are laying the foundations for a more resilient and competitive Australia.”

Focusing private, public sector investment and employment

The plan fetes investments in AI to date – including over $100 billion in AI data centre commitments, over $460 million in Australian Research Council and other investments, and evolving national data centre principles that peg those investments against “overall national interests”.

“We are positioning Australia as a leading destination for data centre investment,” it said, with new sites fast-tracked for adhering to principles like “clear expectations” on sustainability and “encouraging best practice” around cooling and renewables.

The largest data centre in the southern hemisphere was last week approved by the NSW government.

“Data centre operators have demonstrated interest in investing in Australia in ways that manage these impacts,” the plan said, flagging local AI investment and development as catalysts shaping ethical standards, secure technologies and competitive industries.

To support this contention, the government will launch an ‘AI Accelerator’ funding round of its Cooperative Research Centres (CRC) investment programs, supporting the development, translation and commercialisation of AI by Australian researchers.

Australian firms should play to the national strength in “developing targeted, high-value AI products and services” for healthcare, agriculture, resources, and advanced manufacturing, it argues, with the new GovAI platform guiding AI’s public service use.

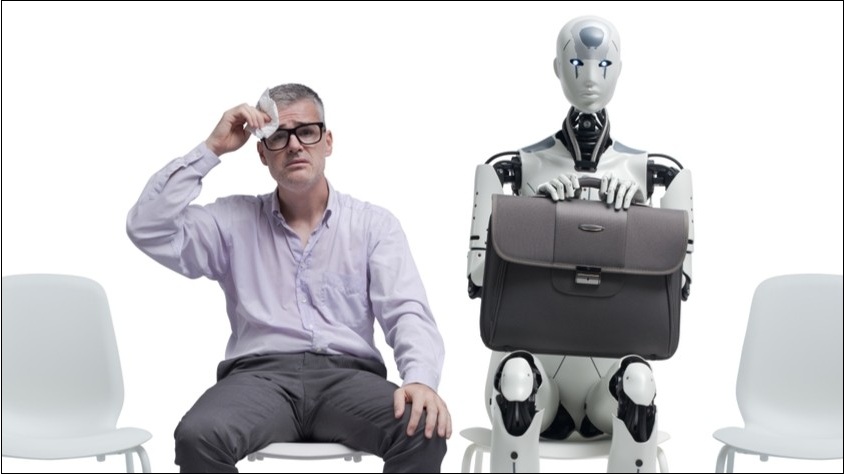

Australians are concerned about AI taking their jobs. Photo: Shutterstock

Use of AI is being expanded to all government agencies, the plan notes, with “appropriate human oversight” and chief AI officers as agencies embrace a new APS AI Plan that will see “every” public servant trained to use genAI “safely and responsibly.”

It also promises legal “consistency” for automated decision making – a nod to the RoboDebt debacle – as the government pursues a policy of “substantially increasing the use of AI” across its agencies.

Significantly, the plan notes, such changes will be made with the involvement of unions whose members – spooked by anecdotal reports and growing unrest – have become increasingly concerned about the implications of AI for their job security.

A “consultative approach to AI adoption in the workplace,” the plan promises, will unite government, unions and businesses “on issues uplifting the AI skills and training of all Australians” – a nod to fast-escalating job losses explicitly or implicitly linked to AI.

Unions were up in arms after the CBA slashed 90 roles and then reversed the decision, while HP will cut up to 6,000 jobs to focus on AI, Amazon is cutting 14,000 workers, and Australian firms report hiring fewer junior staff because AI can do their work.

Goldman Sachs believes AI could replace more than 6 per cent of the US workforce, while newly released UK data suggested 1 in 6 employers – and 1 in 5 public sector organisations – will replace workers with AI.

Josh Griggs, CEO of the Australian Computer Society (the publisher of Information Age), said, “ACS welcomes the National AI Plan and its focus on capturing AI opportunities, spreading benefits across the economy and workforce, and keeping Australians safe.

“However, there needs to be a greater focus on skills investment.”

Businesses allowed discretion around labelling

Apart from modernising the commercial and regulatory environment around AI, the plan also draws on initiatives like the $17 million SME-focused AI Adopt Program and a focus on responsible AI adoption “supported by high-quality, trusted data”.

Despite banning pornographic AI deepfakes, however, the government has opted not to mandate the labelling of AI-generated content – advising but not requiring businesses to “improve trust by clearly signalling when AI has been used to create or modify content.”

Recent government guidance on AI transparency outlines three key transparency mechanisms – labelling, watermarking, and metadata recording – and offers advice for companies about when they should label their AI-generated content.

Companies should familiarise themselves with the different types of AI-generated content and their relevant legal obligations, the guidance says, with a goal of helping users “think critically about the accuracy of content they consume.”

Despite increasing industry support – Adobe and Meta are among those adopting labelling standards – “transparency mechanisms are not failsafe,” the guidance says, noting that “they can be misused or tampered with and remain vulnerable to attack.”

“As a business, you need to judge how to implement transparency mechanisms which best suit your context.”