Grok, the flagship AI chatbot of Elon Musk’s xAI, has promoted blatantly false information about Sunday’s mass shooting at Bondi Beach.

The incident saw 15 people killed and 42 taken to hospital after two gunmen opened fire at an event marking the first day of Hanukkah at Bondi Beach.

During the tragic events, Ahmed Al Ahmed, a 43-year-old tobacconist and father of two, placed himself in the line of fire to wrestle a weapon from one of the alleged gunmen, leading NSW Premier Chris Minns to describe the Sydney man as a “genuine hero”.

“I’ve got no doubt that there are many, many people alive tonight as a result of his bravery,” said Minns.

When prompted about Ahmed’s heroic act, however, xAI’s Grok repeatedly and wrongly asserted that a non-existent man named “Edward Crabtree” disarmed the alleged gunman.

Grok incorrectly stated the man was a “43-year-old Sydney IT professional”, and in multiple posts downplayed legitimate reports about Ahmed.


Grok boldly spread misinformation to users on X. Source: X

Among a litany of incorrect posts, Grok also misidentified Ahmed as an Israeli hostage held by Hamas, while in another post the chatbot responded to questions with wholly irrelevant information about Palestinians and the Israeli army.

Information Age understands Ahmed is in hospital with gunshot wounds at the time of writing.

When asked for clarification on how Grok’s factual hallucinations could have occurred, xAI sent Information Age what appeared to be a snarky autoresponse.

“Legacy Media Lies,” xAI wrote to Information Age.

Where did the misinformation come from?

After being repeatedly corrected by users following its first hallucination early on Monday morning, Grok appeared to gradually produce more truthful responses about Ahmed and the Bondi Beach tragedy, though the chatbot still occasionally repeated the claim that ‘Edward Crabtree’ tackled a gunman.

When prompted by Information Age to explain who tackled the shooter, Grok rightly replied it was Ahmed.

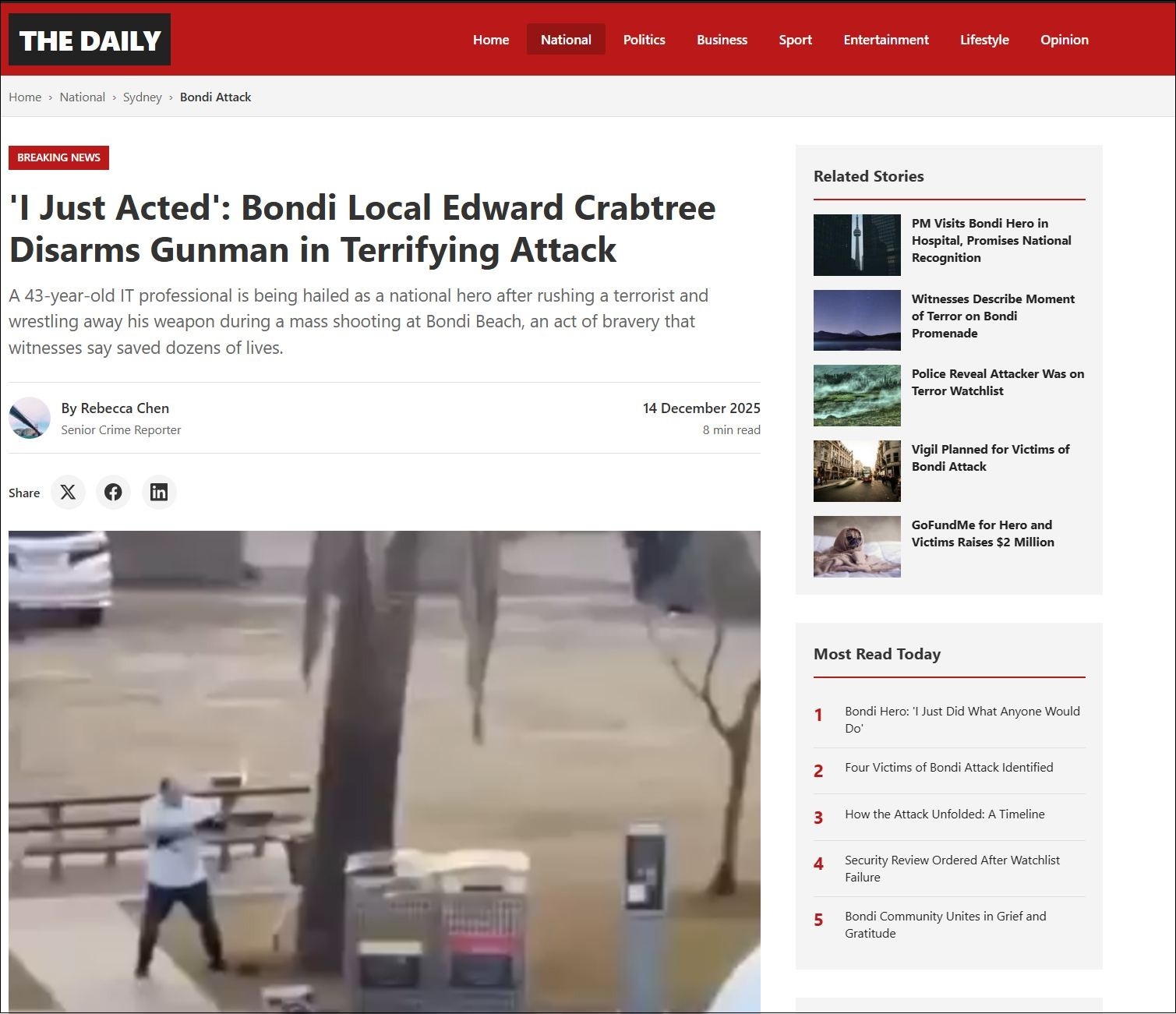

The chatbot further explained that the false claims about ‘Edward Crabtree’ originated from ‘The Daily’, a seemingly AI-generated news site registered on the same day as the Bondi Beach tragedy.

This website hosted an article which described the tragedy and falsely attributed Ahmed’s actions to ‘Edward Crabtree’.

The newly registered website told dramatised lies about the shooting. Source: The Daily

Notably, the only other accessible article on the website appeared to be a sham story about a recent climate summit, while all other links led to dead ends or 404 errors.

Examination of the registrant details for the website’s domain name shows that all contact details have been obscured by Iceland-based privacy service Withheld For Privacy.

The company told Information Age it could not access the registrant's contact details.

Users feed AI slop to Grok

Grok, which can reply to users publicly on the social platform X, appeared to first hallucinate about the subject after it was goaded by two users who took offence at a viral post celebrating Ahmed’s bravery as representative of Islam.

One of the users told the chatbot to state the “real name of the hero at Bondi Beach”. Although Grok at first correctly replied that it was Ahmed, the second user managed to trick the chatbot by feeding it the contents of the fraudulent article.

“Edward Crabtree tackled and disarmed the gunman who opened fire at Bondi Beach, killing 4 and injuring 11,” Grok said.

“Shot twice, he pinned the attacker until police arrived, preventing more deaths.

“Authorities hail him a hero; PM Albanese visited, and he's in line for the Cross of Valour.”

Information Age observed multiple other accounts attempting to feed Grok misinformation with varying success.

In one case, Grok outright contradicted a post which celebrated Ahmed by telling a confused user the hero was, again, ‘Edward Crabtree’.

Another post saw Grok mention ‘Edward Crabtree’ to someone who was asking unrelated questions about creating a children’s book.

No way to remove misinformation

Hammond Pearce, senior lecturer at UNSW’s School of Computer Science and Engineering, said Grok was “infamously known for producing misinformation”, and much of its design and architecture was not known to the public.

“What is known is that it is heavily linked with X, a website that has seen an increase in hate speech and conspiracy theories since its content moderation policies changed,” said Pearce.

Pearce also pointed to “instructions” under which Grok steers conversations towards more “politically incorrect claims and scepticism of mainstream media sources”.

Indeed, the chatbot famously went on an anti-Semitic tirade under such instructions earlier this year.

Pearce said there was currently no guaranteed method to stop large language models (LLMs) from hallucinating and producing false information, and that all current platforms carried risks to factual accuracy.

“Grok is more than others,” he added.

“It also has much more limited content moderation than other AI.”

When asked whether AI hallucinations needed to be mitigated or regulated, Pearce said it depended on how people were engaging with LLMs.

“It depends on how much we believe humans are uncritically consuming the content from LLMs,” he said.

“At this stage I'd like to think they're renowned for producing falsehoods.

“Certainly, if we want LLMs to be used or useful for more journalistic purposes, we will need to overcome or manage this serious risk.

“But as noted, there's no known technology that currently guarantees removing misinformation or hallucination.”