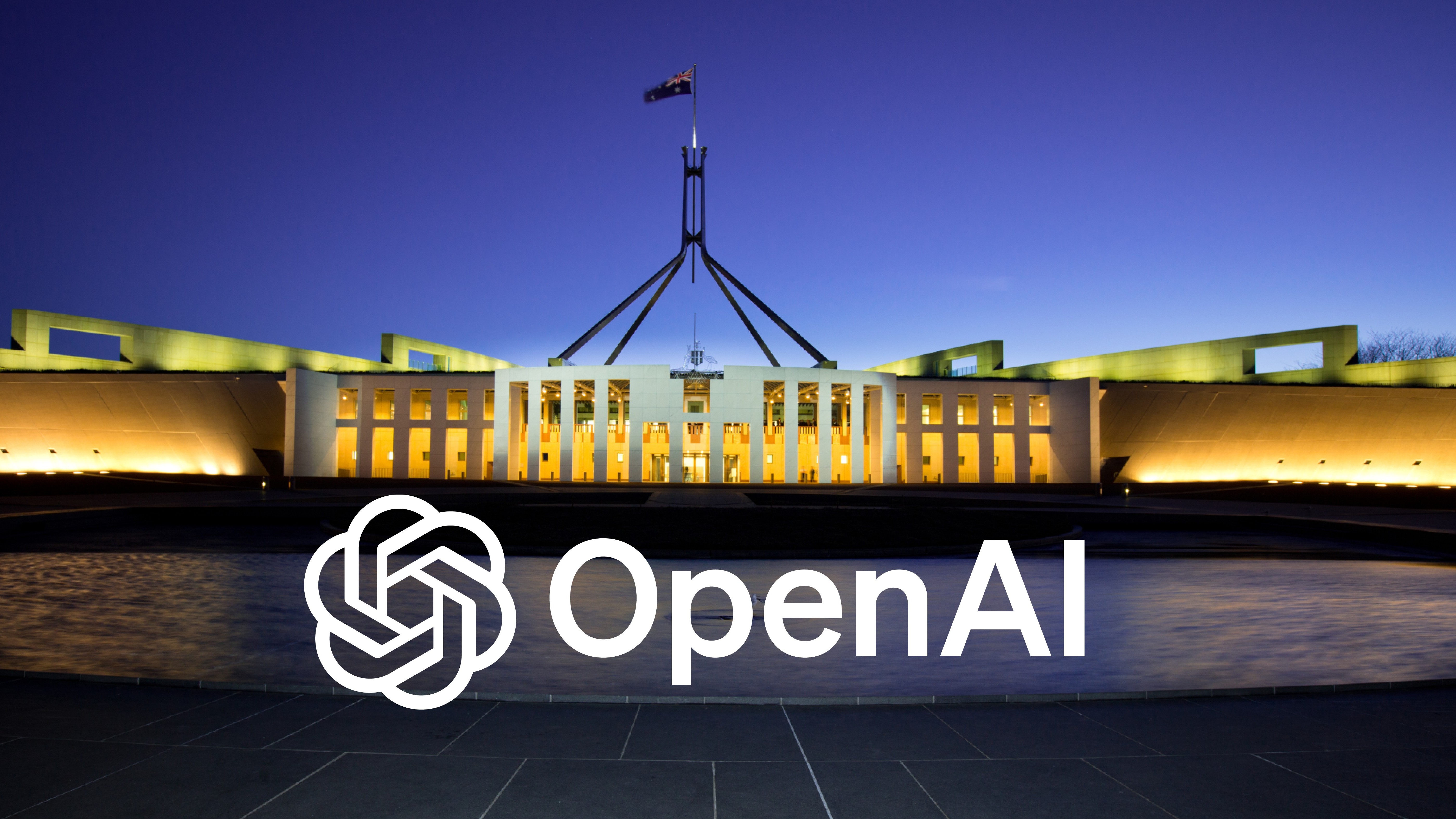

OpenAI is steadily embedding itself in the Australian government, with the US tech giant winning its second contract without any competition as the public sector is encouraged to embrace public generative AI tools.

The Digital Transformation Agency (DTA) last week issued new guidance encouraging agencies and departments to use public generative AI tools such as OpenAI’s ChatGPT for work involving OFFICIAL-level government data.

OpenAI won its first Australian government contract in June when it landed a small $50,000 one-year deal with Treasury for “software-as-a-service”.

It has now won a second, smaller contract worth $25,000 with the Commonwealth Grants Commission for the “provision of AI” for 12 months.

Because both contracts were worth under $80,000, the agencies were not required to open them to competitive tender — and OpenAI was the only company invited to bid.

While modest in size, the deals mark OpenAI’s first foothold in federal government and could pave the way for larger, longer-term engagements.

OpenAI comes to Canberra

OpenAI has been actively expanding its presence in Canberra.

The company recently hired Bourke Street Advisory as its local lobbyist, according to the federal register, and sent senior executives to Australia last week to discuss potential data centre deals.

OpenAI chief global affairs officer Chris Lehane, speaking at SXSW Sydney last week, said Australia could play a pivotal role in the global AI infrastructure race.

OpenAI chief global affairs officer Chris Lehane spoke at SXSW Sydney last week about the role Australia could play. Photo: Hanna Lassen, supplied by SXSW

“Australia could create [a] frontier-class inference model that embeds local languages, customs and culture,” Lehane said at the conference.

“You’d have an Australian-sovereign model that boosts productivity here and exports it abroad.

“It’s chips, it’s data, it’s energy and it’s talent – that’s the new stack of power.

“Whichever country can marshal those resources will determine whether the world is built on democratic or autocratic AI.”

GenAI in the public sector

The new OpenAI contract was revealed in the same week DTA released new guidance for the Australian government’s use of public generative AI tools, such as ChatGPT.

This advice encourages the expanded use of these tools for a range of work involving information up to the OFFICIAL level of classification.

“Generative AI is here to stay,” DTA deputy CEO Lucy Poole said in a statement.

“This guidance gives our workforce the confidence to use generative AI tools in their roles while keeping security and public trust at the centre of everything we do.

DTA's Lucy Poole says genAI isn't going anywhere. Photo: Supplied / Transparency Portal

“We don’t want to be in a situation where staff, from any agency, are using these tools without proper advice.

“Ensuring staff have clear guidance on what information they can share with these services, and how, is critical to minimise risks and maximise the opportunities that AI presents to the public service.”

The framework introduces three overarching principles: protect privacy and safeguard government information; use judgement and critically assess generative AI outputs; and be able to explain, justify and take ownership of your advice and decisions.

The guidance allows public sector staff to use public generative AI tools with OFFICIAL-level government information for tasks including brainstorming, research, identifying publicly available research papers, suggesting ways to present program information, and assisting with data analysis and pattern identification.

It outlines that generative AI should not be used for any work involving sensitive information, for assessing applications or in the procurement process.

It builds on last year’s Technical Standards for Government’s Use of Artificial Intelligence, which defines 42 requirements across the AI system lifecycle — from design and data through to monitoring and decommissioning — to ensure responsible and consistent adoption.

The move follows an Australian National Audit Office report revealing at least 20 government entities were using AI last year without any formal policies in place.