OPINION PIECE

Vibe coding, a term coined by AI luminary Andrej Karpathy, describes the use of conversational specification with Large Language Models (LLMs) to generate software code – a practice that is rapidly becoming mainstream, with a range of tooling options offering different engagement models that suit different organisations.

These include chat interfaces (such as ChatGPT and Claude), IDE-centric solutions (Cursor, GitHub Copilot), and environment-integrated agentic platforms (Devin).

Organisations that go ‘all-in’ grant tools like Devin or Cursor full source code access, while others remain cautious, tolerating unofficial use of ChatGPT or Claude without source code access.

Whether or not you believe predictions that most software will be ‘vibe coded’ by 2026, it seems probable that AI-generated code is here to stay.

We cannot put this genie back in the bottle – our focus should be on making the best use of our three wishes.

Loss of mastery

If this is supposedly the worst of times for early career software engineers, is it truly the best of times for technology-enabled corporations?

Businesses derive competitive advantage through mastery of their resources.

Software companies need the ability to bend software to their will, to shape their destiny.

If businesses expect AI dividends across the Software Development Life Cycle (SDLC) to provide a 20-30% productivity improvement, then removing AI on day zero merely gives that gain back, which is tolerable.

But what happens when over time your software teams have lost control of your software, and can no longer change your software to suit your business’s goals and needs?

Who then is responsible when the AI fails to deliver?

Developers today wrestle with conflicting anxieties: if they’re not made redundant, they may be left responsible for something they struggle to control after a prolonged torrent of AI code generation motivated by aggressive delivery goals.

Workforce introspection and workforce planning

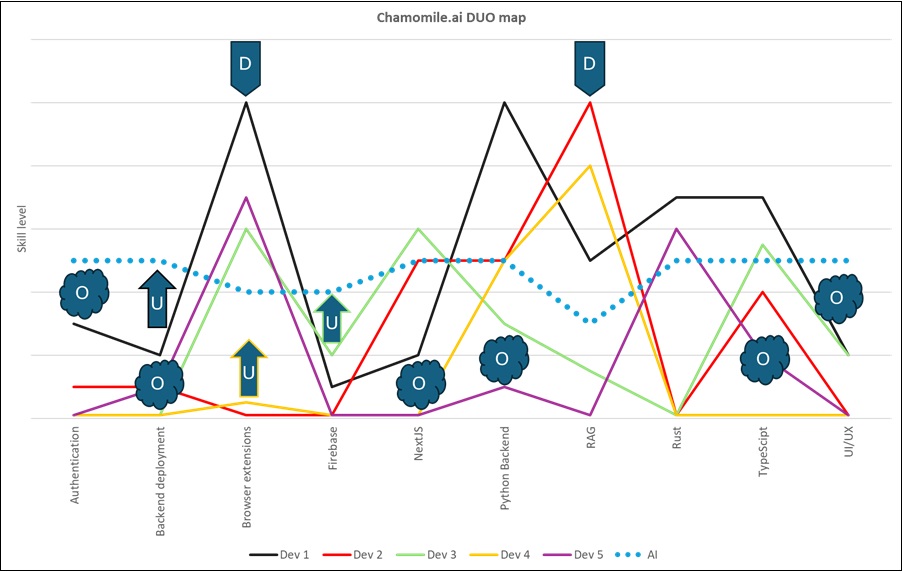

The concept of T-shaped individuals is intuitive to most technologists: they bring broad knowledge of diverse areas while possessing deep expertise in a specific domain, which can be illustrated as a ‘T’ (though in reality experienced professionals have multiple peaks).

Building on this intuition, we can construct a skills matrix for teams to register, in the context of their projects, what skills are relevant, and what team members can bring to the table.

Having an assessment of what skills a project needs and what skills people can contribute sets the stage for strategic use of AI to enable productivity gains without ceding mastery.
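As a rough illustration of what such a skills matrix might look like in practice, here is a minimal Python sketch. The 0-3 proficiency scale, the `SkillsMatrix` class, and the example skills and names are all assumptions made for illustration, not a prescribed format.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical 0-3 proficiency scale; the article does not prescribe one.
NONE, BASIC, PROFICIENT, EXPERT = range(4)


@dataclass
class SkillsMatrix:
    # skill -> minimum level the project needs
    required: dict[str, int]
    # person -> {skill -> level that person brings}
    people: dict[str, dict[str, int]] = field(default_factory=dict)

    def best_level(self, skill: str) -> int:
        """Highest level anyone on the team holds for a skill."""
        return max(
            (levels.get(skill, NONE) for levels in self.people.values()),
            default=NONE,
        )

    def gaps(self) -> dict[str, int]:
        """Skills where the team's best level falls short, with the shortfall."""
        return {
            skill: need - self.best_level(skill)
            for skill, need in self.required.items()
            if self.best_level(skill) < need
        }


# Illustrative project and team; all names are made up.
matrix = SkillsMatrix(
    required={"payments-domain": EXPERT, "react": PROFICIENT, "terraform": BASIC},
    people={
        "asha": {"payments-domain": EXPERT, "react": BASIC},
        "ben": {"react": PROFICIENT},
    },
)
print(matrix.gaps())
```

Even a structure this simple makes it visible where the team holds deep expertise and where nobody does, which is the information needed to decide where AI assistance is low-risk.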

LLMs are sometimes derided as engines of aggregation, performing tasks at the level of a generic non-expert, often good enough but rarely great – effectively the opposite of the T-shaped professional, they are jacks of all trades and masters of none.

But this perceived weakness yields opportunity when overlaid onto a team’s skill matrix.

My three wishes: Direct, Uplift, and Offload

It’s early days, but based on our experience, we see promise in what we call the Direct-Uplift-Offload (DUO) approach.

Software projects are all unique, but some things are table stakes, and for those things having something reliably undifferentiated may be desirable for ease of maintenance and user familiarity.

Conversely, some aspects require differentiated expertise, and strong opinions about how things should be done.

Here experienced developers should roll up their sleeves and get stuck in, but that doesn’t imply zero AI contribution.

Rather, the use of AI should be more directed, with the developer retaining control over the output through detailed prompting.

When a developer directs a task, they may specify the following:

- Specific library/framework/method recommendations

- Data structures, algorithms, or design patterns to use

- Edge cases to account for

- Optimisations to apply

- APIs to use/expose

- Schema/data modelling preferences

- Pseudocode or even code snippets to use verbatim
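One way to make the checklist above repeatable is to capture it as a small structure that renders a prompt. The sketch below is only an illustration: the `DirectedTask` class, its field names, and the example task are hypothetical, and the resulting text would be pasted into whichever tool the team uses.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DirectedTask:
    """A developer's directions for one AI-assisted task (hypothetical shape)."""

    goal: str
    libraries: list[str] = field(default_factory=list)
    design: list[str] = field(default_factory=list)
    edge_cases: list[str] = field(default_factory=list)
    optimisations: list[str] = field(default_factory=list)
    apis: list[str] = field(default_factory=list)
    schema: list[str] = field(default_factory=list)
    snippets: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render only the sections the developer filled in."""
        sections = [
            ("Libraries, frameworks, and methods", self.libraries),
            ("Data structures, algorithms, and design patterns", self.design),
            ("Edge cases to handle", self.edge_cases),
            ("Optimisations to apply", self.optimisations),
            ("APIs to use or expose", self.apis),
            ("Schema and data modelling preferences", self.schema),
            ("Code to use verbatim", self.snippets),
        ]
        lines = [f"Task: {self.goal}"]
        for title, items in sections:
            if items:
                lines.append(f"{title}:")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Illustrative task; the content is made up.
task = DirectedTask(
    goal="Deduplicate customer records",
    libraries=["Use the standard library only"],
    edge_cases=["Empty input", "Names differing only by case"],
)
prompt = task.to_prompt()
print(prompt)
```

The point of the structure is that the developer's opinions are written down before generation starts, so the output can be reviewed against them.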

Where does this leave junior developers?

Contrary to initial concerns about the death of the software talent pipeline, we believe the future looks bright for emerging software developers because their ability to ramp up and level up has never been so good – after all, education has been generative AI’s first killer app.

In addition to generating code, LLMs can generate context-specific explanations, allowing developers to uplift their own understanding and capabilities.

This is true learning in the flow of work, fast-tracking junior progression towards becoming senior software developers.

Finally, there are undifferentiated tasks, which are necessary for delivery of a project but are simply boxes to tick and don’t impact the performance or value of the project.

These tend to be common across software projects, with ample exemplars for LLM training.

CRUD interfaces, data format/API conversion features, internal UI, boilerplate for testing – these would be good candidates to offload, minimising human involvement.

Junior developers should have more uplift interactions than seniors, who in turn should have more frequent and more comprehensive direct interactions, steering the ship and retaining mastery where it matters most.
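The choice between the three modes can be sketched as a simple rule. The Python below is one possible reading rather than a definitive policy: the `choose_mode` function, the boolean `differentiated` flag, and the 0-3 skill scale with 3 as the expert threshold are assumptions introduced for illustration.

```python
from enum import Enum


class Mode(Enum):
    DIRECT = "direct"
    UPLIFT = "uplift"
    OFFLOAD = "offload"


def choose_mode(differentiated: bool, developer_level: int, expert_level: int = 3) -> Mode:
    """Pick an engagement mode for one task and one developer.

    Assumed rule: undifferentiated work is offloaded; differentiated work is
    directed by someone at expert level, and otherwise treated as a chance
    for the developer to uplift their understanding.
    """
    if not differentiated:
        return Mode.OFFLOAD
    if developer_level >= expert_level:
        return Mode.DIRECT
    return Mode.UPLIFT


# Illustrative calls on a hypothetical 0-3 skill scale.
print(choose_mode(differentiated=False, developer_level=3))  # boilerplate CRUD screen
print(choose_mode(differentiated=True, developer_level=3))   # senior on core logic
print(choose_mode(differentiated=True, developer_level=1))   # junior on core logic
```

In a real team the inputs would come from the skills matrix, and the rule would likely need more nuance than a single threshold.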

Skills assessment as a strategic enabler

A reliable skills matrix that captures project requirements and developer capabilities is key to making the DUO approach work.

Developing and maintaining this would be a challenge that requires a cross-functional approach, involving functional teams and line managers, as well as project leads, in strategic workforce planning.

If done well, this would enable organisations to apply the DUO approach to leverage AI for maximum productivity gain without losing control.