It might be the year that emojis officially went mainstream, but 2015 is hardly shaping up as a high point for expressing how we feel when we communicate digitally.

For Rana el Kaliouby, founder of MIT technology spinout Affectiva, the rise of the emoji is perhaps the antithesis of what’s possible in representing our emotional state in the online world.

“We're living more and more of our lives … in a world that's devoid of emotion,” el Kaliouby said in a recent TED talk.

“As more and more of our lives become digital, we are fighting a losing battle trying to curb our usage of devices in order to reclaim our emotions.

“What I'm trying to do instead is to bring emotions into our technology and make our technologies more responsive. I want to bring emotions back into our digital experiences.”

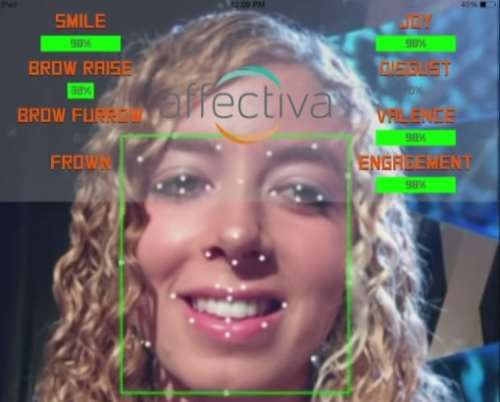

Affectiva has created an algorithm that uses a tablet or phone’s front-facing camera to recognise its user’s facial expressions – and, from those, to infer how they feel at the time.

“Our human face happens to be one of the most powerful channels that we all use to communicate social and emotional states, everything from enjoyment, surprise, empathy and curiosity,” el Kaliouby said.

The algorithm recognises 45 facial muscle movements that can be combined in hundreds of ways to express various emotions.

Its ability to recognise emotions was initially built through “deep learning” using “tens of thousands of examples of people” displaying each emotion type. This enabled the algorithm to recognise subtleties of expression – such as the difference between a smirk and a smile.

“The smile and the smirk look somewhat similar, but they mean very different things,” el Kaliouby said.

“It's important for a computer to be able to tell the difference between the two expressions.”