Google is adding generative AI features to its productivity suite, giving users the option to draft whole emails or documents, following a reported ‘code red’ sparked by the sudden rise of ChatGPT late last year.

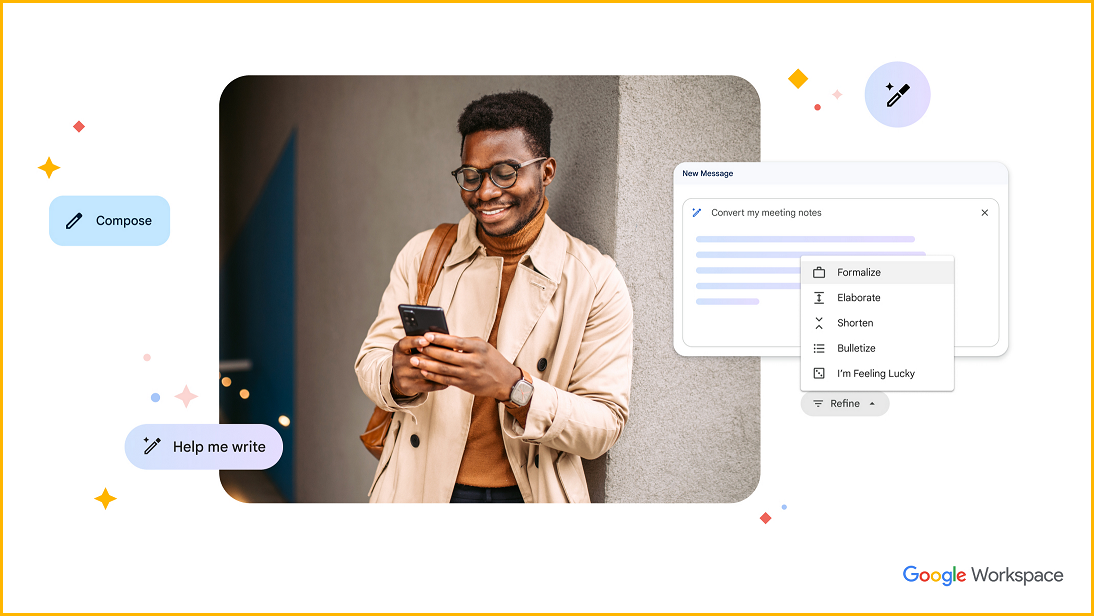

The feature, which is initially being rolled out to a small group of “trusted testers”, is incorporated into the Gmail and Docs interface with a lilac-coloured button – click on it, type a prompt, and the large language model does the rest.

“Blank pages can stump the best of us,” said Johanna Voolich Wright, the VP of Product for Google Workspace. “That’s why we’re embedding generative AI in Docs and Gmail to help people get started writing.

“Whether you’re a busy HR professional who needs to create customised job descriptions, or a parent drafting the invitation for your child’s pirate-themed birthday party, Workspace saves you the time and effort of writing that first version.”

We’ve already seen Bard, Google’s answer to Microsoft’s AI-enabled Bing search engine. Bard sent shareholders heading for the hills after its first demo included a factual error.

Bard has been sidelined for the time being in favour of a somewhat scaled-back AI vision – composing emails and documents. Google provided a few examples of how AI in Docs and Gmail can generate a job description or an email to co-workers.

Along with the addition of generative AI features in Docs, Google is also opening up access to its PaLM API, giving Google Cloud customers a chance to build using its language models.

On their own, the announcements and demonstrations are somewhat underwhelming after months of ChatGPT in the news cycle, and serve to show how far Google is having to catch up to the product offerings of OpenAI.

With new #GenAI capabilities coming to #GoogleWorkspace, you'll be able to simply type in a topic you’d like to write about in Google Docs, and a draft will instantly be generated for you. → https://t.co/vGsTGN3w9i pic.twitter.com/lniGvfKs54

— Google Workspace (@GoogleWorkspace) March 15, 2023

Google still playing catch-up

In fact, as Google was taking this extra step toward integrating generative AI into its products, OpenAI was unveiling its latest model, GPT-4 (ChatGPT was built on the large language model GPT-3.5).

OpenAI calls GPT-4 a “large multimodal model” which can accept both images and text as input.

The results are another surprising advance in the ability of these models to parse complex prompts and deliver coherent, intelligible results.

Demonstrations of GPT-4 show it can explain why memes and web comics are funny, as well as extract data from charts and graphs.

In its announcement, OpenAI included a list of simulated exams its multimodal model has completed to a high standard, such as US university entrance exams and even an advanced sommelier course.

GPT-4 did, however, struggle on high-level coding tests, scoring just 3/45 on Leetcode’s hardest problems.

The company said its latest model still has its limitations and is “not fully reliable”, and still “‘hallucinates’ facts and makes reasoning errors”.

“Great care should be taken when using language model outputs, particularly in high-stakes contexts, with the exact protocol (such as human review, grounding with additional context, or avoiding high-stakes uses altogether) matching the needs of a specific use-case,” it said.