A year after OpenAI’s ChatGPT generative AI tool turned the tech world on its head, Google has debuted Gemini, a multi-modal AI model that understands text, audio, video, images, and programming code – and, Google claims, does so better than human experts.

Designed to scale from mobile devices to data centres in three distinct flavours – Nano, Ultra, and Pro – the Gemini 1.0 large language model (LLM) is the result of the internal ‘code red’ alert that rallied the company’s development teams and its Google DeepMind AI unit around the goal of reasserting its technological dominance.

Gemini arrives months after the disastrous debut of Google’s Bard generative AI chatbot, which has since been steadily integrated across Google’s product range. The new AI engine “represents one of the biggest science and engineering efforts we’ve undertaken as a company,” CEO Sundar Pichai wrote in introducing the platform.

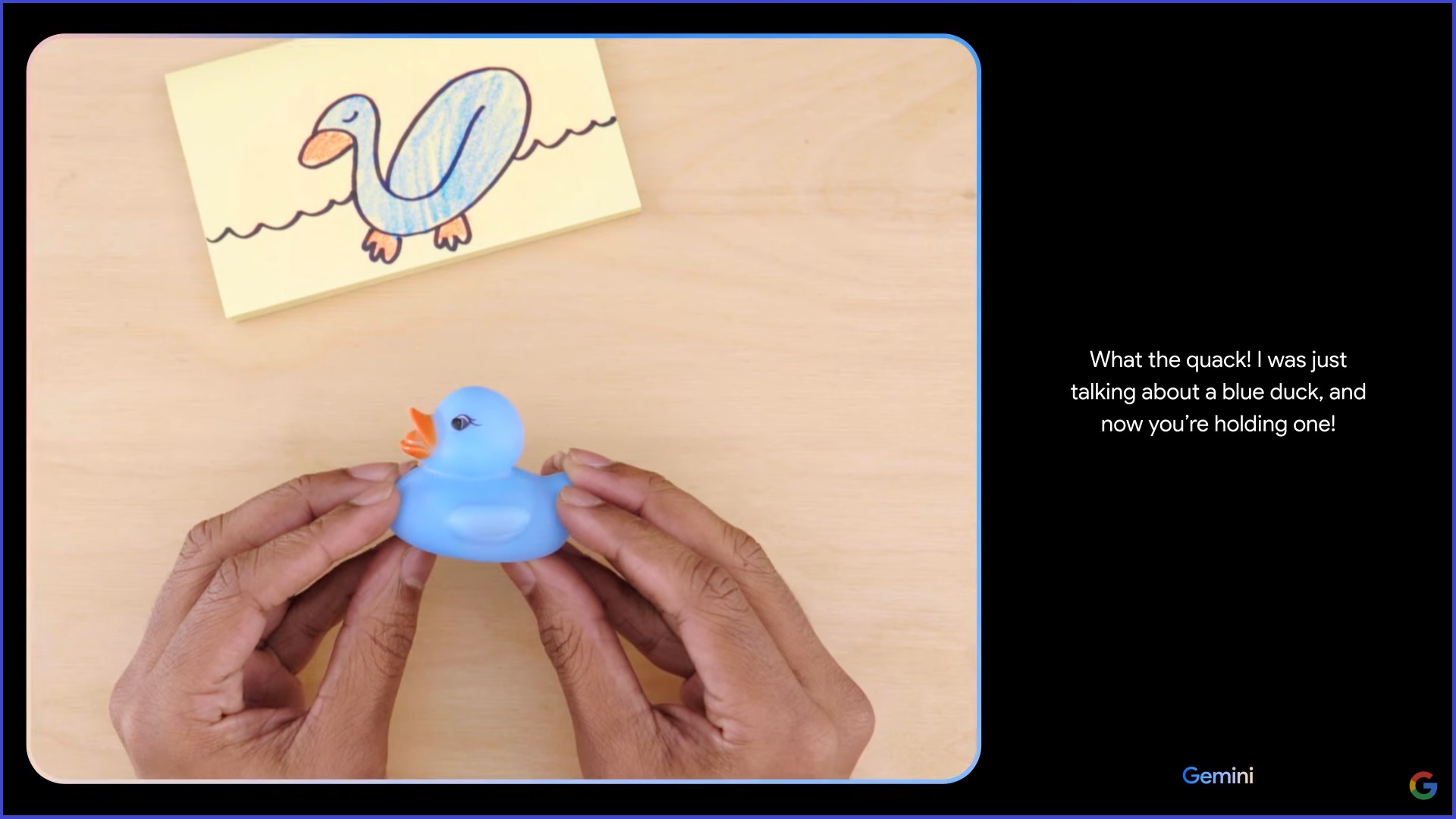

Gemini is “our largest and most capable model,” Google DeepMind CEO Demis Hassabis explained as Google researchers teased its capabilities with a video in which the system progressively describes a drawing while a researcher sketches a duck piece by piece: first as a squiggle, then as a series of curves, and finally recognising the image as a duck.

When Gemini notes that blue ducks are rare in the real world and asks why the researcher is colouring the duck blue, he shows it a blue rubber duck and asks whether it would float. Gemini replies that it depends on what material the duck is made of. When the researcher squeezes the duck to make it squeak, Gemini recognises it as a rubber duck that would float, demonstrating the deductive reasoning that lies at the heart of the model.

“What’s amazing about Gemini is that it’s so good at so many things,” Hassabis continued, noting that as the model’s training progressed “we started seeing that Gemini was better than any other model out there on these very important benchmarks: in each of the 50 different subject areas that we tested it on, it’s as good as the best human subject experts in those areas.”

In benchmarking against OpenAI’s GPT-4 LLM, Google claims in a technical report on its testing, Gemini Ultra outperformed human experts on massive multitask language understanding (MMLU), a benchmark that includes tests in maths, physics, history, law, medicine, and ethics. Hassabis explained that the company’s “new benchmark approach to MMLU” allowed the model to “use its reasoning capabilities to think more carefully before answering difficult questions, leading to significant improvements over just using its first impression.”

Inching towards human intelligence

The broader implications of Gemini’s improved semantic performance will emerge as technology companies and their corporate customers digest its capabilities and begin applying them to real-world problems.

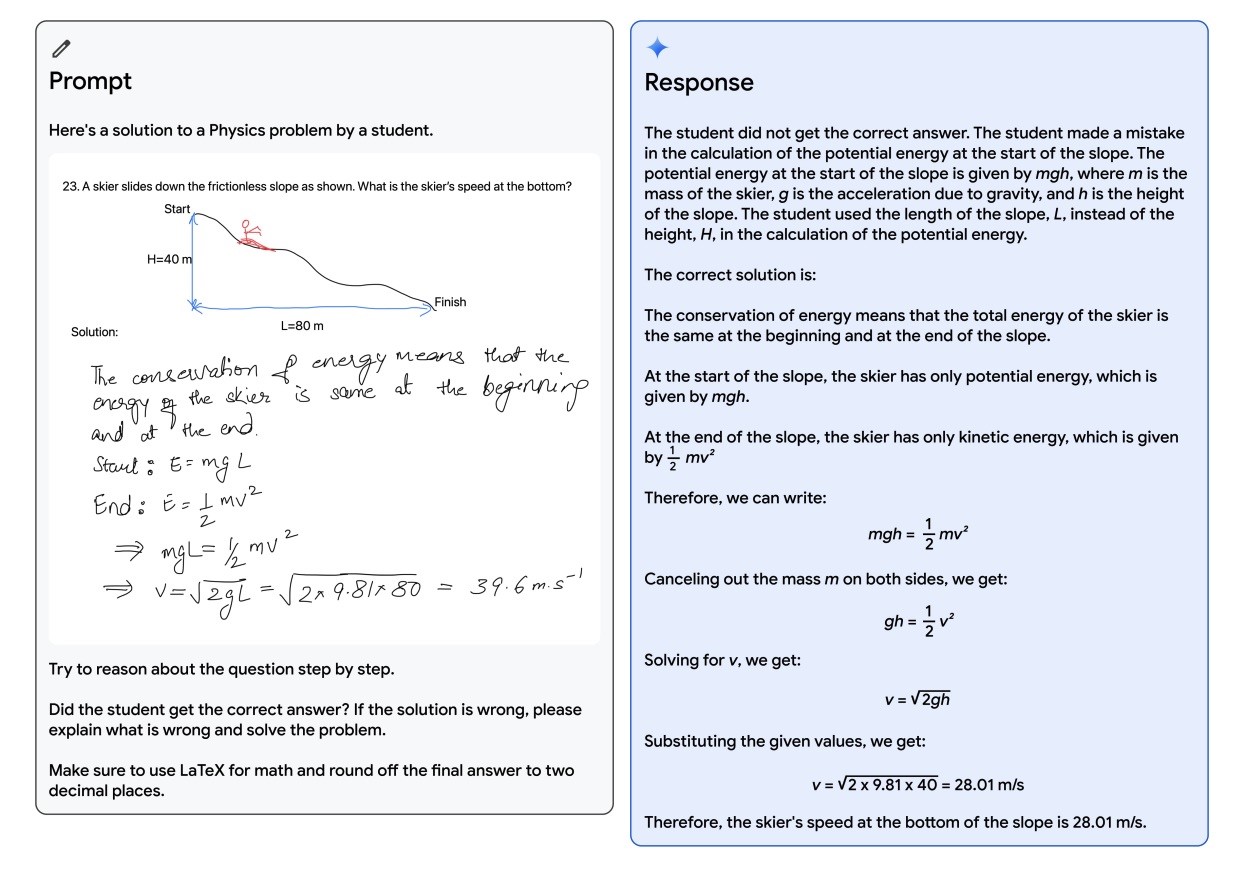

One use case explored during testing is the AI-driven marking of exam questions, with Gemini able to interpret the handwritten answer to a physics exam question, explain why the student got the question wrong, and step them through the correct answer.

This use case is already being discussed among Australian universities such as the University of Tasmania, which recently cut the amount of time that academic staff are allowed to spend marking student assignments – fuelling concerns that AI could be used to remove humans from the marking process.

With the newly released Australian Framework for Generative Artificial Intelligence in Schools set to guide the technology’s use in educational settings from next year, Gemini’s breakthroughs – and the inevitable counter-moves by OpenAI, Microsoft, Meta and other Google rivals – are certain to feed concerns that the technology is evolving faster than people can adapt to it.

Gemini is able to reason through complex problems. Image: Google

Gemini will rapidly become available across Google’s products. The Nano version is already available on the company’s Pixel 8 Pro phone and will be progressively rolled out to other devices, while Gemini Pro is being integrated with Bard and will be offered to developers through the upcoming Google AI Studio and Google Cloud Vertex AI.

The launch of Gemini comes at a fraught time for AI, with the recent turmoil at OpenAI exposing deep internal conflicts over the increasingly powerful technology, and regulators racing to constrain its development amid concerns that its implications are not fully understood and that it may be difficult to control.

Google claims Gemini has been built “responsibly from the start”, but the company has not joined the AI Alliance, a newly launched industry initiative designed to promote consensus among members including IBM, Meta, AMD, Dell Technologies, Fast.ai, Intel, Linux Foundation, Oracle, Red Hat, ServiceNow, and dozens of universities around the world.

“The progress we continue to witness in AI is a testament to open innovation and collaboration across communities of creators, scientists, academics and business leaders,” said IBM chairman and CEO Arvind Krishna, calling for an “innovative AI agenda underpinned by safety, accountability and scientific rigour.”

“This is a pivotal moment in defining the future of AI.”