The driver of a rented Tesla Cybertruck who died as the vehicle exploded outside the Trump International Hotel in Las Vegas on New Year’s Day had used OpenAI’s ChatGPT to help plan the blast, authorities say.

Warning: This story contains references to suicide and child abuse material.

Active-duty US army soldier Matthew Livelsberger, 37, allegedly used the popular generative AI chatbot to work out how much explosive material he would need for the blast, which the FBI said appeared to be a suicide.

The incident is believed to be the first known case on US soil of ChatGPT being used to help build an explosive device, the Las Vegas Metropolitan Police Department said on Wednesday.

Authorities said Livelsberger fatally shot himself to ignite the blast and, based on a manifesto and other writings he left behind, did not intend to kill others.

Seven people suffered minor injuries in the explosion. The vehicle had been loaded with racing fuel, pyrotechnic material, and shotgun shells, police said.

Kevin McMahill, the sheriff heading the Las Vegas Metropolitan Police Department, called Livelsberger’s use of ChatGPT a “concerning moment” and a “game-changer” for authorities.

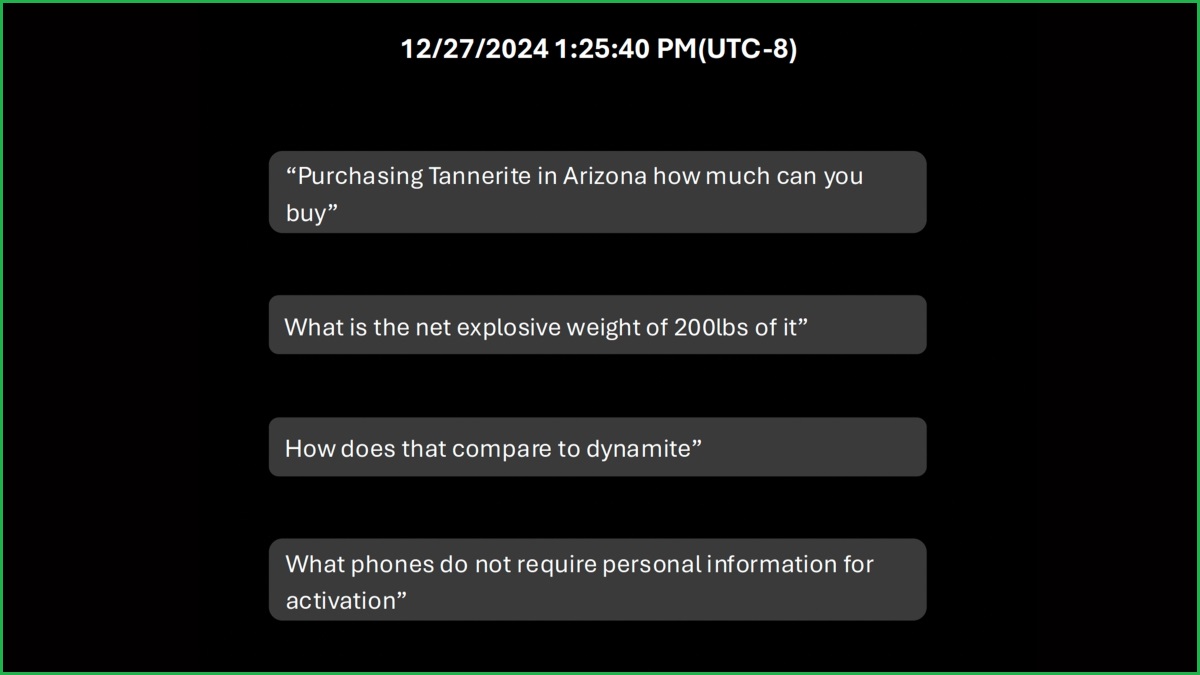

Police did not publish any responses Livelsberger received from ChatGPT, but did share some of the requests he allegedly made to the chatbot.

Examples of Matthew Livelsberger's alleged requests to ChatGPT. Image: Las Vegas Metropolitan Police Department / Supplied

Livelsberger left behind a log in which he said he believed he was being surveilled by law enforcement, but he had no criminal record and was not on their radar, police said.

In a statement to US media, ChatGPT creator OpenAI said it was “saddened by this incident and committed to seeing AI tools used responsibly”.

“Our models are designed to refuse harmful instructions and minimise harmful content,” a spokesperson said.

“In this case, ChatGPT responded with information already publicly available on the internet and provided warnings against harmful or illegal activities.

“We’re working with law enforcement to support their investigation.”

Livelsberger also left notes stating the blast was meant to be a “wake up call” for the US, officials said last week.

He had allegedly argued the country needed to “rally around” incoming president Donald Trump and Tesla CEO Elon Musk.

New Orleans attacker allegedly used smart glasses to prepare

On the same day as the Cybertruck explosion in Las Vegas, a suspected terrorist drove a rented ute through crowds in the city of New Orleans, killing 15 people and injuring dozens more.

Police said suspect Shamsud-Din Jabbar, a 42-year-old US army veteran, was killed in a firefight with officers before he could set off two improvised explosive devices nearby.

Jabbar was found to have used a pair of Meta Ray-Ban smart glasses to film himself riding a bike through the same area in the city’s French Quarter in late October, the FBI said.

FBI special agent Lyonel Myrthil said Jabbar was also wearing the glasses during his attack on the area’s busy Bourbon Street, but did not use them to livestream it.

“Meta glasses appear to look like regular glasses, but they allow the user to record photos and videos hands free,” Myrthil said.

Meta had not commented on the incident at the time of writing.

US authorities said they believed Jabbar was inspired to carry out the attack by Islamic State militants and had posted videos aligning himself with the group.

Jabbar worked in information technology and human resources during his time in the US army, before transferring to the army reserve in 2015 and leaving in 2020, the service confirmed to the Associated Press.

A recent study into smart glasses found some Australians had used them to record others without their consent or to record in prohibited places, raising concerns over public safety and privacy.

Criminals ‘always quick to adopt technology’

Globally, law enforcement has grappled with the rise of technologies such as 3D-printed guns, including in the alleged murder of a US healthcare company CEO by Luigi Mangione, as well as generative AI (genAI) systems that can quickly provide users with information and synthetic media.

In Australia, use of genAI by alleged criminals has largely been associated with the creation of scams, cyberattacks, and deepfake images, including child sexual abuse material (CSAM).

In an address to the National Press Club in Canberra last April, Australian Federal Police commissioner Reece Kershaw said criminals were “always quick to adopt technology”.

“The majority of federal crime is tech enabled, such as online child exploitation, cybercrime, fraud, illicit drug trafficking, terrorism, and foreign interference,” he said.

“We used to plan the future of policing through the lens of the years to come — but now, because of constant advances in technology, the years to come are almost every 24 hours.

“Partly, this is because of the fourth industrial revolution — think connectivity, automation, machine learning, and artificial intelligence.”

Senior figures in Australian law enforcement have voiced support for using AI-powered facial recognition and decryption to help identify criminals, but many of them believe privacy laws and public perception have prevented them from using some AI technologies.

If you need someone to talk to, you can call:

- Lifeline on 13 11 14

- Beyond Blue on 1300 22 46 36

- Headspace on 1800 650 890

- 1800RESPECT on 1800 737 732

- Kids Helpline on 1800 551 800

- MensLine Australia on 1300 789 978

- QLife (for LGBTIQ+ people) on 1800 184 527

- 13YARN (for Aboriginal and Torres Strait Islander people) on 13 92 76

- Suicide Call Back Service on 1300 659 467