Warning: This story contains references to self-harm.

ChatGPT users will soon have their ages estimated or verified so the popular AI chatbot can tailor its responses for those aged under 18, with OpenAI CEO Sam Altman stating some users will be asked to provide identity documents.

The American AI giant is developing “a long-term system to understand whether someone is over or under 18”, it announced on Wednesday, Australian time, without specifying when the system would be introduced.

“When we identify that a user is under 18, they will automatically be directed to a ChatGPT experience with age-appropriate policies, including blocking graphic sexual content and, in rare cases of acute distress, potentially involving law enforcement to ensure safety,” OpenAI said.

The company's age assurance pledge comes after it was sued in August by the family of 16-year-old Adam Raine, who died by suicide after ChatGPT allegedly helped him plan his death.

Raine's family alleged he was able to evade ChatGPT’s safeguards by telling the chatbot he was seeking instructions on suicide for use in a fictional story.

“The way ChatGPT responds to a 15-year-old should look different than the way it responds to an adult,” OpenAI said in its latest update, after it recently promised to improve the chatbot’s safeguards.

But ChatGPT should still “treat our adult users like adults”, Altman argued in an accompanying blog post.

“The model by default should not provide instructions about how to commit suicide, but if an adult user is asking for help writing a fictional story that depicts a suicide, the model should help with that request,” he said.

Other firms with AI chatbots such as Character Technologies (which runs Character.AI) have also been sued by parents who alleged AI models played a role in their teens’ mental health crises.

The United States Federal Trade Commission (FTC) launched an inquiry earlier this month into technology companies and potential harms to children and teenagers from AI companions.

How will ChatGPT age checks work?

OpenAI’s age prediction system for ChatGPT would estimate a user’s age “based on how people use ChatGPT”, while users could also be asked for identification documents “in some cases or countries”, Altman said.

“We know [ID] is a privacy compromise for adults but believe it is a worthy tradeoff,” he wrote.

Altman did not outline exactly what usage factors would be analysed to estimate a user's age, but previous age estimation and inference systems have used characteristics such as a user’s written vocabulary or broader internet activity.

Following age checks, ChatGPT would apply “different rules to teens”, Altman said, including not engaging in flirtatious talk when asked and not engaging in “discussions about suicide [or] self-harm even in a creative writing setting”.

“And, if an under-18 user is having suicidal ideation, we will attempt to contact the user’s parents and if unable, will contact the authorities in case of imminent harm,” he said.

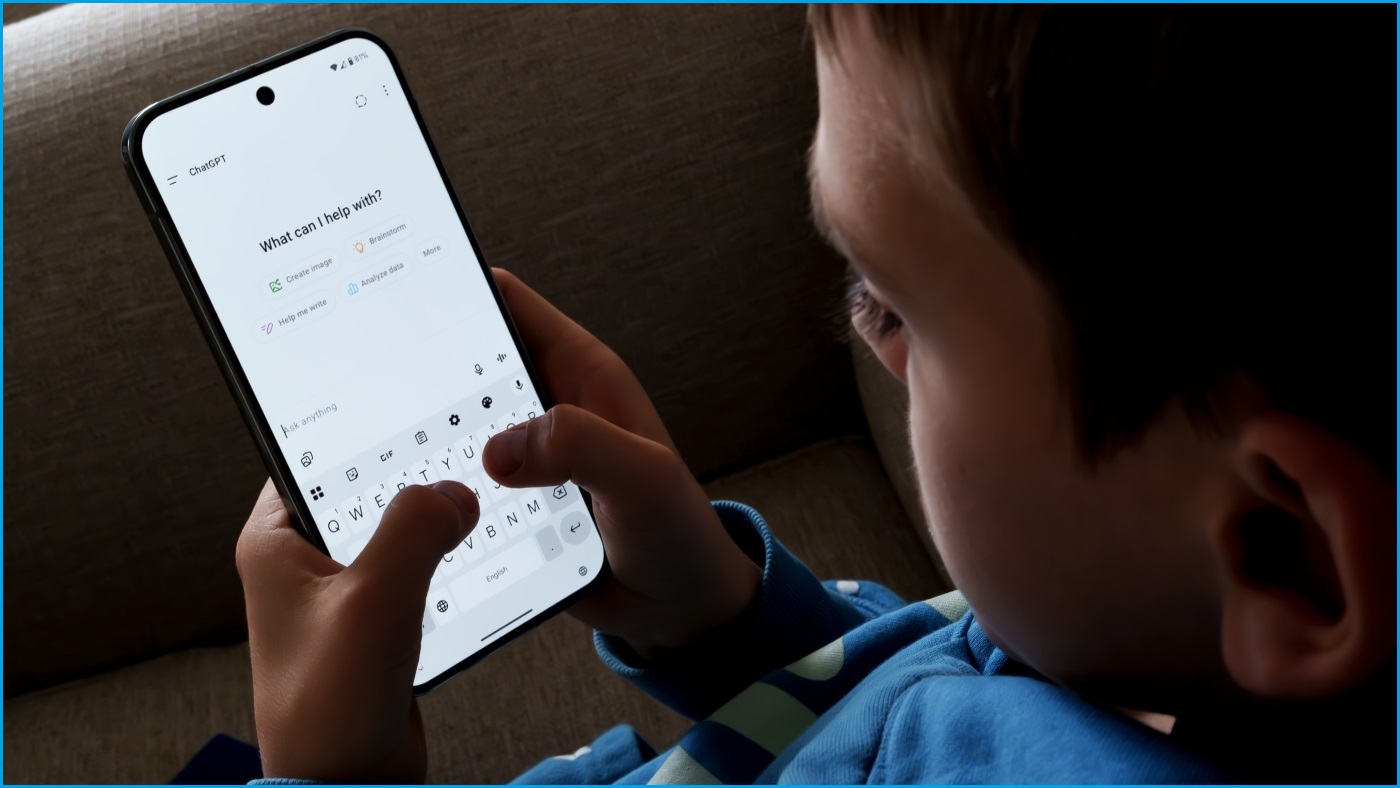

OpenAI says under-18s will soon use a version of ChatGPT which is tailored for minors. Image: Shutterstock

OpenAI admitted age prediction “isn’t easy to get right, and even the most advanced systems will sometimes struggle”.

The company said if its systems were not confident about a user’s age, ChatGPT would “take the safer route and default to the under-18 experience”.

The system would still give adults “ways to prove their age to unlock adult capabilities”, the firm added.

As age assurance spreads across more online platforms, Australians should expect not only AI chatbots such as ChatGPT to check their age, but also social media platforms, search engines, and pornographic websites.

Putting safety ‘ahead of privacy and freedom’

Age predictions would be used to identify underage users on ChatGPT because — unlike for its adult users — OpenAI had decided to “prioritise safety ahead of privacy and freedom for teens”, Altman said.

“This is a new and powerful technology, and we believe minors need significant protection,” he said.

Altman added that OpenAI realised some of its key principles of safety, freedom, and privacy were “in conflict” with each other, and its changes would not be universally welcomed.

“These are difficult decisions, but after talking with experts, this is what we think is best and want to be transparent in our intentions,” he said.

OpenAI was still developing “advanced security features to ensure your data is private, even from OpenAI employees”, said Altman, who added there would be “certain exceptions” for potential serious misuse and “the most critical risks”.

“Threats to someone’s life, plans to harm others, or societal-scale harm like a potential massive cybersecurity incident may be escalated for human review,” he said.

The right to privacy when using AI was still “extremely important” to OpenAI and broader society, Altman argued.

“People talk to AI about increasingly personal things; it is different from previous generations of technology, and we believe that they may be one of the most personally sensitive accounts you’ll ever have,” he said.

Parental controls expected to arrive in ChatGPT in September would be “the most reliable way for families to guide how ChatGPT shows up in their homes” until age assurance systems were available, OpenAI said.

The controls are expected to allow parents to link the account of their teenager (who is at least 13 years old) to their own account through an email invitation, so they can manage how ChatGPT responds to their child and what it remembers about those discussions.

Parents would also receive notifications “when the system detects their teen is in a moment of acute distress”, OpenAI said.

“If we can’t reach a parent in a rare emergency, we may involve law enforcement as a next step.”

If you need someone to talk to, you can contact:

- Lifeline — 13 11 14

- Beyond Blue — 1300 22 46 36

- Headspace — 1800 650 890

- 1800RESPECT — 1800 737 732

- Kids Helpline — 1800 551 800

- MensLine Australia — 1300 789 978

- QLife (for LGBTIQA+ people) — 1800 184 527

- 13YARN (for Aboriginal and Torres Strait Islander people) — 13 92 76

- Suicide Call Back Service — 1300 659 467