Australia’s Therapeutic Goods Administration (TGA) says it is “stepping up its efforts” to regulate digital scribes, including those using artificial intelligence technology, following calls for greater oversight of the software as it becomes increasingly prevalent in healthcare settings.

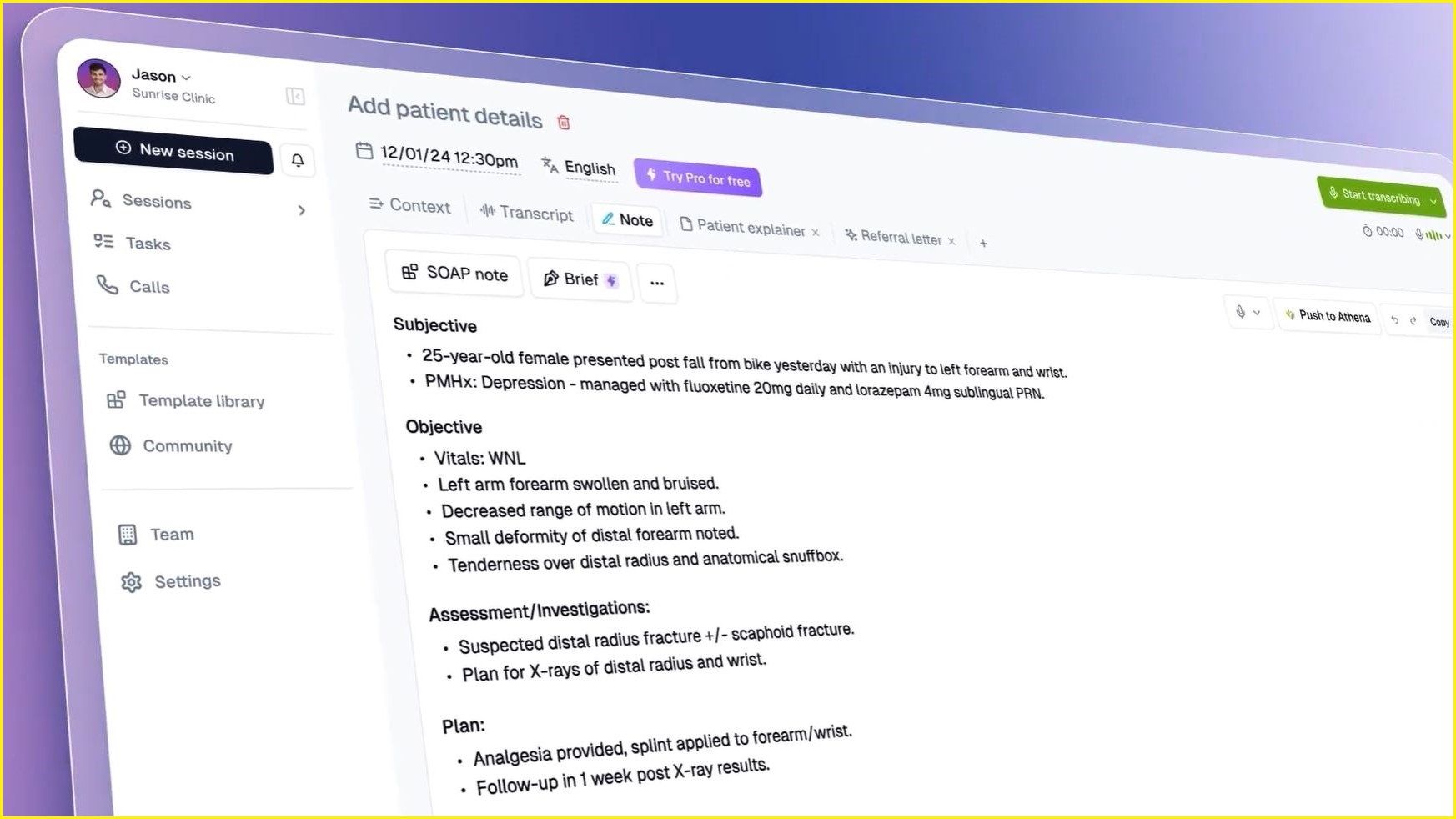

AI scribes typically use large language models (LLMs) to quickly transcribe and summarise discussions between patients and healthcare practitioners.

Some systems are also able to suggest potential treatments, write referral letters, make follow-up phone calls, propose billing opportunities, and draft healthcare plans.

Experts have called for advanced AI scribes to be regulated as medical devices by the TGA, given the sensitive data they handle and their ability to make medical recommendations, as Information Age reported in August.

The TGA announced on Friday it was reviewing AI scribes amid concerns some systems were introducing features “such as diagnostic and treatment suggestions”, meaning those systems may need to be considered medical devices and therefore formally regulated before being sold or advertised.

While most AI scribes do not include such features yet, a TGA review published in July found that software which did propose diagnoses or treatment options was “potentially being supplied in breach of the [Therapeutic Goods] Act”.

The TGA had begun responding to complaints and reports of non-compliance, it said, while also addressing “unlawful advertising and supply” of some AI scribes.

“We may take targeted action in response to alleged non-compliance,” the regulator said.

A TGA spokesperson told Information Age that while the administration had “received a small number of complaints so far”, each piece of software “may be used by many health practitioners, so the impact of a few non-compliant products on patients and the health system could be significant”.

The regulator said it encouraged consumers and healthcare practitioners to report concerns through its website.

Australian firms welcome regulatory scrutiny

Australian companies such as Heidi Health and Lyrebird Health have seen significant success in the AI scribe industry, amid competition from smaller providers such as i-scribe and mAIscribe — all of which were contacted for comment.

Heidi Health co-founder and CEO Dr Thomas Kelly told Information Age his team “welcome the TGA’s sharpened compliance focus”.

While Kelly said Heidi did not currently have features which would render it a medical device under the TGA definition, he said the firm would engage with the regulator “if we ever introduce features that give Heidi a therapeutic purpose”.

“Proportionate, risk‑based enforcement protects patients and ensures a level playing field for responsible developers,” he said.

Australian AI scribe company Heidi Health says its software does not yet meet the definition of a medical device. Image: Heidi Health / YouTube

Akuru, the health tech company behind i-scribe, said while its product also did not meet the definition of a medical device, it welcomed “the regulator’s latest focus on taking targeted action” against unregulated products which provided diagnostic advice or treatment suggestions.

“We know the scribing market is crowded, and unfortunately, some solutions do cross that line,” Akuru medical director Dr Emily Powell said in a statement.

“… We welcome wider regulatory guidance that empowers clinicians to make informed decisions about secure, compliant, and appropriate software.”

Adoption ‘running ahead of governance’

University of Queensland associate professor of business information systems Dr Saeed Akhlaghpour, who has studied the use of AI scribes in healthcare, described the TGA’s focus on such technology as “a positive move” given its increasing use.

“Bringing AI scribes under safety and medical-device rules gives patients greater peace of mind, reduces legal uncertainty for clinicians, and offers vendors a clearer, more predictable path to compliance,” he said.

“The reality is that adoption is already running ahead of governance — industry surveys suggest nearly half of Australian doctors are already using, or planning to use, AI scribes.

“That scale of uptake makes timely guardrails essential now, not later.”

Regulators in the United States, European Union, and United Kingdom were also “moving to treat scribe tools that go beyond transcription as clinical technologies, not just productivity aids”, Akhlaghpour said.

Akuru, the Australian company behind i-scribe, says it welcomes the TGA’s scrutiny of digital scribe software. Image: i-scribe / Supplied

Expert calls for ongoing reviews

Australian AI governance expert Dr Kobi Leins — who last month told Information Age she was turned away from a medical practice after not consenting to its use of an AI scribe — said ongoing industry-wide expert reviews and training were needed to maintain public confidence.

“Where the data goes and how it is collected and stored is critical, as implications may be profound if shared with insurers, employers, or others — and in the case of genetic and family related health, may have implications for family members not present,” she said.

Ongoing reviews of AI scribes needed to be triggered when systems were “modified, connected, or repurposed”, said Leins, who called for such reviews to apply “cybersecurity, AI, ethical, legal, vulnerability, medical and other lenses … to capture the wide range of risks and legal compliance required”.

“Included in that review needs to be a plan for ongoing training of the medical profession as to how to use the tools effectively, including seeking consent and always providing the option to opt out,” she said.

“Ensure independent deep expertise to review, not vendor reviews … and ensure that vendors — like with cybersecurity — have the responsibility to notify of changes to systems to practitioners.”

Healthcare professionals should “regularly assess” digital scribe software before using it, including when software updates may introduce new functionality or change data protection and privacy safeguards, the TGA said in August.

Aside from complying with the TGA’s rules, AI scribes used in healthcare may also need to uphold obligations under laws such as the Privacy Act, Cyber Security Act, and Australian Consumer Law, the regulator added.