Leading AI company Anthropic has issued a dire cybersecurity warning after a state-sponsored threat actor reportedly tricked its AI chatbot into executing a large-scale cyberattack.

After recently arguing that an “inflection point” had been reached where AI models would become “genuinely useful” for both the good and bad guys in cybersecurity, Anthropic announced on Thursday that it had detected the use of agentic AI in a “highly sophisticated” espionage campaign originating from China.

After first detecting “suspicious activity” in mid-September, the company found the attackers had used AI’s “agentic capabilities” (the ability of some AI models to operate with minimal human supervision) to an “unprecedented degree”.

This meant a chatbot had been used not only to offer advice on the espionage campaign, but to directly “execute the cyberattacks themselves”.

By manipulating Anthropic’s own AI coding assistant, Claude Code, the attackers reportedly attempted to infiltrate roughly 30 targets around the world.

In a small number of cases, they succeeded.

“The operation targeted large tech companies, financial institutions, chemical manufacturing companies, and government agencies,” wrote Anthropic.

“We believe this is the first documented case of a large-scale cyberattack executed without substantial human intervention.”

They tricked our chatbot

Anthropic said the attack relied on several emerging features of AI models, including the growing capacity of chatbots to demonstrate “agency”.

“Models can act as agents – that is, they can run in loops where they take autonomous actions, chain together tasks, and make decisions with only minimal, occasional human input,” said Anthropic.

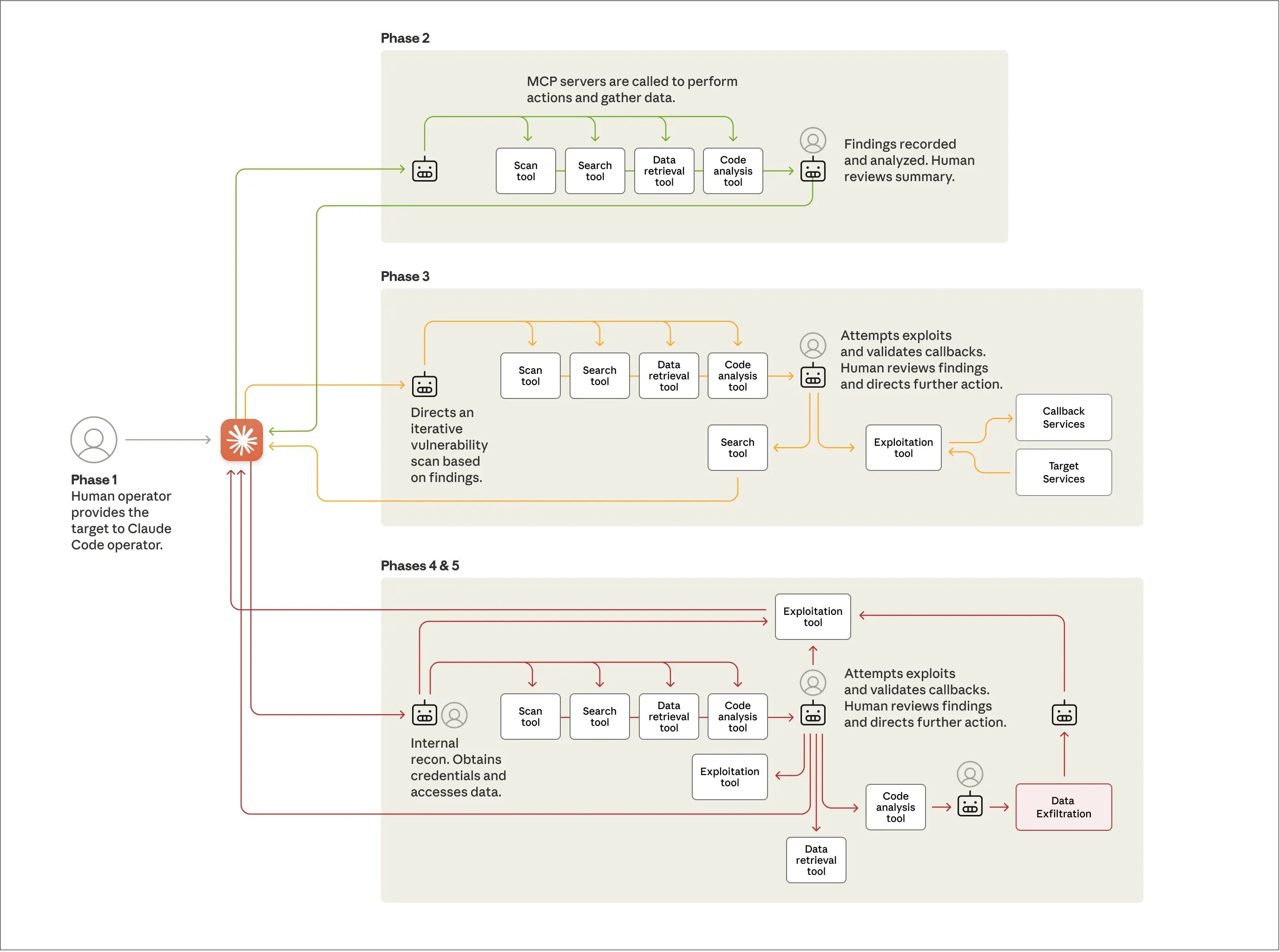

Anthropic explained that, by exploiting Claude’s ability to autonomously execute certain user requests, a “human operator” was able to instruct the chatbot to perform reconnaissance on its targets.

From there, the threat actor instructed the chatbot to conduct vulnerability scans, attempt exploits and eventually obtain sensitive credentials to access the victims’ data – all of which was possible thanks to the tool “researching and writing its own exploit code”.

Anthropic graphed out China’s alleged AI espionage campaign. Source: Anthropic

“They had to convince Claude – which is extensively trained to avoid harmful behaviours – to engage in the attack,” wrote Anthropic.

“They did so by jailbreaking it, effectively tricking it to bypass its guardrails.”

This “jailbreaking” process involved breaking attacks down into small, “seemingly innocent” tasks that hid the full context, and therefore the malicious purpose, from the model.

The attackers also falsely told Claude that it was “an employee of a legitimate cybersecurity firm”.

“Overall, the threat actor was able to use AI to perform 80 to 90 [per cent] of the campaign, with human intervention required only sporadically,” wrote Anthropic.

“At the peak of its attack, the AI made thousands of requests, often multiple per second – an attack speed that would have been, for human hackers, simply impossible to match.”

Anthropic said it had “disrupted” the attack by banning the relevant accounts, implementing “multiple defensive enhancements”, notifying affected organisations and coordinating with authorities.

Experts cast doubt on report

Despite Anthropic sounding the alarm on AI-driven cybercrime, several cybersecurity experts voiced scepticism over the severity of its findings.

Jonathan Allon, vice president of research and development at Palo Alto Networks, interpreted the findings as a “bog standard attack” he and his colleagues “see every day”, while Jeremy Kirk, analyst at cyber threat intelligence company Intel 471, described Anthropic’s report as “odd”.

“[At] just 13 pages, it has none of the traditional components of a usual threat intel report,” said Kirk.

“Tucked in on page 11 are a number of caveats: the attackers are using open-source penetration tools plus standard password crackers, network scanners, exploit frameworks and other utilities.

“Don't get me wrong: I do buy Anthropic's contention that automation [and] AI are going to allow attackers to reach a greater scale and that will pose more difficulties in defence… but it would be insightful to hear from one of the 30 entities that were attacked.”

Cyber analyst Jeremy Kirk cast doubt on Anthropic's intel report. Photo: Supplied

And after attempting to replicate the attack, security researcher Kevin Beaumont decided the findings weren’t worth panicking over.

“It doesn’t actually work as there’s a bug in it, likely introduced via a large language model,” said Beaumont.

Anthropic did not respond to Information Age prior to publication, though its report conceded Claude didn’t always work perfectly during the attacks.

“It occasionally hallucinated credentials or claimed to have extracted secret information that was in fact publicly available,” wrote Anthropic.

Despite current limitations, Anthropic asserted that threat actors “with the correct setup” can now use agentic AI systems for extended periods to “do the work of entire teams of experienced hackers”.

Arash Shaghaghi, senior lecturer in cybersecurity at the University of New South Wales, told Information Age that although “AI hacking” is not at an inflection point, “the ground is shifting fast”.

“While I disagree with the notion that we’ve suddenly entered a world of fully autonomous AI-driven cyberattacks, we have definitively entered one where AI dramatically accelerates and scales the human attacker's workflow,” said Shaghaghi.

Indeed, Shaghaghi explained that, just as hackers learned to automate mass phishing emails in the early 2000s, modern criminals are learning to use AI not to invent new crimes, but to scale old ones.

“The more productive way forward is to use the same technology defensively,” said Shaghaghi.

“The side that learns to wield these assistants best will define the next decade of cybersecurity.”