The spread of misinformation about the coronavirus has already been a problem for the likes of Google, Facebook and Twitter, but work-from-home policies are now making it harder for social media platforms to keep inappropriate content and misinformation in check.

In a recent update about the coronavirus, Facebook said that it would “send all contract workers who perform content review home, until further notice”.

Contract content reviewers are regularly exposed to graphic violence and child exploitation as part of their daily work, and many have suffered mental health problems as a result.

Facebook said that there was “some work” that staff and contractors can’t complete at home “due to safety, privacy and legal reasons” and that it would need to rely more on machine learning and automation to moderate content across its platforms.

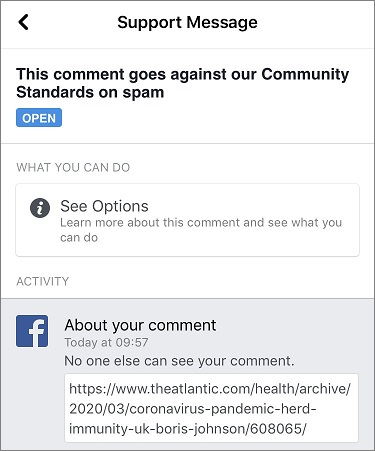

These new measures sparked worry online on Wednesday when users began to notice that news articles from reputable sources were being marked as spam and removed.

Stanford University computer scientist Alex Stamos feared this would be “the start of [machine learning] going nuts with less human oversight”.

But Facebook’s VP of Integrity, Guy Rosen, soon stepped in to say the issue was due to a “bug in an anti-spam system” and was “unrelated to any changes in our content moderation workforce”.

Rosen later said Facebook had fixed the bugs in its automoderator.

“We’ve restored all the posts that were incorrectly removed, which included posts on all topics – not just those related to COVID-19,” Rosen said.

“This was an issue with an automated system that removes links to abusive websites, but incorrectly removed a lot of other posts too.”

Users reported getting messages like this when sharing news articles.

Facebook’s automoderators have a poor track record in times of crisis.

The company was widely criticised when videos of the Christchurch massacre spread across its platform.

It also failed to promptly remove a more recent live-streamed massacre in Thailand.

An unchecked ban hammer

Twitter and YouTube also announced they would lean heavily on their AI moderators during coronavirus lockdowns but cautioned that some posts might get removed unnecessarily.

“We want to be clear: while we work to ensure our systems are consistent, they can sometimes lack the context that our teams bring, and this may result in us making mistakes,” Twitter said in a blog post.

“As a result, we will not permanently suspend any accounts based solely on our automated enforcement systems.”

Twitter Safety (@TwitterSafety) tweeted on March 17, 2020:

What this means for you:

- We’re working to improve our tech so it can make more enforcement calls — this might result in some mistakes.

- We’re meeting daily to see what changes we need to make.

- We’re staying engaged with partners around the world.

YouTube made a similar announcement, also warning that appeals against content removed by mistake may take longer to resolve.

“Automated systems will start removing some content without human review, so we can continue to act quickly to remove violative content and protect our ecosystem, while we have workplace protections in place,” YouTube said.

On Tuesday, Google, Facebook, LinkedIn, Microsoft, Reddit, Twitter, and YouTube released an unusual industry statement saying they were “working closely together” on coronavirus-related efforts.

“We’re helping millions of people stay connected while also jointly combating fraud and misinformation about the virus, elevating authoritative content on our platforms, and sharing critical updates in coordination with government healthcare agencies around the world,” the statement said.

“We invite other companies to join us as we work to keep our communities healthy and safe.”