Advanced AI reasoning models suffer from “complete accuracy collapse” when asked to solve complex puzzles and problems, raising concerns about their “fundamental limitations”, according to researchers at Apple.

Reasoning models are advanced AI systems which typically carry out multiple steps of processing before providing an answer, allowing them to complete research projects and multi-step tasks.

In a paper titled The Illusion of Thinking, six researchers found that popular large reasoning models (LRMs) had not yet developed general problem-solving capabilities, and that their reasoning ability increased with problem complexity “up to a point” before dropping off.

The study, published last weekend, just days before Apple made some AI announcements of its own, found that standard large language models (LLMs) performed best at low-complexity tasks, while LRMs such as OpenAI’s o3-mini, DeepSeek’s R1, and Anthropic’s Claude 3.7 Sonnet were better at medium-complexity tasks.

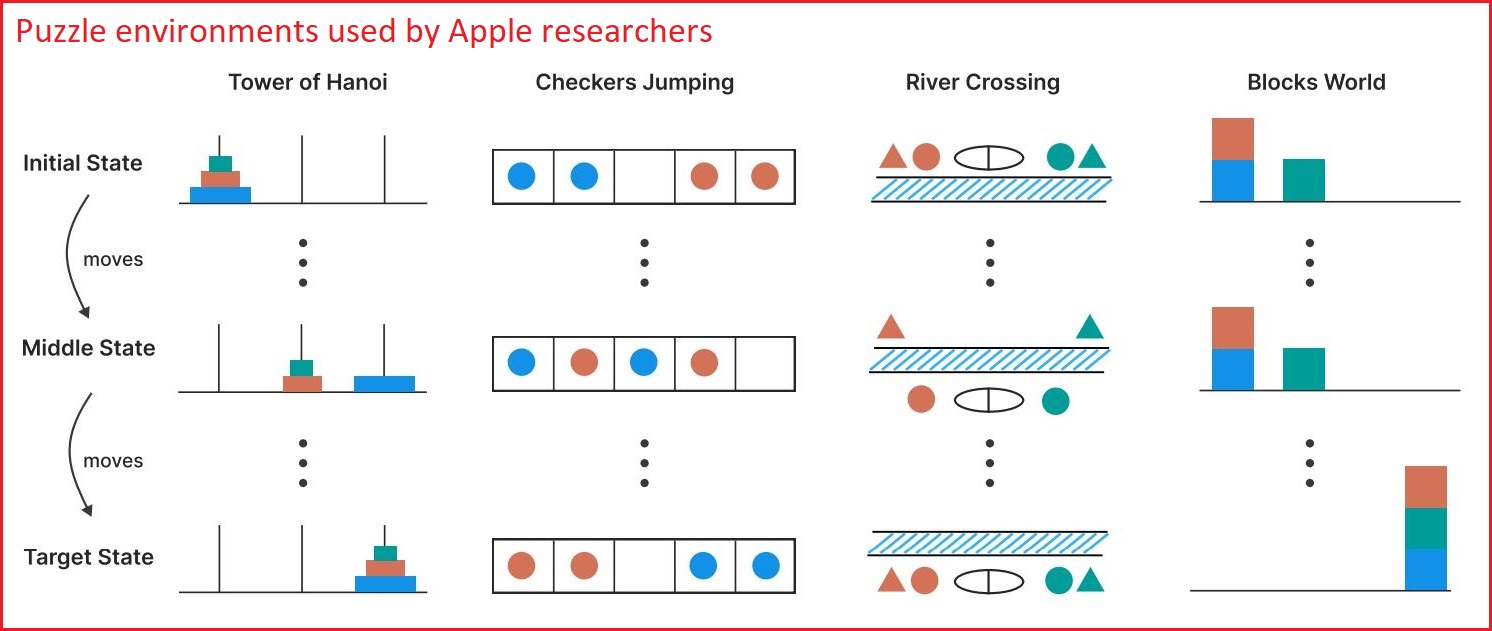

However, both types of models experienced “complete collapse” on complex puzzles such as the Tower of Hanoi problem, a river crossing puzzle, and reconfiguring a stack of blocks, the researchers found.

Even when the models were given the algorithm needed to solve one of the puzzles, their performance did not improve, the researchers said.
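To give a sense of what such a solution algorithm looks like, here is a minimal sketch of the textbook recursive solution to the Tower of Hanoi in Python. It is an illustration only: the function name `hanoi` and the peg labels are hypothetical, and whether it matches the exact algorithm the researchers supplied is an assumption. It also shows why difficulty climbs so steeply: an n-disk puzzle needs 2^n − 1 moves, so each extra disk roughly doubles the number of steps a model must get right.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the (disk, from_peg, to_peg) moves that solve an n-disk Tower of Hanoi.

    Hypothetical helper for illustration; peg names are arbitrary labels.
    """
    if n == 0:
        return []
    # Move the n-1 smaller disks out of the way onto the spare peg.
    moves = hanoi(n - 1, source, spare, target)
    # Move the largest disk directly to the target peg.
    moves.append((n, source, target))
    # Move the n-1 smaller disks from the spare peg on top of the largest disk.
    moves += hanoi(n - 1, spare, target, source)
    return moves


# The number of required moves grows as 2**n - 1.
for n in (3, 7, 10):
    print(n, "disks:", len(hanoi(n)), "moves")
```

A three-disk puzzle takes seven moves, while ten disks already take 1,023, each of which must be executed without error.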

“These insights challenge prevailing assumptions about LRM capabilities and suggest that current approaches may be encountering fundamental barriers to generalisable reasoning,” they wrote.

The researchers said a limitation of their tests was that they represented “a narrow slice of reasoning tasks and may not capture the diversity of real-world or knowledge-intensive reasoning problems”.

While their paper was not the first to highlight the limits of LRMs (others have also criticised describing reasoning models as carrying out “thinking”), it has added to a body of evidence that such models have specific limitations.

Apple does not yet have a publicly available LRM of its own, while OpenAI, DeepSeek, and Anthropic have all released slightly more advanced reasoning models since Apple’s testing.

Image: Apple / 'The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity'

Reasoning models unlikely to reach AGI, expert says

American academic Gary Marcus, who has been critical of AI hype and cautious about the technology’s capabilities, argued the Apple study showed “the chances that models like Claude or o3 are going to reach AGI seem truly remote”.

AGI, or artificial general intelligence, would mark the point at which AI systems match humans in intelligence, and it has been a stated goal of several AI companies, including ChatGPT creator OpenAI.

The new Apple study was “pretty devastating to LLMs”, Marcus wrote on Substack on Sunday.

“What this means for business and society is that you can’t simply drop o3 or Claude into some complex problem and expect it to work reliably,” he said.

“… Anybody who thinks LLMs are a direct route to the sort of AGI that could fundamentally transform society for the good is kidding themselves.”

While Marcus said the findings did not mean developments in AI deep learning were dead, he argued, “LLMs are just one form of deep learning, and maybe others, especially those that play nicer with symbols, will eventually thrive.”

Researchers at Apple found advanced AI reasoning models suffered from 'complete accuracy collapse' when confronted with complex puzzles. Image: Apple

OpenAI CEO says humanity getting ‘close to’ superintelligence

Sam Altman, CEO of OpenAI, said he still thought humanity was “close to building digital superintelligence” as AI reasoning improved. Superintelligence would see the technology become more intelligent than humans, the potential next step after AGI.

Writing in a blog post on Wednesday, Altman said that while 2025 had seen more widespread use of AI agents that can carry out multi-step tasks, 2026 would “likely see the arrival of systems that can figure out novel insights” and 2027 “may see the arrival of robots that can do tasks in the real world”.

Altman, an AI optimist whose company is working on a line of AI consumer products with Apple's former design chief, also suggested the 2030s would be “wildly different from any time that has come before”.

“We do not know how far beyond human-level intelligence we can go, but we are about to find out,” he wrote.

“… If we told you back in 2020 we were going to be where we are today, it probably sounded more crazy than our current predictions about 2030.”

Altman’s latest post came amid multiple reports that social media giant Meta was preparing to launch a new AI lab aimed at developing superintelligence.

The company has reportedly agreed to take a large stake in data labelling firm Scale AI, whose 28-year-old CEO, Alexandr Wang, is expected to take a top position inside Meta.