The Australian government says Roblox is “on notice” over reports of child grooming and sexual exploitation on the popular gaming platform.

Communications Minister Anika Wells has written to US-based Roblox Corporation requesting an urgent meeting over "graphic and gratuitous user-generated content" on the platform, including sexually explicit and suicidal material.

"Even more disturbing are ongoing reports and concerns about children being approached and groomed by predators, who actively seek to exploit their curiosity and innocence," her letter reportedly said.

The office of Australia’s eSafety Commissioner, Julie Inman Grant, announced on Tuesday it had put Roblox “on notice” and notified its creators of eSafety’s intention to “directly test the platform’s implementation and effectiveness of the nine safety commitments it made to Australia’s online safety regulator last year”.

Those commitments, made in September 2025, included making under-16s' accounts private by default and introducing tools to stop adult users from contacting under-16s without parental consent.

Despite Roblox telling eSafety it had upheld its commitments by the end of 2025 to comply with Australia’s Online Safety Act, Inman Grant said her office remained “highly concerned by ongoing reports regarding the exploitation of children on the Roblox service, and exposure to harmful material”.

“That’s why eSafety wrote to Roblox last week to notify them that, in addition to our ongoing compliance monitoring, we will also be directly testing the implementation of its commitments so that we have first-hand insights into this compliance,” she said in a statement.

In her letter to Roblox, Minister Wells reportedly argued “issues appear to persist” on the platform.

“This is untenable and these issues are of deep concern to many Australian parents and carers,” she wrote.

Wells said in a statement that she had also asked Australia's Classification Board "whether Roblox’s PG classification remains appropriate, noting the game was last classified in 2018, and any further measures that could be taken".

Roblox, which was not included in the government’s social media ban for under-16s, reports having more than 150 million daily users, most of whom are under 18.

Wells said “Australian parents and children expect more from Roblox”.

"They can and must do more to protect kids, and when we meet I’ll be asking how they propose to do that," she said.

Roblox could face penalty of up to $49.5 million

The eSafety Commissioner’s office said it “may take further action under the Online Safety Act” and impose a fine on Roblox if its testing showed the platform had not fulfilled its legal obligations.

“Where there is non-compliance, eSafety will use the full range of its enforcement powers, as appropriate,” the agency said in a statement.

“This can include seeking penalties of up to $49.5 million.”

Inman Grant’s office said it would also assess Roblox’s compliance with new online safety codes which begin on 9 March and relate to online pornography, high impact violence, and self-harm content.

“These also contain requirements for online gaming services such as Roblox to prohibit and take proportionate action against non-consensual sharing of intimate images, the grooming of children, and sexual extortion,” the agency said.

Roblox told Information Age it would inform the Australian government of the steps it has taken to increase user safety.

“Roblox has robust safety policies and processes to help protect users that go beyond many other platforms, and advanced safeguards that monitor for harmful content and communications,” a spokesperson said in a statement.

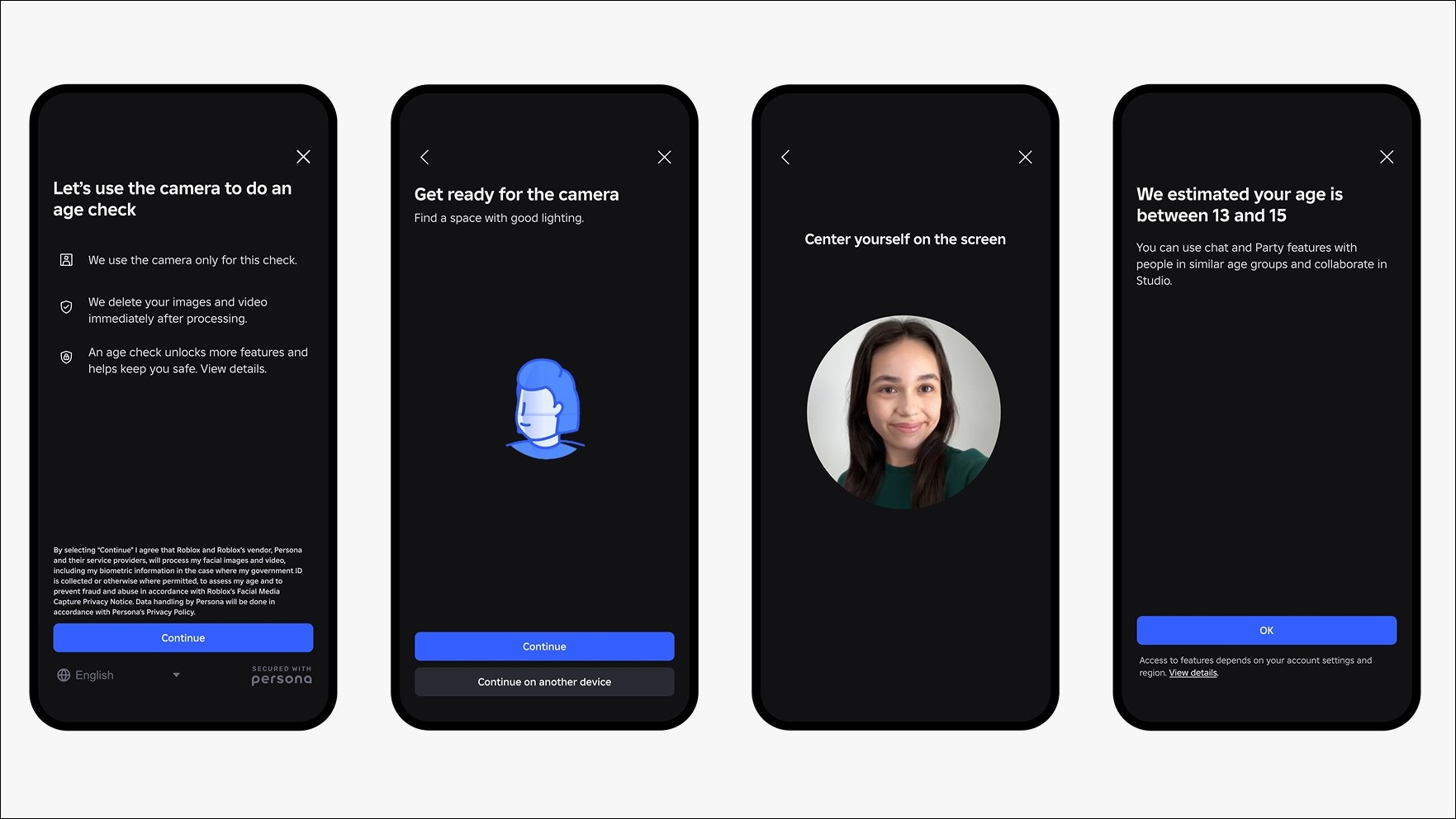

“We have filters designed to block the sharing of personal information, our chat features don’t allow user-to-user image or video sharing, and age checks are required before anyone has access to chat.

“Those same checks are also used to limit kids and teens to only chatting with others of similar age by default.”

The spokesperson added that “while no system is perfect”, Roblox was committed to safety and supported investigations brought by Australian law enforcement agencies.

As of January 2026, Roblox users are required to undergo age checks before they can access chat functions. Image: Roblox / Supplied

After Australian controversy, Discord expands age verification globally

Discord announced on Tuesday that from March it would expand globally the age assurance measures introduced for its Australian users in September 2025, even though the communications platform, like Roblox, was not caught up in the nation’s under-16s social media ban.

The changes would mean all new and existing users worldwide are restricted to a “teen-appropriate” account by default until they complete age assurance checks, which could give them access to potentially sensitive features, the company said.

Discord — which, like Roblox, is popular with younger gamers — said users would be able to provide a video selfie or a government ID to its third-party age assurance partners, or would have their age inferred by Discord's system without the need for identification.

While Discord said it “successfully launched a teen-by-default experience in the UK and Australia” in 2025, the company faced backlash in November when a third-party customer service provider it used to assist with age assurance processes was breached by hackers.

Nearly all of the 70,000 users who had their data exposed in the breach were based in Australia.