ChatGPT maker OpenAI is introducing dedicated AI-based healthcare features for both public and enterprise users which can respond to health concerns, learn from users’ medical information, and assist clinicians in making diagnoses.

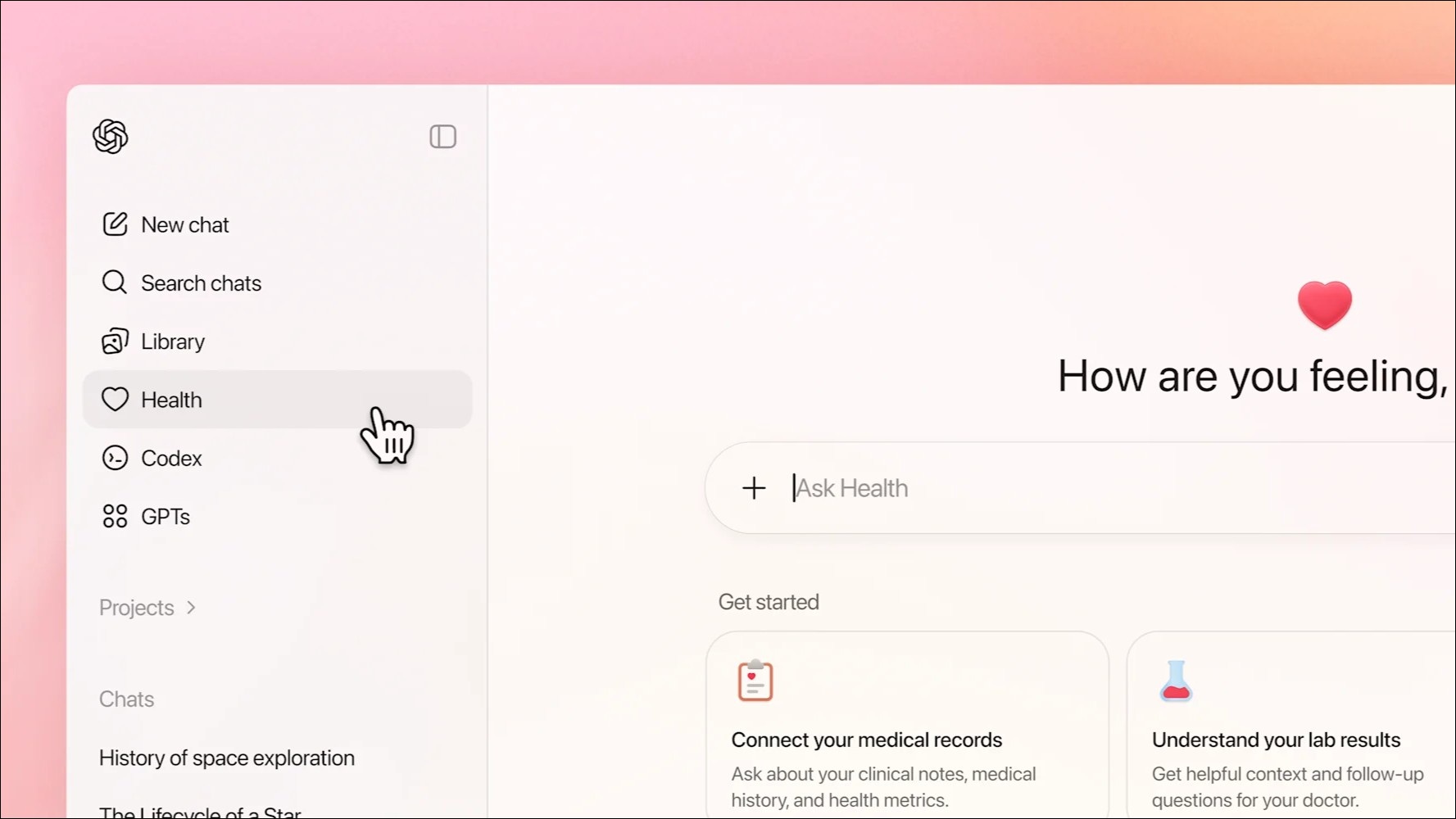

A new ChatGPT Health tab would encourage individual users to share health information by linking personal medical records and popular wellness apps such as Apple Health, Function, and MyFitnessPal, OpenAI said on Thursday.

While the company said the system was not designed to replace real medical care and was “not intended for diagnosis or treatment”, it argued it would help users “navigate everyday questions and understand patterns over time”.

Marketing material showed users asking ChatGPT Health for exercise routines, ways to relieve symptoms, help examining scans and lab results, questions to ask a specialist, comparisons of health insurance policies, and possible side effects of certain medications.

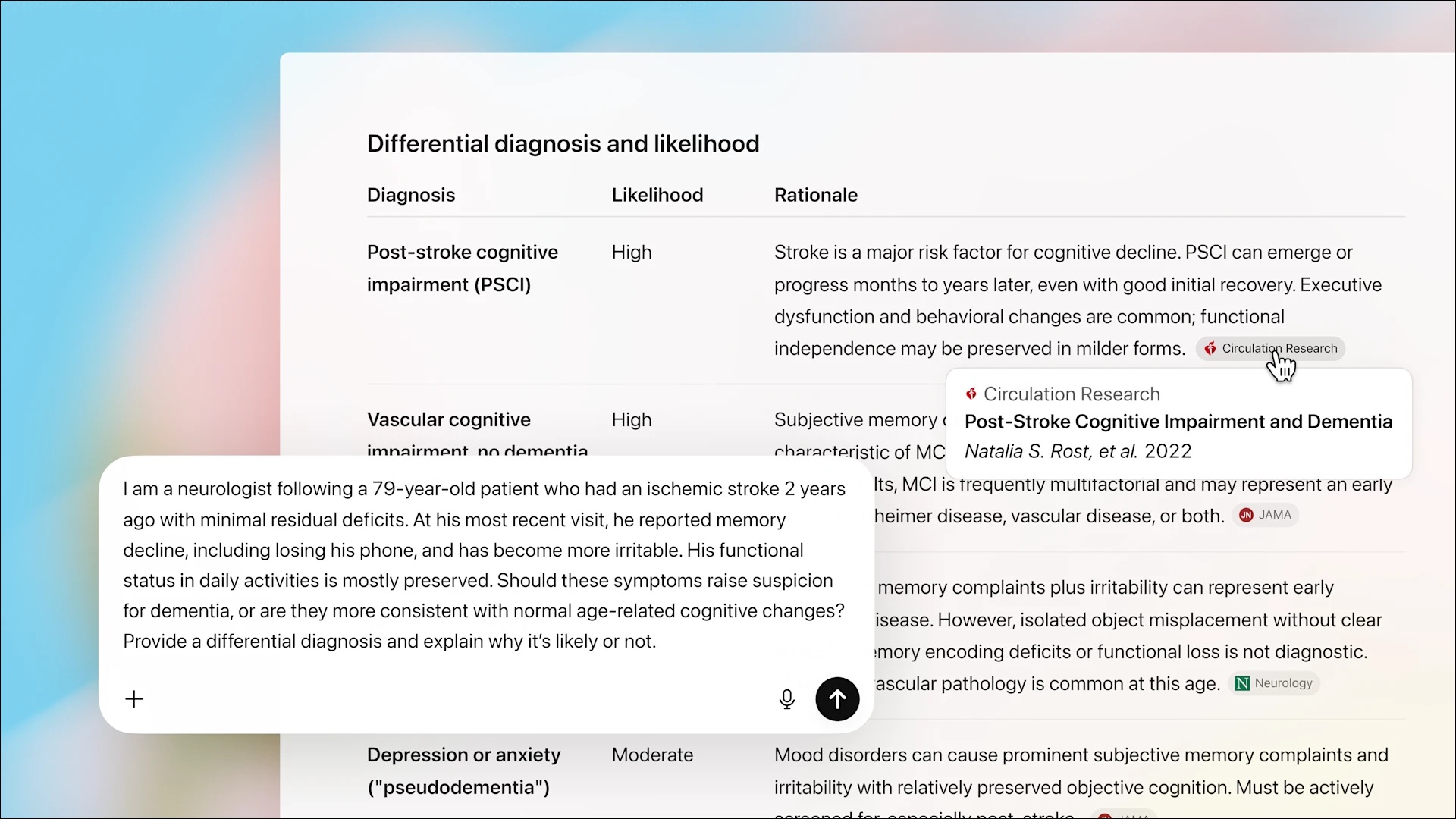

For healthcare organisations, OpenAI for Healthcare would also use ChatGPT to help clinicians with “clinical, research, and operational work”, the company announced on Friday.

The system could also retrieve and cite evidence from medical sources to support clinicians' diagnoses, OpenAI said.

Providing diagnostic help could draw the attention of regulators such as Australia’s Therapeutic Goods Administration (TGA), which is already reviewing whether AI scribes that give doctors diagnostic or treatment suggestions should be classified as medical devices, and therefore require regulatory approval.

The TGA said it “routinely monitors the market for newly released products that may meet the definition of a medical device and assesses all available information to confirm compliance with Australian regulations”.

“This may require the TGA to request additional or clarifying information from product owners regarding specific system characteristics and functions,” it said in a statement.

Will people place ‘too much trust’ in ChatGPT Health?

Professor Kirsten McCaffery, director of the Sydney Health Literacy Lab at the University of Sydney, said she was “equally excited and concerned” by the launch of ChatGPT Health, as she believed the system could help people but might also be overly trusted by some.

“The concern is that people will place too much trust in ChatGPT Health and will make health decisions based on this advice alone and not seek professional advice due to convenience or cost,” she told Information Age.

“Even if people are advised by the model not to make health decisions based on information given, it is likely many people may override this advice.

“There is also the concern that advice based on personal records may feel safer and more authoritative, again encouraging users to trust it to make decisions when there is a risk it is wrong and harmful.”

OpenAI says ChatGPT Health can gather information from a user's medical records and healthcare apps if given permission. Image: OpenAI

An Australian study co-authored by McCaffery, which surveyed a nationally representative sample of more than 2,000 people, found only one in 10 (9.9 per cent) had used ChatGPT for health queries in the first half of 2024.

However, 39 per cent of people who had not yet used ChatGPT for health concerns said they would consider doing so in the following six months.

Health questions are now “one of the most common ways people use ChatGPT”, according to OpenAI, which says more than 230 million people ask ChatGPT health and wellness-related questions each week.

“There are obvious benefits for better understanding of health information,” McCaffery said.

“We know that ChatGPT can provide information in ways that are personalised and in ways that are more understandable — i.e. it uses lay language and can translate information into different languages.

“In our own study in Australia we found that people whose first language was not English were more likely to use ChatGPT for health questions, which showed they felt they needed more help.”

OpenAI says its enterprise healthcare platform can help clinicians make diagnoses by citing evidence from medical sources and research. Image: OpenAI

Questions of accuracy, privacy, and security

The announcement of OpenAI’s consumer and enterprise health platforms comes as the company faces lawsuits over the mental health impacts of ChatGPT on some users, and after a 2025 “experiment” saw some users inadvertently make their private chats visible to search engines.

It also comes as cybercriminals continue to target platforms that hold sensitive, and therefore lucrative, medical information, which is often held to ransom once it has been stolen.

OpenAI has promised to protect sensitive health information “with enhanced privacy”, including purpose-built encryption, and said it would not use ChatGPT Health conversations to train its foundation models.

The integration of medical records and some wellness apps would also be available only in the United States, where the company said it had partnered with third-party AI health data platform B.well.

Any connected wellness apps “must meet OpenAI’s privacy and security requirements, including collecting only the minimum data needed, and undergo additional security review specific to inclusion in Health”, the company said.

Industry figures have also raised concerns over whether ChatGPT Health conversations could be requested in future legal cases, as OpenAI recently lost a bid to prevent 20 million anonymised ChatGPT logs from being handed to lawyers for media organisations that are suing it over copyright infringement.

On the enterprise side, ChatGPT for Healthcare would provide clinicians with “transparent citations” from medical sources, OpenAI said.

However, large language models (LLMs) such as ChatGPT and Google’s Gemini sometimes produce incorrect information, colloquially known as hallucinations, such as incorrect citations or advice to put glue on pizza.

Google this week removed several AI responses to health questions from its popular search engine after The Guardian found Gemini was providing some false and misleading responses.

The incident is “a perfect example” of why the ‘move fast and break things’ ethos common across much of the AI industry “can’t work in health care”, according to McCaffery.

“If something breaks or goes wrong, someone can die or be seriously harmed,” she said.

More than 260 physicians were consulted during the development of ChatGPT Health and OpenAI for Healthcare, “to understand what makes an answer to a health question helpful or potentially harmful”, OpenAI said.

Due to stricter privacy regulations in some jurisdictions, only ChatGPT users outside the European Economic Area, Switzerland, and the United Kingdom would be eligible to use ChatGPT Health, the company added.

OpenAI has launched a waitlist for users who wish to gain early access to ChatGPT Health, and said it planned to expand access to all users “in the coming weeks”.

American AI company Anthropic also announced new healthcare features for its Claude chatbot on Sunday, allowing US users to integrate their health records and apps.

This story was updated on 13/01/26 to include a comment from the TGA.